AWS Lambda has supported Java since mid-2015. Lambda provides the Amazon Linux build of OpenJDK 1.8, which means it can run any language that compiles to bytecode for the Java Virtual Machine (JVM). Many popular languages target the JVM, such as Scala, Groovy and Kotlin.

So, let’s try to run Kotlin on a Lambda function.

I picked Kotlin for this proof of concept because it is easy to set up and widely adopted by developers for mobile, server and even client (front-end) programming. The official page states that Kotlin is designed with Java interoperability in mind, so we can also test how some existing Java libraries perform with Kotlin in an AWS Lambda function.

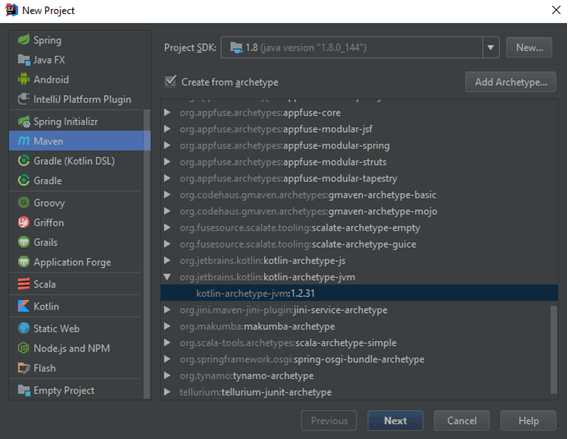

For this example we will use project templates (archetypes), which help us quickly set up our first Kotlin project. First we create a new Maven project and select an archetype.

- File -> New -> Project

- Maven -> Create from archetype -> org.jetbrains.kotlin:kotlin-archetype-jvm -> Next

- Follow the instructions and pick a project name

- Create src/main/kotlin/app/Main.kt

When selecting an archetype, make sure to pick the JVM Kotlin archetype: Kotlin compiles to both JVM bytecode and JavaScript, and there is a separate archetype for JavaScript environments.

After setting up your project add the following dependency to your pom.xml file.

    <dependency>
        <groupId>com.sparkjava</groupId>
        <artifactId>spark-core</artifactId>
        <version>2.7.2</version>
    </dependency>

We’ll be using the Spark microframework for this example. Spark is similar to Express for Node.js, and I find it really good for prototyping and for quickly developing versatile solutions on short notice.

Please note that the Spark-Kotlin library is still in an early alpha release, so we will use the Spark-Java library instead. Because Java and Kotlin are interoperable, it works just as well.

We need one more thing to make our Spark application work in a Lambda function. Lambda invokes a function with an event object, while Spark expects an HTTP request. That is why AWS has created a “bridge” that transforms Lambda events into Spark requests.
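To make the idea of the bridge concrete, here is a toy sketch of the kind of translation it performs. It is not the library's implementation: ProxyEvent and HttpRequest are hypothetical types invented for this illustration, and only the field names httpMethod, path and queryStringParameters mirror the API Gateway proxy event.

```kotlin
import java.net.URLEncoder

// Toy model of an API Gateway proxy event; the real event carries many more fields.
data class ProxyEvent(
    val httpMethod: String,
    val path: String,
    val queryStringParameters: Map<String, String>? = null
)

// Toy model of what an HTTP framework wants to see (hypothetical, not a Spark class).
data class HttpRequest(val method: String, val uri: String)

// Rebuild a request line from the event, re-encoding the query parameters.
fun toHttpRequest(event: ProxyEvent): HttpRequest {
    val query = event.queryStringParameters.orEmpty().entries.joinToString("&") { (key, value) ->
        URLEncoder.encode(key, "UTF-8") + "=" + URLEncoder.encode(value, "UTF-8")
    }
    val uri = if (query.isEmpty()) event.path else event.path + "?" + query
    return HttpRequest(event.httpMethod, uri)
}
```

The real bridge does considerably more (headers, body, response conversion), but the principle is the same: the event is unpacked into something the framework's router can understand.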

We will add the AWS dependencies for container initialisation and Spark integration to the pom.xml file.

    <dependency>
        <groupId>com.amazonaws</groupId>
        <artifactId>aws-lambda-java-core</artifactId>
        <version>${java-core.version}</version>
    </dependency>

    <dependency>
        <groupId>com.amazonaws.serverless</groupId>
        <artifactId>aws-serverless-java-container-spark</artifactId>
        <version>1.0.1</version>
    </dependency>

The java-core.version property must be defined in the properties section of your pom.xml.

In our example we will create a Handler class that implements RequestHandler.

We override the handleRequest method, which receives the request each time the Lambda function is invoked.

There we define the routes and forward the request and the context to the Spark Lambda container handler.

Note that before forwarding the request to SparkLambdaContainerHandler, we check whether the routes have already been defined, and define them if they have not. This matters because Lambda usually shuts down an idle function after about 5 minutes and starts a fresh instance as soon as the next request arrives, and a fresh instance has no routes registered.
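The steps above can be sketched roughly as follows. This is a minimal sketch rather than the exact code from the repository: the package name app, the initialized flag and the route bodies are assumptions made for illustration, the :name path parameter is a guess at what the second test route looks like, and the import paths are the ones I recall from the 1.x releases of aws-serverless-java-container, so check them against the version you use.

```kotlin
package app

import com.amazonaws.serverless.proxy.model.AwsProxyRequest
import com.amazonaws.serverless.proxy.model.AwsProxyResponse
import com.amazonaws.serverless.proxy.spark.SparkLambdaContainerHandler
import com.amazonaws.services.lambda.runtime.Context
import com.amazonaws.services.lambda.runtime.RequestHandler
import spark.Spark

class Handler : RequestHandler<AwsProxyRequest, AwsProxyResponse> {

    // The bridge that turns API Gateway proxy events into Spark requests.
    private val handler = SparkLambdaContainerHandler.getAwsProxyHandler()

    // False on a fresh instance, so routes are registered once per instance.
    private var initialized = false

    override fun handleRequest(request: AwsProxyRequest, context: Context): AwsProxyResponse {
        if (!initialized) {
            defineRoutes()
            Spark.awaitInitialization() // wait until Spark has registered the routes
            initialized = true
        }
        // Forward the request and the context to the Spark Lambda container handler.
        return handler.proxy(request, context)
    }

    // Hypothetical routes matching the two test requests used in this article.
    private fun defineRoutes() {
        Spark.get("/greetings") { _, _ -> "Hello, World!" }
        Spark.get("/greetings/:name") { req, _ -> "Hello, ${req.params(":name")}!" }
    }
}
```

If you keep the package and class name used in this sketch, the handler setting in the Lambda console would be app.Handler::handleRequest.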

You can grab the code from GitHub.

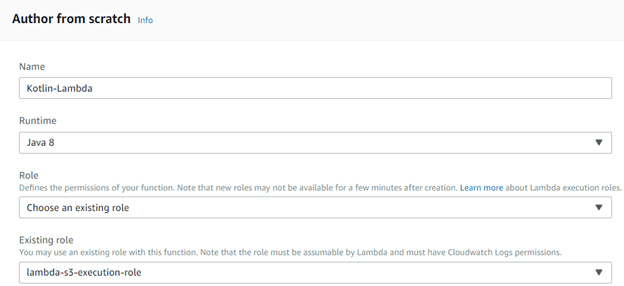

After that we package our code with Maven, create a Lambda function and upload the code.

Run mvn package to build the jar. The jar you upload must contain your dependencies as well as your own classes (for example, by building it with the Maven Shade plugin). Then sign in to the AWS console and create a Lambda function.

When creating the Lambda function, make sure you pick the Java 8 runtime. Once the function is created, open it and set the appropriate handler.

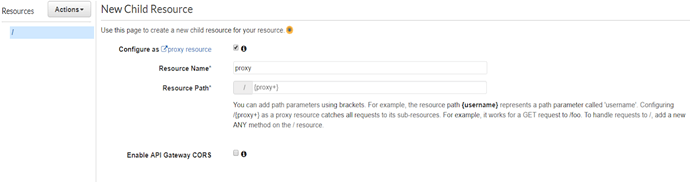

Finally, we need to create an API using API Gateway. Simply click the Create API button and follow the AWS setup instructions to create your API.

After that, go to Actions -> Create Resource and select the {proxy+} resource.

A {proxy+} resource forwards all requests coming to API Gateway to the Lambda function, and the Spark router takes care of all the routing and responses.
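Since every path now reaches the same function, the routing decision moves from API Gateway into the application. The toy router below illustrates that idea only; it is not how Spark is implemented, and Route, match and dispatch are hypothetical names made up for this sketch.

```kotlin
// A method and path pattern paired with a handler (toy type, not Spark's Route).
class Route(val method: String, val pattern: String, val handler: (Map<String, String>) -> String)

// Match a concrete path against a pattern; returns captured ":param" values, or null on mismatch.
fun match(pattern: String, path: String): Map<String, String>? {
    val patternParts = pattern.trim('/').split('/')
    val pathParts = path.trim('/').split('/')
    if (patternParts.size != pathParts.size) return null
    val params = mutableMapOf<String, String>()
    for ((pat, seg) in patternParts.zip(pathParts)) {
        when {
            pat.startsWith(":") -> params[pat] = seg
            pat != seg -> return null
        }
    }
    return params
}

// Try the routes in order and run the first one that matches.
fun dispatch(routes: List<Route>, method: String, path: String): String {
    for (route in routes) {
        if (route.method != method) continue
        val params = match(route.pattern, path) ?: continue
        return route.handler(params)
    }
    return "404 Not Found"
}

val routes = listOf(
    Route("GET", "/greetings") { "Hello, World!" },
    Route("GET", "/greetings/:name") { params -> "Hello, ${params[":name"]}!" }
)
```

With these routes, dispatch(routes, "GET", "/greetings/Ana") picks the second route, while an unknown path falls through to the 404 response. In the real setup, API Gateway passes the full path in the event and Spark performs this lookup.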

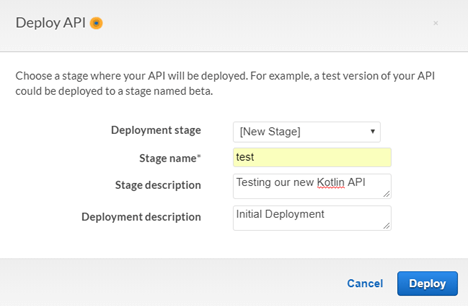

Then open the Actions dropdown and select “Deploy API”. Create a new stage when prompted and deploy your API.

You can find your URL under Stages -> Invoke URL. Finally, make a simple GET request from your browser to check that everything works.

In order to test this example you can send two requests:

GET /greetings

GET /greetings/name
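If you would rather test from code than from the browser, a small client along these lines should do. It is a sketch under assumptions: the invoke URL in the usage note is a placeholder, greetingUrl, fetch and printGreetings are hypothetical helper names, and the name is assumed to be URL-safe since no encoding is applied.

```kotlin
import java.net.URL

// Build the URL of a greeting endpoint under the stage's invoke URL.
// The name is appended as-is, so it is assumed to contain only URL-safe characters.
fun greetingUrl(invokeUrl: String, name: String? = null): String {
    val base = invokeUrl.trimEnd('/') + "/greetings"
    return if (name == null) base else "$base/$name"
}

// Perform a blocking GET request and return the response body as text.
fun fetch(url: String): String = URL(url).readText()

// Send both test requests and print the responses.
fun printGreetings(invokeUrl: String) {
    println(fetch(greetingUrl(invokeUrl)))
    println(fetch(greetingUrl(invokeUrl, "Kotlin")))
}
```

Call printGreetings with the Invoke URL of your own stage, which has the form https://{api-id}.execute-api.{region}.amazonaws.com/{stage}.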

With the large number of well-tested libraries that Java offers, and the modularity and fast-paced development of Kotlin and Spark, you can quickly prototype apps and deploy them on Lambda.

With Lambda you pay per request, and each month you get one million requests free, which makes Lambda a cost-effective way of running your web apps.