A step-by-step introductory guide to creating an Azure cloud-native Generative AI solution specialized for bioengineering academic insights using internal microbiome data.

After so many years, I get the impression that the AI hype is more real today than ever. The first official release of ChatGPT at the end of last year (November 30, 2022) may have triggered public awareness of the power and potential of applied artificial intelligence. The revolution in the field of Natural Language Processing (NLP) has since set off a series of transformations that are shaking the IT industry and many other industries and domains of work. Only one year after the launch of ChatGPT, many things and perspectives no longer look the same. I think the technology industry is entering a transformation period that will be much more intense and dynamic than any before. I will come back to the history of applied artificial intelligence, and the ancestors of today’s popular large language models (LLMs), in one of the upcoming posts.

In the meantime, like any AI enthusiast, I actively follow the current trends in applying large language models across industries and business domains. More specifically, I have an excellent opportunity for hands-on work on the design and implementation of Retrieval Augmented Generation (RAG) solutions, which combine external knowledge with the reasoning power of large language models. Moreover, my work on several projects involves using LLMs in on-premises and cloud environments across different technology stacks and programming languages. That challenge inspired me to write this introductory article, from which I will begin to share my experience and perspectives on applied AI and Generative AI for building cutting-edge applications that will most likely be the basis of the next generation of technological achievements.

As a software engineer and architect with a mainly Microsoft background, I will open the series by presenting the potential and opportunities offered by the Azure OpenAI service. I will begin with a generalized overview of the practical application, followed by articles that elaborate on the individual components separately.

What is Azure OpenAI?

Azure OpenAI is a cloud service that Microsoft provides as a result of its partnership with OpenAI. It combines Azure’s enterprise-grade capabilities with OpenAI’s generative AI models, and it allows businesses and developers to integrate OpenAI’s language and other special-purpose models into their solutions and ecosystems. To that end, the service exposes REST APIs to the current industry-leading gpt-3.5-turbo, gpt-4, and embeddings model series.
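To make the REST part a bit more concrete, here is a minimal sketch of how a chat completions request could be assembled in Python. The resource name, deployment name, and key are placeholders of my own, and the `api-version` value is an assumption that you should check against the version available to you. The function only builds the request and does not send it.

```python
import json
import urllib.request


def build_chat_request(resource, deployment, api_key, user_message,
                       api_version="2023-05-15"):
    """Build (but do not send) a chat completions request for Azure OpenAI."""
    # The URL targets a named model deployment inside an Azure OpenAI resource.
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = {"messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        # Azure OpenAI authenticates key-based calls with the "api-key" header.
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


request = build_chat_request(
    resource="my-resource",          # hypothetical resource name
    deployment="gpt-35-turbo-16k",   # hypothetical deployment name
    api_key="<your-api-key>",        # placeholder, never hard-code real keys
    user_message="What is the gut microbiome?",
)
print(request.full_url)
# To actually call the service: urllib.request.urlopen(request)
```

In a real project you would more likely use an official SDK, but the raw request shows what the service expects: a deployment-specific URL, an API version, and a list of chat messages.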

Why Azure OpenAI?

Microsoft and OpenAI have joined forces to bring the advanced capabilities of OpenAI’s language models to the Azure cloud platform. The idea under the hood is straightforward: providing seamless access to the cutting-edge language processing capabilities of OpenAI without the complexities of managing the underlying infrastructure. The Azure OpenAI Service therefore lets developers focus on building innovative applications that use state-of-the-art natural language processing within the Microsoft Azure ecosystem and under its customer policies. For instance, one benefit of using the Azure OpenAI service is data privacy and security: the prompts (inputs), completions (outputs), embeddings, and customer-specific training data are not made available to or shared with OpenAI, and are not used to improve any Microsoft, OpenAI, or third-party products or services. In addition, Azure is the native cloud environment for developers working within the Microsoft ecosystem. I will not thoroughly discuss the differences between the Microsoft Azure OpenAI and OpenAI services, since they deserve a separate topic with two different perspectives and are beyond the scope of this article.

Practical applications of LLMs

We are currently witnessing a rapid expansion of applications and startups building solutions on top of various LLMs. This affects many industries and technical domains: chat applications and chatbots for client care or customer support, agents for intelligent information search, digital assistants that generate content for different tones, vocabularies and target audiences, summarization applications, translation modules, campaign management, code generation and code quality checks, and many others. In this context, a prevalent approach is the so-called RAG, an approach for improving the quality of LLM-generated responses by grounding the model on external sources of knowledge that supplement the LLM’s internal representation of information. Behind the scenes, the LLM is constrained to understand and respond based only on our target data, while still using its full semantic potential for human-like understanding and reasoning. RAG also mitigates some limitations of current-generation LLMs, such as token limits, potential hallucinations, and the lack of up-to-date or confidential knowledge. RAG is a compelling concept that deserves a dedicated article, so I will cover its design and benefits, as well as some other principles, in more detail in one of the following posts.
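As a rough illustration of the RAG idea, the sketch below retrieves the most relevant text chunks for a question and places them into the prompt as the only allowed sources. It is a toy under stated assumptions: the retriever just counts shared words (real systems use a search index or embeddings), and the chunks, function names, and prompt wording are all made up for this example.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, chunks, top_k=2):
    """Rank chunks by how many question words they share (toy retriever)."""
    question_words = tokenize(question)
    ranked = sorted(
        chunks,
        key=lambda chunk: len(question_words & tokenize(chunk)),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(question, chunks, top_k=2):
    """Combine the retrieved chunks and the question into one grounded prompt."""
    sources = retrieve(question, chunks, top_k)
    context = "\n".join(f"[{i + 1}] {text}" for i, text in enumerate(sources))
    return (
        "Answer using only the sources below. "
        "If the answer is not there, say you do not know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


# Illustrative chunks only; in a real setup these come from your own documents.
chunks = [
    "Azure OpenAI exposes REST APIs for chat and embeddings models.",
    "A resource group is a container for related Azure resources.",
    "Retrieval Augmented Generation grounds a model on external knowledge.",
]
print(build_grounded_prompt("What is a resource group?", chunks))
```

The important design point is that the model never sees the whole knowledge base, only the few retrieved chunks, which is how RAG works around token limits and keeps answers tied to the target data.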

Use case

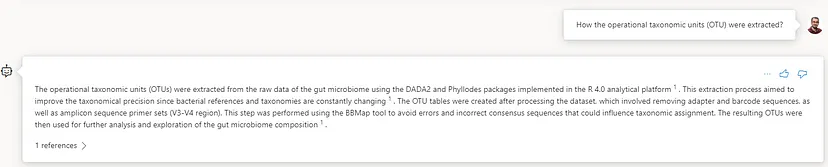

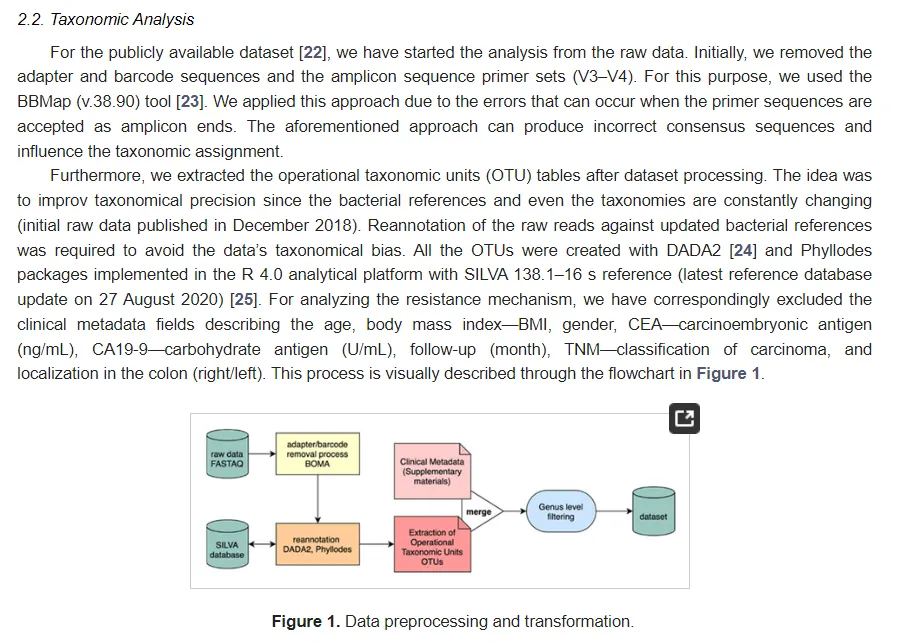

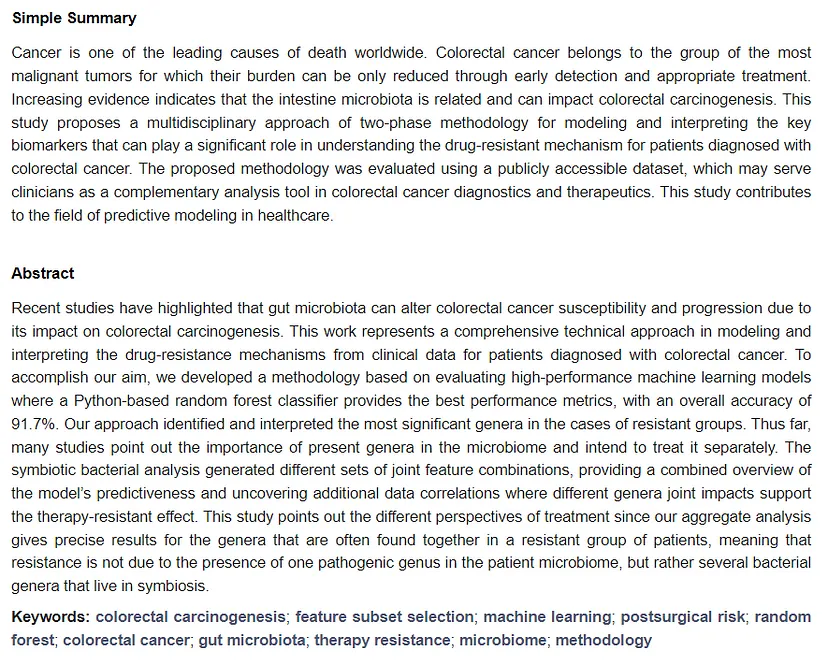

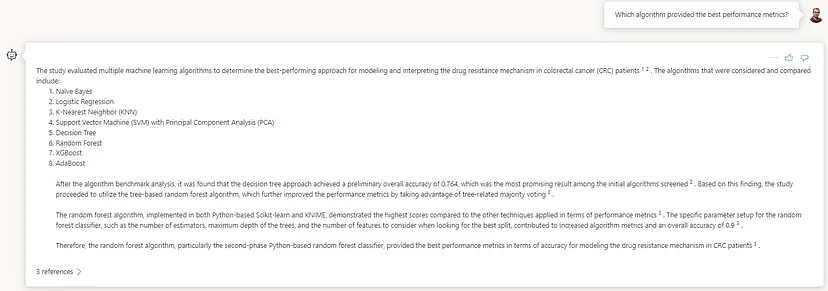

In addition to my professional career as a software engineer, I am building my interest and expertise in the academic field of bioengineering. More specifically, I am researching the design and implementation of a methodology to investigate colorectal cancer (CRC) carcinogenesis and drug-resistance mechanisms by applying machine learning techniques to microbiome data. This bioinformatics field is probably still in its infancy and abounds with publications of both a technical and a biological nature. The multidisciplinary character of the subject makes it harder to interpret, summarize, or search for specific results related to particular research points. In addition, there is currently no established de facto methodology for the individual research phases, which makes the process more dependent on comparative analysis, review of previous knowledge and achievements, and current trends that yield promising results. To this end, in this article I will present my approach to exploiting the potential of LLMs to search and summarize specific methods, approaches, and conclusions related to one of my publications in this field. I will do that by using Azure OpenAI and its built-in RAG-based “Add your Data” preview feature, officially announced on May 23rd this year.

Prerequisites

The only prerequisites for proceeding with the practical solution design are an active Microsoft Azure subscription and an approved access request for the Azure OpenAI Service, which can be submitted via https://aka.ms/oai/access .

Technical approach

Without further ado, let’s move on to the design and implementation of the solution. As mentioned before, this is a generalized walkthrough of using the Azure OpenAI service out of the box: we keep the default configuration settings and apply no specific configuration or advanced data processing techniques.

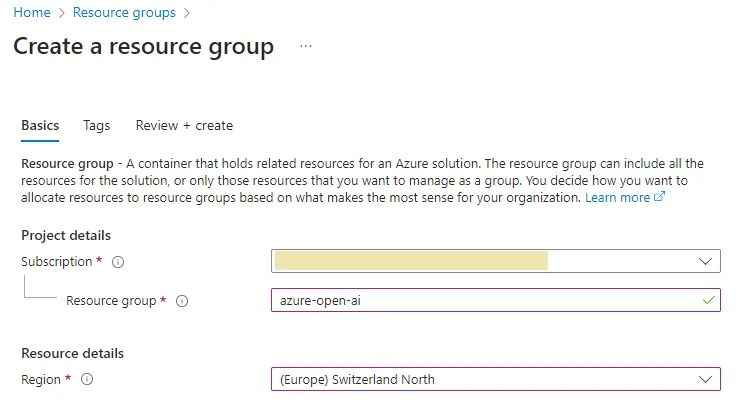

Let’s start by creating a dedicated resource group in which we will create and place all necessary resources. A resource group is basically a container that holds logically related resources for a specific Azure solution so they can be managed together.

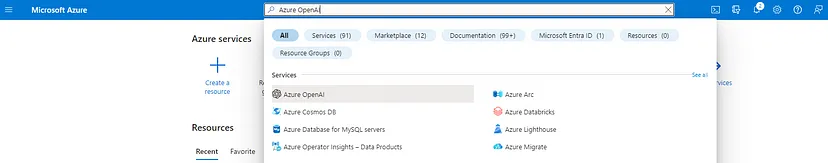

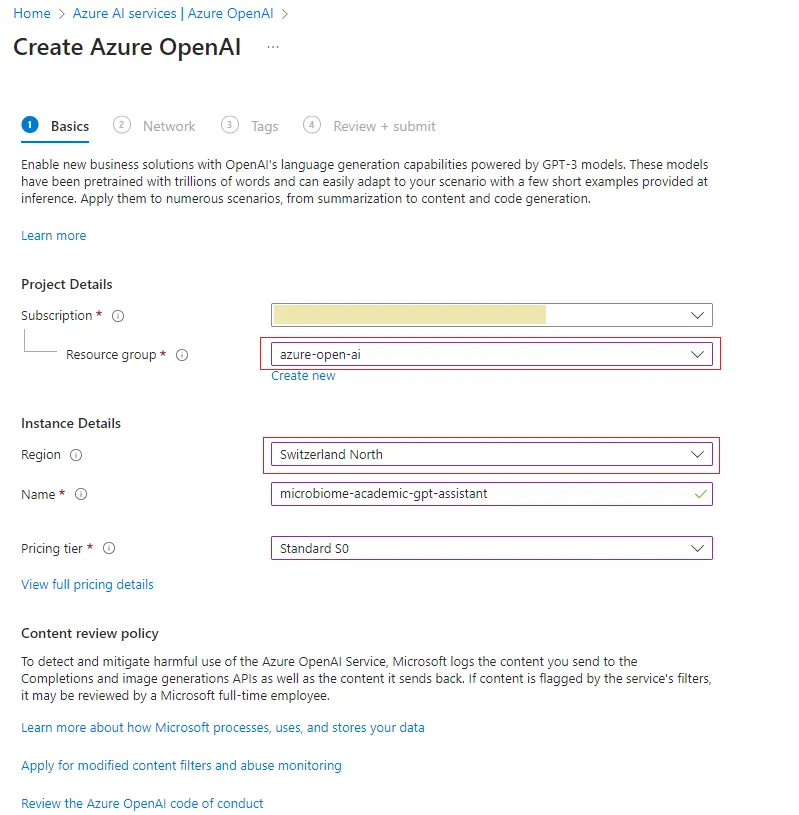

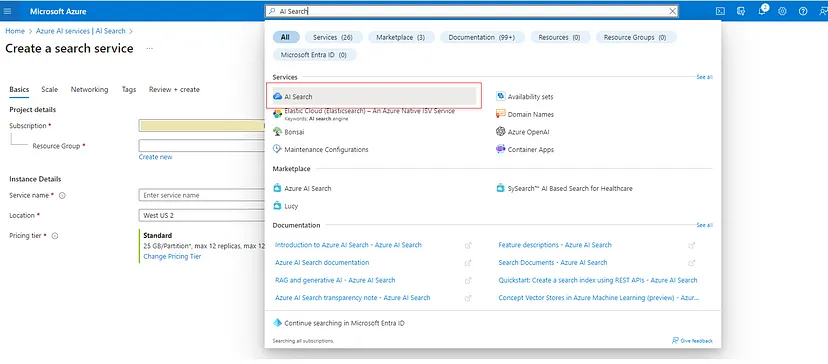

As stated before, the next logical step is to search for and create the Azure OpenAI instance.

We will create the instance in the Switzerland North region, as it currently provides both the standard and the extended-context versions of the gpt-3.5-turbo and gpt-4 models.

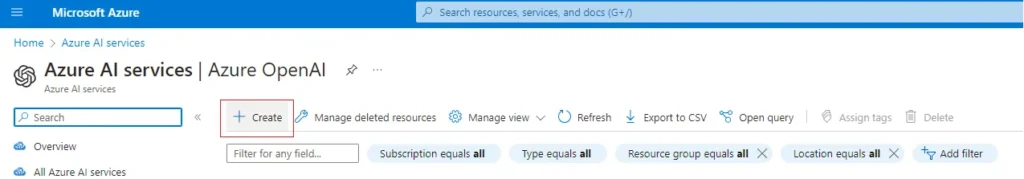

After this service is ready, we can continue with the creation of a corresponding Azure OpenAI instance.

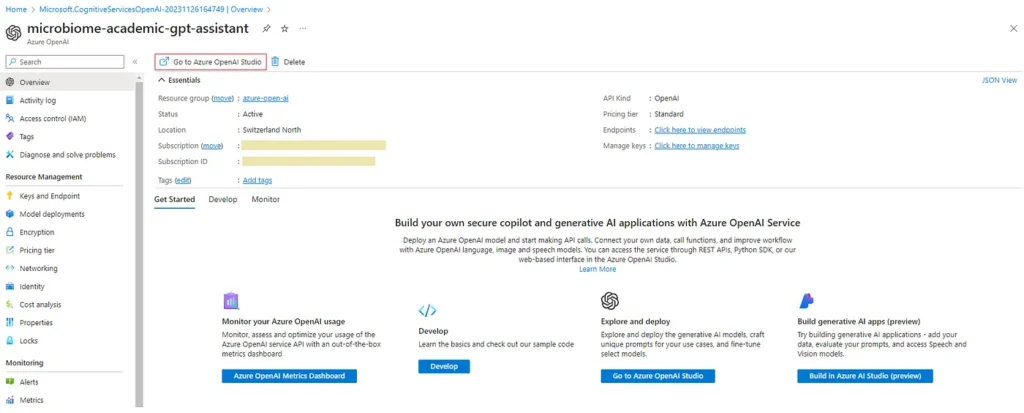

After completing the deployment, we can access the Azure OpenAI instance overview page, which has a direct link to the natively provided Azure OpenAI Studio.

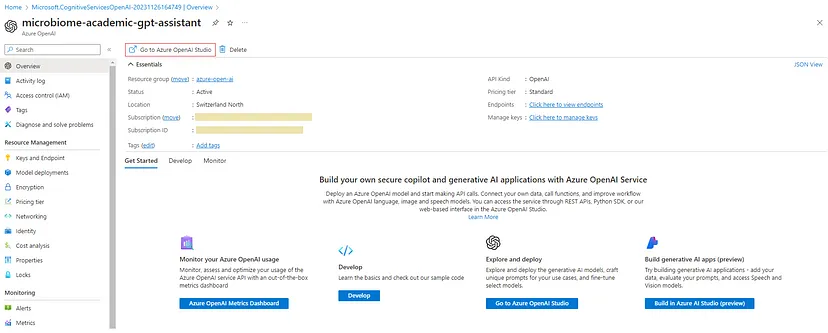

Following the link takes us directly to the Azure OpenAI Studio overview page, where we have access to different features, services and models.

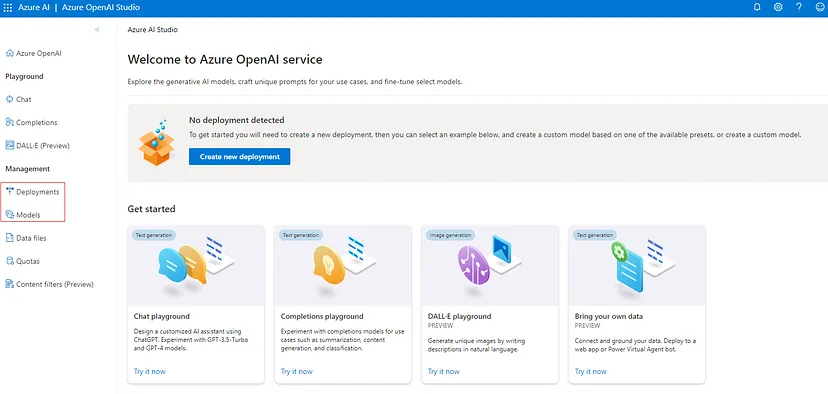

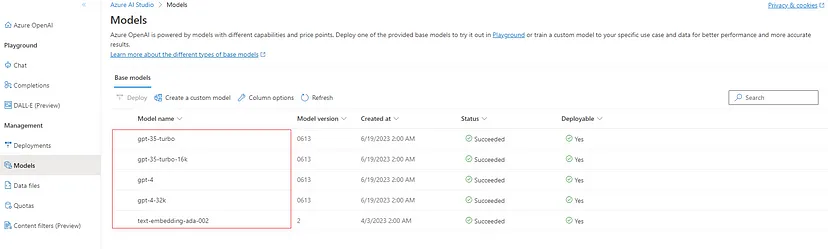

For example, we can check the ‘Models’ module, where we can see all models supported within the selected Azure OpenAI instance (model availability depends on the region; for more information, refer to the following page).

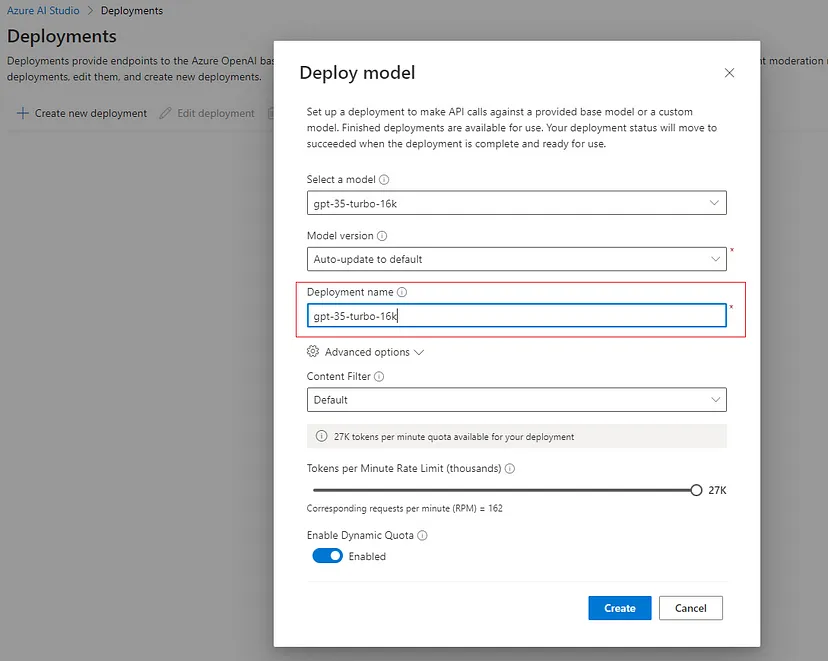

The next step is to create a new deployment and select the model we will interact with. For the purpose of the demo, we will use the 16K token version of the gpt-3.5-turbo model.

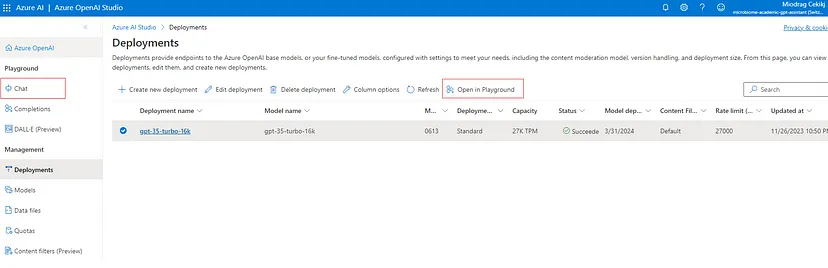

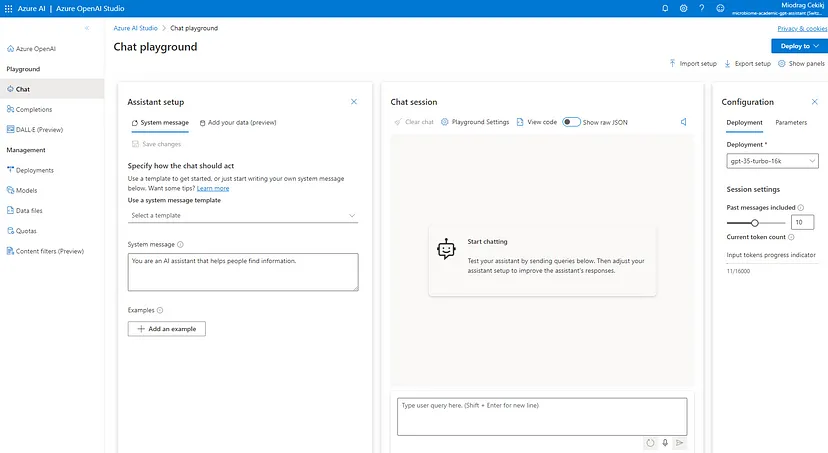

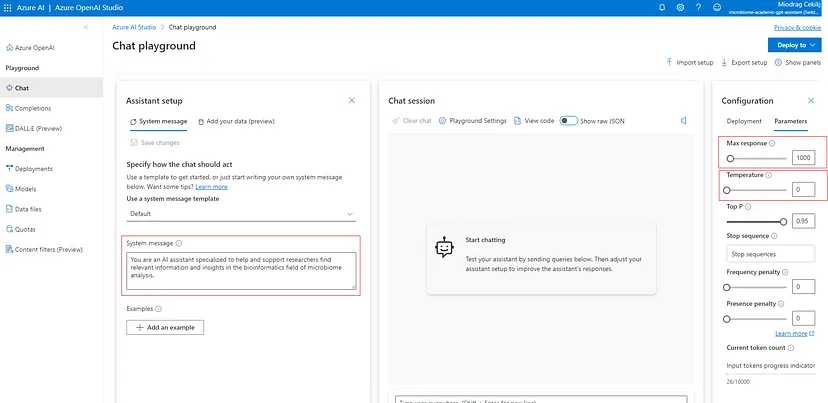

With that, we are almost done and ready to open the ‘Chat’ module, or Azure AI Studio Chat Playground. This is the main place where we will configure and interact with the selected model.

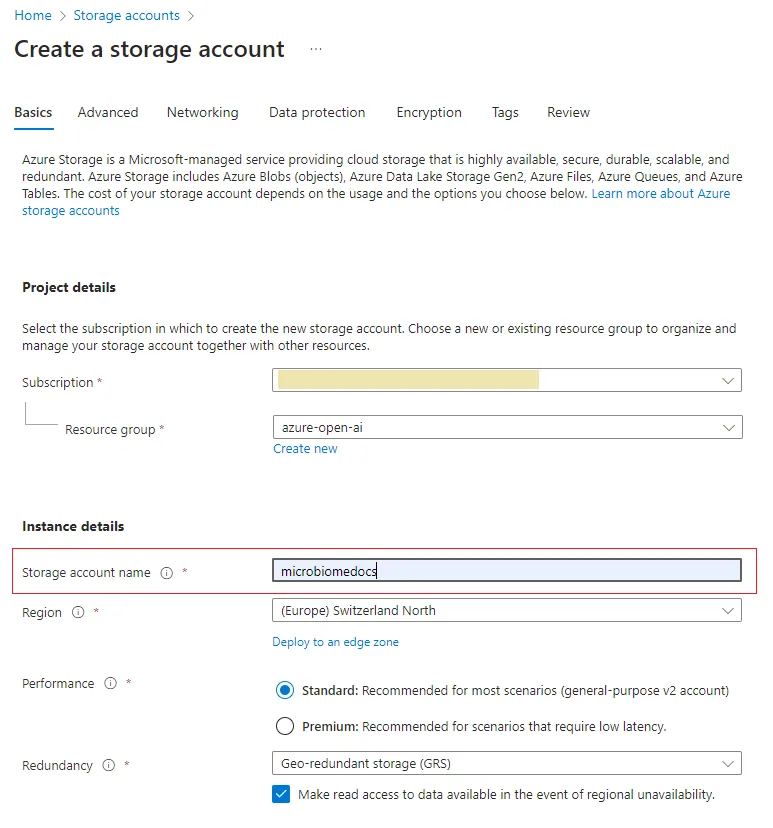

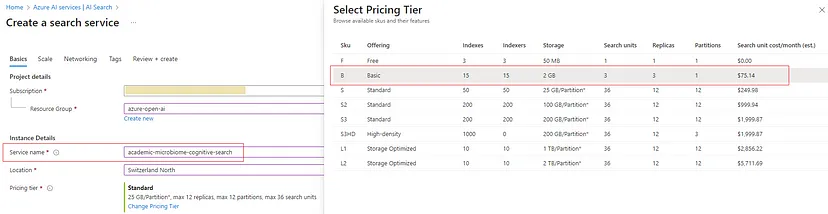

The only things left before showcasing the scenario are creating the Storage Account and the Cognitive Search (aka AI Search) resources. The Cognitive Search service is the core component of any RAG-powered application, and I will prepare a separate article to elaborate on it in more detail.

Now we are ready to set up the chat interaction. First, we will modify the system message, which gives the model instructions about how it should behave and any context it should reference when generating a response. Additionally, we will set the ‘Max response’ parameter to 1000 tokens, which gives the model enough room to express itself in each answer. The ‘Temperature’ will be set to 0, which makes the model pick the most likely continuation at each step, so the generated content is as close to deterministic as the service allows. I will also cover all of the OpenAI model parameters in a separate article, where we will delve deeper into how to configure them depending on the data context and use-case scenario.
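To make the effect of ‘Temperature’ more tangible, here is a minimal sketch of temperature-scaled softmax, which is how sampling temperature is usually described. It is an illustration only and not the service’s actual implementation; the function name and the `logits` values are made up. For reference, in the REST API these two playground settings correspond to the `max_tokens` and `temperature` request fields.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Limit case: all probability goes to the highest-scoring token (greedy choice).
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for temperature in (1.0, 0.5, 0.0):
    probabilities = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probabilities])
```

Lower temperatures concentrate more probability on the top-scoring token, and at 0 the choice collapses to that token alone, which is why the same question tends to produce the same answer.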

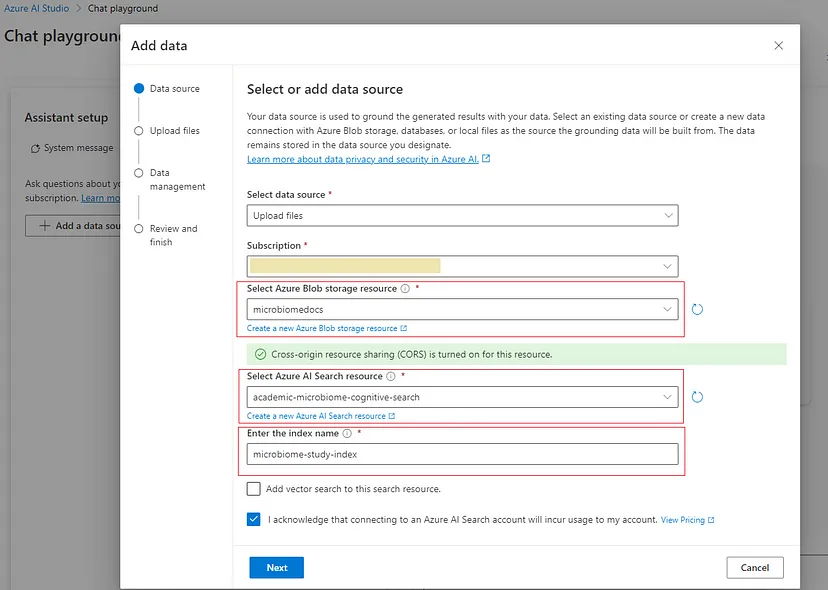

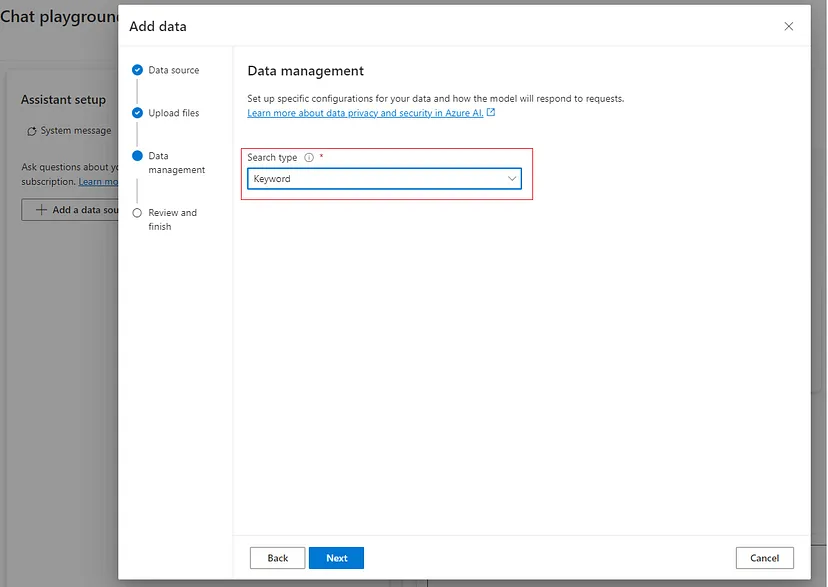

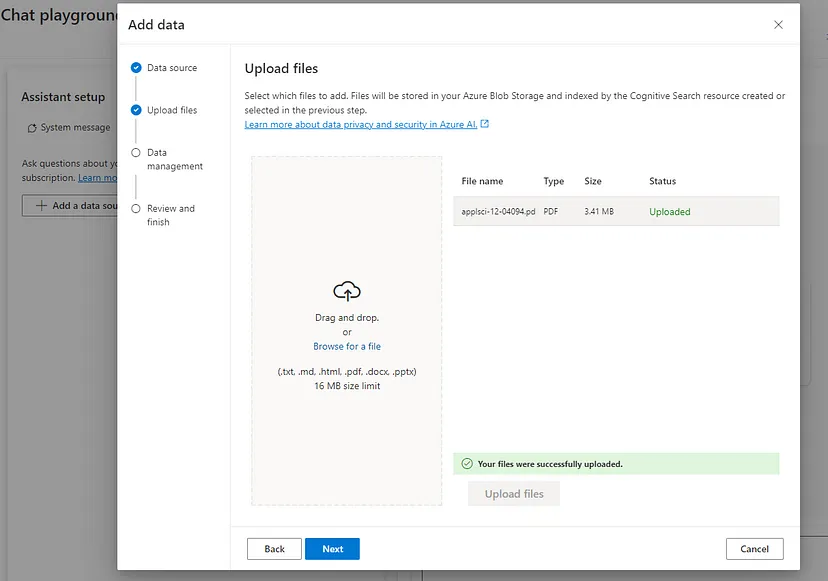

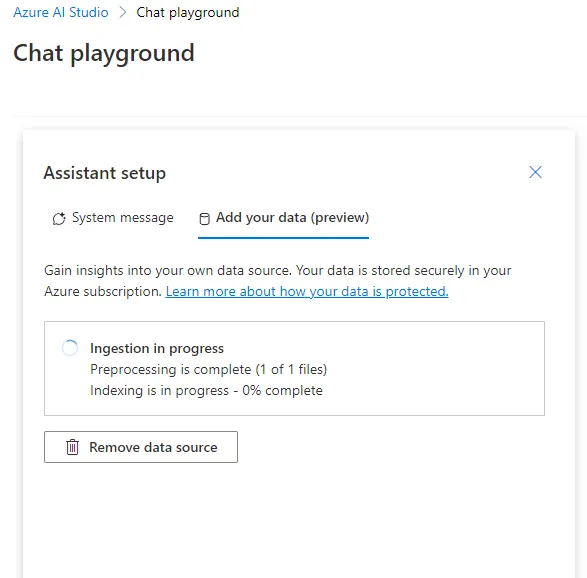

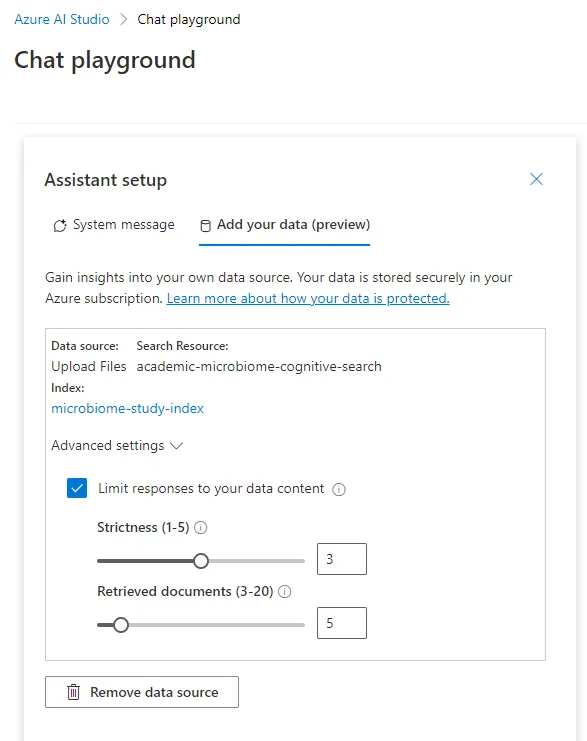

Then we will select the next, very important tab, ‘Add your data (preview)’. This is where we will import the downloaded PDF version of my Applied Sciences (MDPI) article ‘Understanding the Role of the Microbiome in Cancer Diagnostics and Therapeutics by Creating and Utilizing ML Models’, published in the section Applied Biosciences and Bioengineering. It will be the only file used for creating the index within the Cognitive Search instance.
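Before a document can be searched, its text is typically split into smaller chunks that are indexed individually. The sketch below shows one simple way to do that with overlapping word windows. The sizes and the function name are my own assumptions for illustration, and this is not a description of what the ‘Add your data’ feature does internally.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into overlapping windows of words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


# Tiny demo with placeholder words instead of real article text.
sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(sample, chunk_size=4, overlap=1):
    print(chunk)
```

The overlap keeps sentences that straddle a chunk boundary retrievable from at least one chunk, at the cost of indexing some words twice.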

* Note: If you are interested in learning more about the research topic, I have your back, since I have already published summarized action points and other practical and scientific perspectives in a series of consecutive articles starting here.

I will also cover the data-related parameters (‘Strictness’ and ‘Retrieved documents’) in more detail, through concrete business-tailored scenarios, in one of the upcoming posts within the series.
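For readers who prefer code over the playground, here is a hedged sketch of how the same data-grounded setup could be expressed as a request body. The field names (`dataSources`, `AzureCognitiveSearch`, `strictness`, `topNDocuments`, `inScope`) reflect the preview API as I understand it, and the default values shown are assumptions, so please verify everything against the API reference for the version you use. In the preview versions I am aware of, such a body was posted to an `extensions/chat/completions` path of the deployment. All endpoint, key, and index values are placeholders.

```python
import json


def build_on_your_data_body(question, search_endpoint, search_key, index_name,
                            strictness=3, top_n_documents=5):
    """Assemble a chat request body that grounds the model on a search index."""
    return {
        "dataSources": [
            {
                "type": "AzureCognitiveSearch",
                "parameters": {
                    "endpoint": search_endpoint,
                    "key": search_key,
                    "indexName": index_name,
                    # 'Strictness' in the playground: how aggressively
                    # weakly related chunks are filtered out.
                    "strictness": strictness,
                    # 'Retrieved documents' in the playground: how many
                    # chunks are passed to the model.
                    "topNDocuments": top_n_documents,
                    # Restrict answers to the indexed data only.
                    "inScope": True,
                },
            }
        ],
        "messages": [{"role": "user", "content": question}],
        "temperature": 0,
        "max_tokens": 1000,
    }


body = build_on_your_data_body(
    question="Which ML models were used in the study?",
    search_endpoint="https://<your-search-service>.search.windows.net",
    search_key="<your-search-key>",
    index_name="<your-index-name>",
)
print(json.dumps(body, indent=2))
```

The two data-related settings are a trade-off: retrieving more documents gives the model more context but uses more tokens, while higher strictness reduces noise at the risk of dropping relevant passages.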

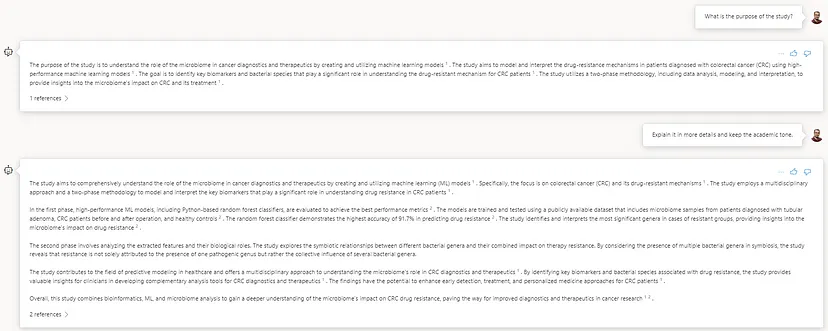

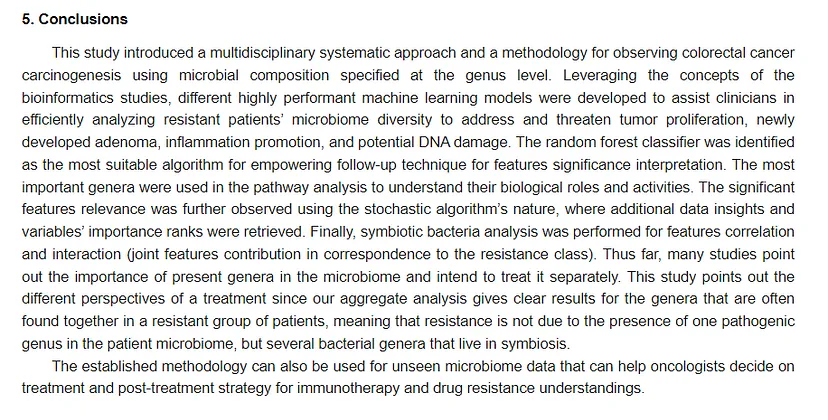

Now it is time to evaluate the digital assistant’s cognitive intelligence and its specialization on the previously imported academic article.

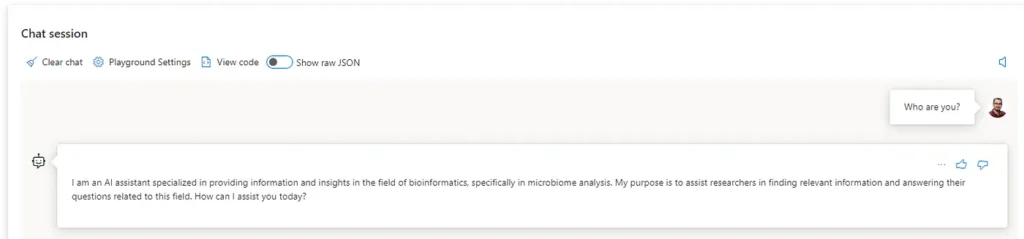

As usual, the first question is reserved for the handshake, making first contact by leveraging the previously defined system message.

Next, I will present all the different questions I asked, followed by some of the content from the original article itself. That way, you won’t need prior scientific domain knowledge to determine whether the results are relevant. However, I encourage you to read the study so that you can verify that the generated responses are drawn from the full content of the article.

Key Takeaways

We must agree that the results are pretty decent and promising, considering we are using Azure OpenAI in its default configuration without any data processing or formatting. Someone might argue that it is dealing with just one PDF file, but as mentioned before, this guide aims to showcase the general approach and the potential of the service itself. We will dive deeper into more complex scenarios in the upcoming articles, uncovering other features provided by each component accordingly.

It is also worth mentioning the potential of this approach for scientific purposes, where professionals can really benefit from having information available through human-like conversation and reasoning.

Thank you for taking the time to delve into my article. Your commitment to engaging with the content is genuinely appreciated, so feel free to leave a comment or open a discussion about it. I appreciate your attention, curiosity, and open-mindedness and sincerely hope the information provided was valuable and enjoyable for you. Also, stay tuned: there will be much more about LLMs and related topics.

In the next article we will continue with a general overview of the functionalities currently offered by the Azure OpenAI Studio, explicitly covering the Chat Playground part.