Analyzing images and video content by leveraging the power of Natural Language Processing and Visual Understanding in Azure OpenAI

GPT-4 Turbo with Vision on Azure OpenAI is now officially available in public preview. But what is GPT-4 Turbo with Vision (GPT-4V)?

GPT-4 Turbo with Vision

GPT-4 Turbo with Vision is a Large Multimodal Model (LMM) developed by OpenAI that can analyze graphical content and provide textual responses to questions about it. Like other LMMs, it can digest information in more than one “modality” (here, text combined with images) and generate answers based on that content. GPT-4 Turbo with Vision builds upon the existing capabilities of the GPT-4 Turbo model: besides the larger context window and the more comprehensive, practical reasoning over textual data, it now also offers visual analysis.

The core concepts and critical capabilities of the GPT-4V model are:

- Visual Data Analysis – the ability to interpret and analyze data presented in graphical formats such as graphs, charts, and other visualizations.

- Object Detection – the ability to recognize or identify different shapes and objects within the images.

- Text deciphering – the ability to understand and interpret handwritten notes and documents.

Sounds really cool, so let's give it a try!

GPT-4V Azure OpenAI Setup

Currently, the GPT-4V model is available across the following Azure regions: SwitzerlandNorth, SwedenCentral, WestUS, and AustraliaEast. We can use any of them to create a deployment and load the model in the Chat Playground.

* The model is also available through the Chat Completion API.
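For readers who prefer the API over the Playground, here is a minimal sketch of what a Chat Completion request with an image could look like. It only builds the request and does not send it. The resource name, deployment name, key, and image URL are placeholders, and the `2023-12-01-preview` API version is an assumption that should be checked against the current Azure OpenAI documentation. The helper name `build_vision_request` is hypothetical.

```python
import json
import urllib.request

# Assumption: preview API version; verify against the current Azure OpenAI docs.
API_VERSION = "2023-12-01-preview"


def build_vision_request(resource, deployment, api_key, prompt, image_url, max_tokens=300):
    """Build (but do not send) a Chat Completion request containing one image."""
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={API_VERSION}"
    )
    body = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {
                "role": "user",
                # A vision prompt mixes text parts and image parts in one message.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
        ],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Placeholder values, not real resources or credentials.
request = build_vision_request(
    resource="my-resource",
    deployment="my-gpt4v-deployment",
    api_key="<your-api-key>",
    prompt="Describe this picture.",
    image_url="https://example.com/picture.png",
)
print(request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

The deployment name in the URL is the name we choose when creating the deployment, not the model name itself.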

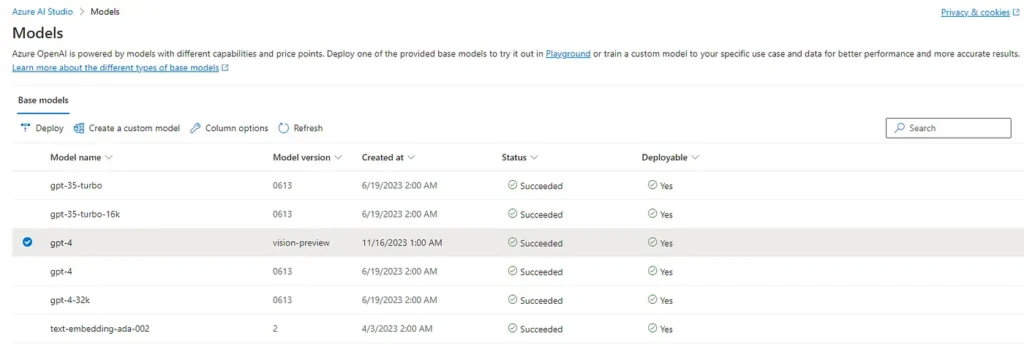

GPT-4 Turbo with Vision model availability in Azure AI Studio

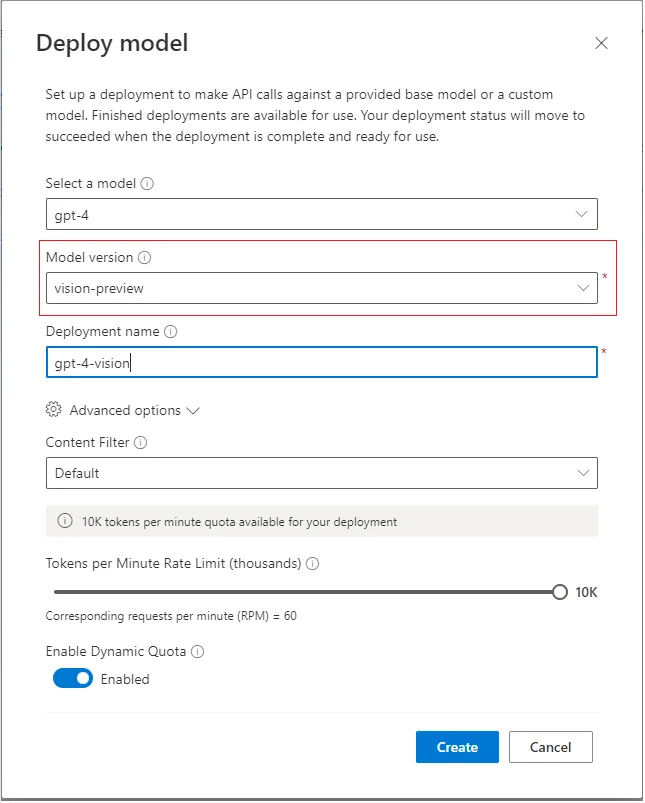

To access the vision version of the model, we need to create a separate GPT-4 deployment with the ‘vision-preview’ model version.

Deploying GPT-4 model with ‘vision-preview’ version

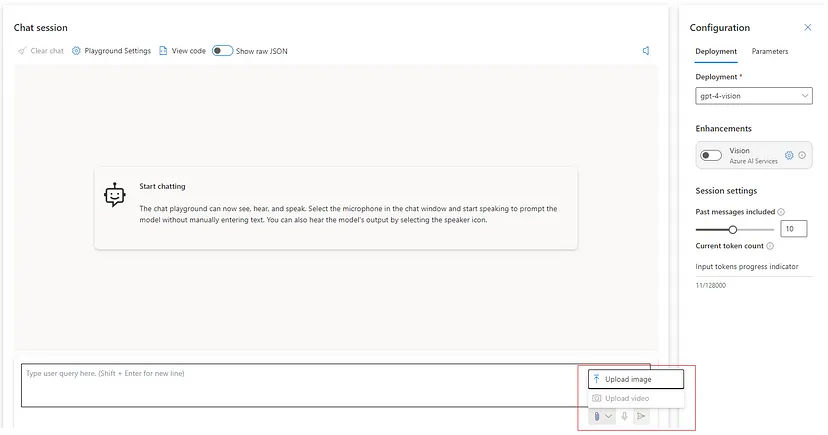

After the deployment is successfully published, we can see and select the model in the Configuration panel of the Chat Playground section. The usual text-based interface is now enriched with an attachment button where we can upload image or video content.

Azure AI Studio Chat Playground – attachment button for uploading image or video content

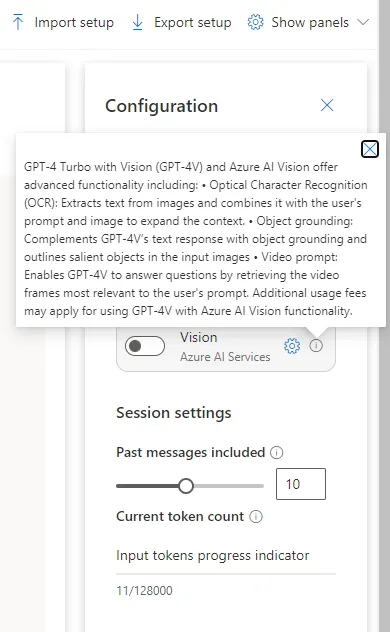

Combined with an Azure AI Services configuration, the model gains an array of advanced functionalities, such as optical character recognition (OCR), object grounding, and video prompting.

* Note: As stated in the popup, we will need to create a specific Computer Vision resource to enable this enhancement functionality.
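Outside the Playground, the same enhancements are switched on in the request body. The sketch below shows roughly how such a body might be assembled. The field names (`enhancements`, `dataSources`, `AzureComputerVision`) reflect the preview REST API as we understand it and are assumptions to verify against the current documentation; in the preview, enhanced requests also went to a separate `extensions/chat/completions` route. The Computer Vision endpoint and key are placeholders, and `build_enhanced_body` is a hypothetical helper name.

```python
import json


def build_enhanced_body(prompt, image_url, cv_endpoint, cv_key, max_tokens=300):
    """Assemble a request body with OCR and grounding enabled (assumed preview schema)."""
    return {
        # Assumed field names from the preview API; they may change.
        "enhancements": {
            "ocr": {"enabled": True},
            "grounding": {"enabled": True},
        },
        # The enhancements rely on a separate Computer Vision resource.
        "dataSources": [
            {
                "type": "AzureComputerVision",
                "parameters": {"endpoint": cv_endpoint, "key": cv_key},
            }
        ],
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        "max_tokens": max_tokens,
    }


# Placeholder values, not real resources or credentials.
body = build_enhanced_body(
    prompt="Read the text in this image.",
    image_url="https://example.com/receipt.png",
    cv_endpoint="https://my-vision-resource.cognitiveservices.azure.com",
    cv_key="<your-computer-vision-key>",
)
print(json.dumps(body, indent=2))
```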

Enabling and configuring Azure AI Services for enhanced vision capabilities

GPT-4V Use Cases

Let's upload some images and get a first impression of the model's capabilities through some basic prompting.

1. Visual Data Analysis

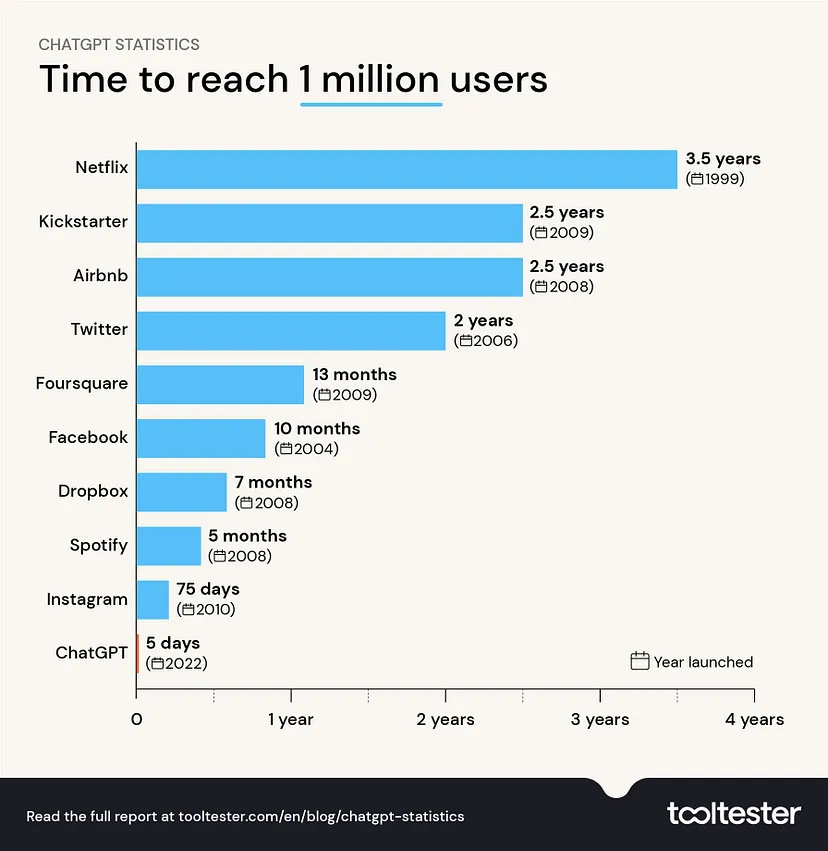

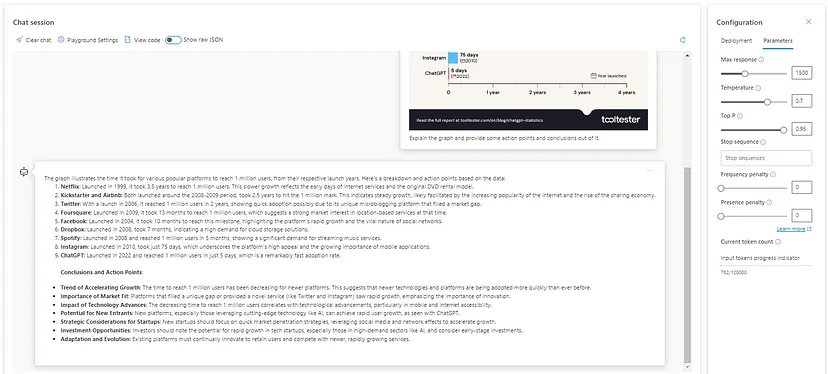

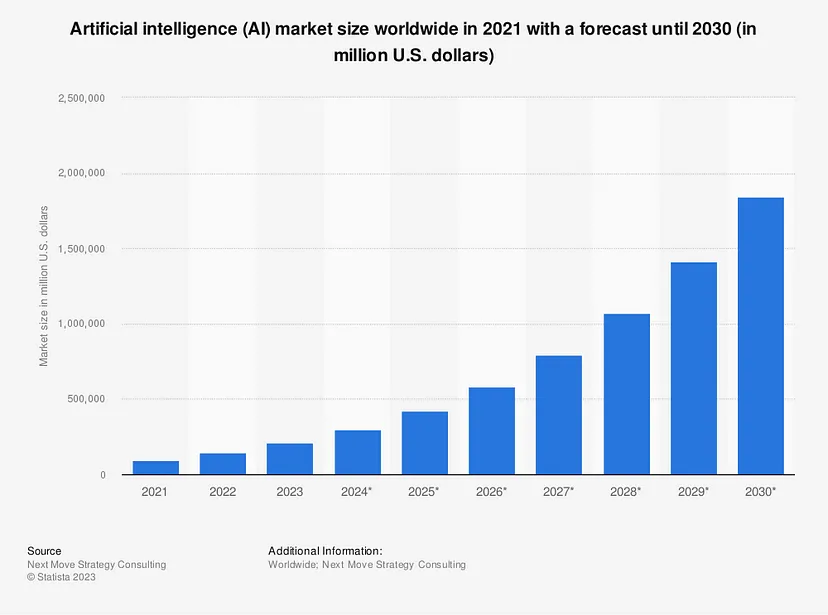

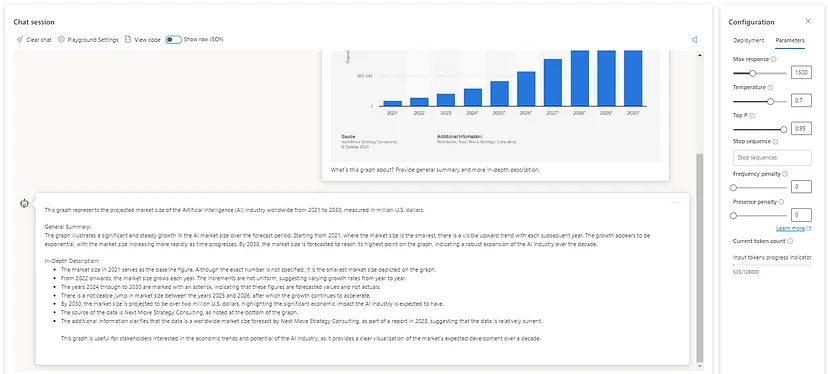

Let's take some publicly available charts covering two different statistics: the time it took various applications to reach 1 million users, and the worldwide artificial intelligence market size in 2021 with a forecast until 2030.

Time to reach 1 million users; Source: https://www.tooltester.com/en/blog/chatgpt-statistics/

Visual Data Analysis – analyzing the ‘Time to reach 1 million users’ statistics

Artificial intelligence (AI) market size worldwide in 2021 with a forecast until 2030 (in million U.S. dollars); Source: https://www.statista.com/statistics/1365145/artificial-intelligence-market-size/

Visual Data Analysis – analyzing the ‘Artificial intelligence market size worldwide in 2021 with a forecast until 2030’ statistics
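When a chart lives on disk rather than behind a public URL, one common option is to embed it in the prompt as a base64 data URL. The following is a minimal sketch of that idea; the helper names `image_to_data_url` and `build_chart_message` are hypothetical, and the file name in the usage comment is a placeholder.

```python
import base64
import mimetypes
from pathlib import Path


def image_to_data_url(path):
    """Encode a local image file as a base64 data URL."""
    mime_type, _ = mimetypes.guess_type(str(path))
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"not a recognized image file: {path}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_chart_message(path, question):
    """Pair a question with a local chart image in a single user message."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": image_to_data_url(path)}},
        ],
    }


# Usage (placeholder file name):
# message = build_chart_message("ai-market-size.png", "Which year shows the largest forecast value?")
```

Base64 encoding increases the payload size by roughly a third, so large charts may be worth downscaling before they are embedded.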

2. Object Detection

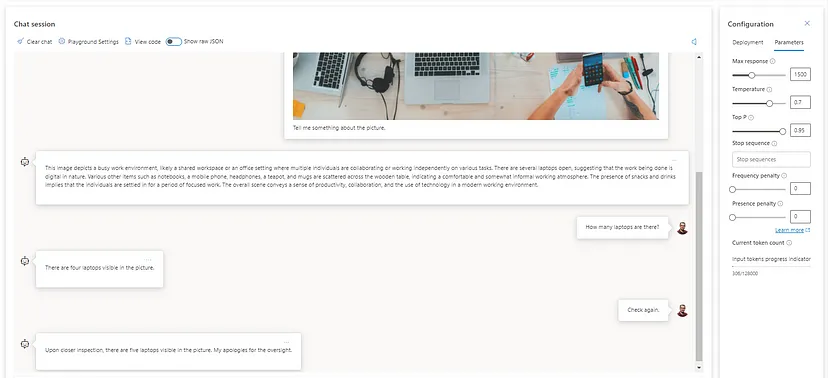

Let's go ahead and challenge the model to explain the following image and even try to count some of the objects in it.

Photo by Marvin Meyer on Unsplash

Object Detection – Identifying the general image context and counting specific objects

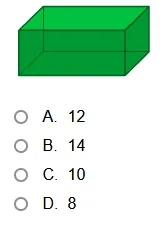

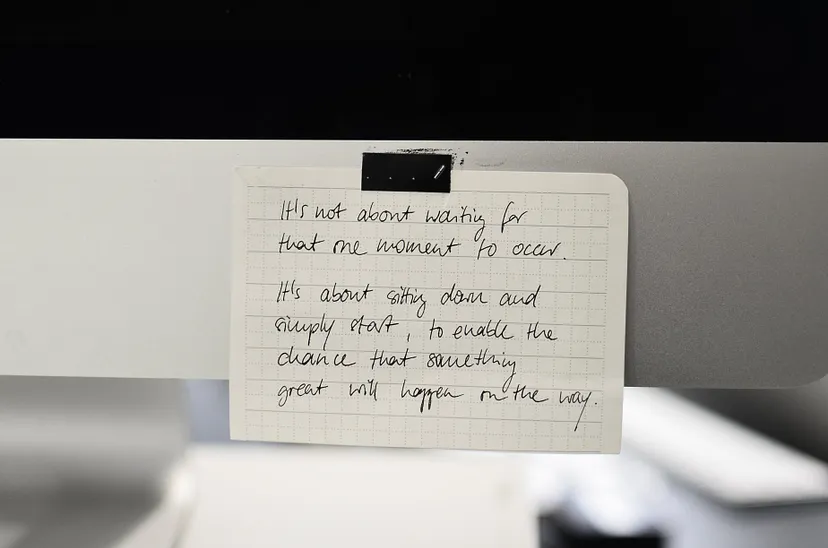

Another interesting case is to prompt the model with a math (geometry) multiple-choice question and try to get the correct answer along with an explanation.

Turtle Diary Geometry Quiz; Source: https://www.turtlediary.com/

Image by Author – Object detection – Solving geometry multi-choice quiz question

3. Text deciphering

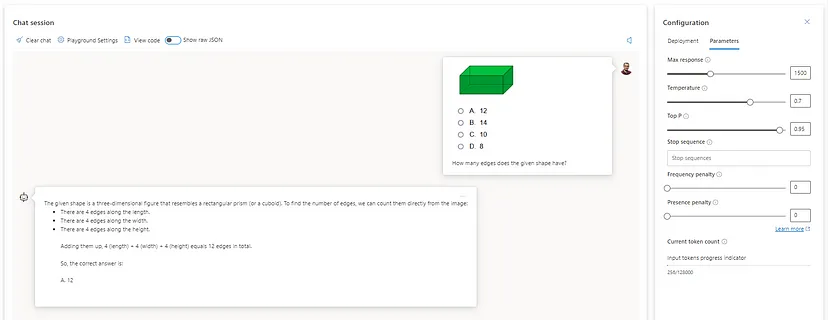

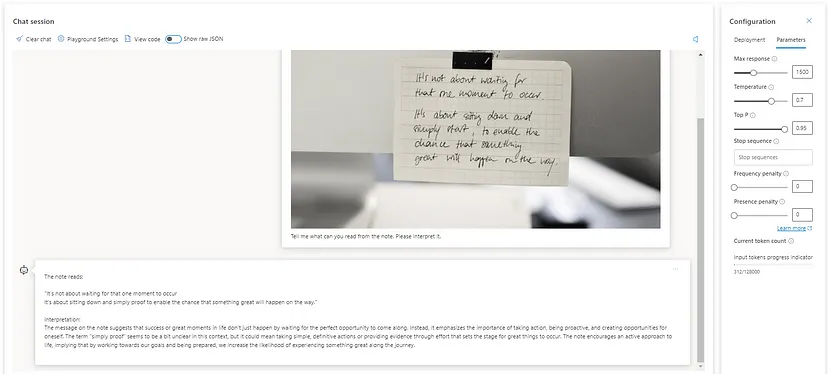

How about understanding and interpreting handwritten notes or documents? Let's evaluate the model's potential for recognizing and explaining the following content.

Photo by Skylar Kang from Pexels

Text deciphering – understanding and interpreting hand-written notes
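If the same prompts are sent through the Chat Completion API instead of the Playground, the model's reply arrives as JSON rather than as chat text. Below is a small sketch of pulling the answer out of such a response. The `choices[0].message.content` layout is the standard Chat Completion response shape; the sample dictionary is a hand-written stand-in used only to exercise the function, not real model output, and `extract_answer` is a hypothetical helper name.

```python
def extract_answer(response):
    """Return the text of the first choice in a Chat Completion response."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["message"]["content"]


# Hand-written stand-in for a response body (not real model output).
sample_response = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "<model answer goes here>"},
        }
    ]
}
print(extract_answer(sample_response))
```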

Wrap Up Notes

GPT-4 Turbo with Vision is built on top of the GPT-4 Turbo model. It falls under the umbrella of large multimodal models, which can analyze and reason over graphical content and provide textual responses related to it.

The spectrum of possibilities for applying this revolutionary approach is vast. It covers areas such as software engineering and development, data analysis and engineering, content generation and interpretation, and scientific research, all of which span many different industries and practices.

It is also worth mentioning that GPT-4V is generally restricted from handling risky or potentially offensive tasks and will refuse to process or generate insights about anything that falls outside the Responsible AI and Privacy policies. Microsoft is committed to advancing AI in line with responsible AI principles. In addition, Azure OpenAI offers these cutting-edge AI capabilities within enterprise-grade security, which is intended to protect data privacy and contextual data integrity.