In cloud infrastructure management, maintaining control and consistency across deployments is critical. Terraform, an Infrastructure as Code (IaC) tool, has become essential for automating and managing cloud infrastructure. One of Terraform’s most powerful features is the ability to create reusable, modular components known as modules. In this guide, we’ll look at the nuances of Terraform modules, focusing on their use in deploying a backend configuration with Azure services such as API Management, Logic Apps, and Service Bus.

Prerequisites

- A valid Azure subscription

- Azure Remote Backend for Terraform

- A code editor like VS Code

- Version control systems like Azure DevOps, GitHub, etc.

Using Modules in Terraform for Infrastructure Management

As we start creating robust infrastructure with Terraform, the complexity of our architectures will inevitably increase. As our code grows, it becomes increasingly important to maintain clarity, organization, and reusability. This is where the strategic use of modules stands out.

At the start of a Terraform project, we often create numerous resources, design elaborate architectures, and spread our code across multiple files. As the project expands, this sprawling codebase becomes harder to manage. This is precisely where modules help alleviate the strain.

Modules allow us to group related resources logically instead of lumping them together. This modular approach improves our code’s organization while simplifying maintenance tasks. Consider being able to deploy or update a set of resources with a single command, rather than having to sift through countless files.

Furthermore, in large organizations with multiple teams working on similar architectures, the advantages of modules become even more apparent. Copying and pasting code blocks may be a quick fix, but it almost always results in a tangled web of dependencies and headaches during updates. Modules provide a cleaner, more efficient solution by abstracting standard resource configurations, promoting consistency, and facilitating collaboration.

However, it is essential to note that not all resources require their own module. Modules should represent logical groups of resources that are deployed or managed together. This keeps our modules focused, reusable, and maintainable over time.
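To make the reuse argument concrete, here is a minimal sketch that calls one module twice, once per environment. It assumes the service_bus module built later in this guide; the namespace names, resource group names, and SKUs are hypothetical placeholders, and the resource groups are assumed to exist already.

```hcl
# Hypothetical: two instances of the same module, one per environment.
# Only the inputs differ; the resource definitions live in one place.
module "service_bus_dev" {
  source = "./modules/service_bus"

  service_bus_namespace_name = "sb-example-dev" # placeholder name
  location                   = "eastus"
  resource_group_name        = "rg-example-dev" # assumed to exist
  service_bus_sku            = "Basic"
}

module "service_bus_prod" {
  source = "./modules/service_bus"

  service_bus_namespace_name = "sb-example-prod" # placeholder name
  location                   = "eastus"
  resource_group_name        = "rg-example-prod" # assumed to exist
  service_bus_sku            = "Standard"
}
```

A change made inside modules/service_bus then applies to both environments on the next plan, instead of being copied by hand between two code blocks.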

In essence, modules are the foundation for structuring Terraform code, encouraging reusability, maintainability, and consistency across projects and teams. By strategically incorporating modules into our infrastructure development workflows, we can easily navigate the complexities of modern cloud environments.

Creating APIM, Logic App, and Service Bus with Terraform Modules

Let’s get practical with Terraform modules by deploying Azure API Management (APIM), Logic Apps, and Service Bus. We’ll demonstrate how to use modules to maintain clarity, organization, and reusability in infrastructure code.

Module Structure

Before we begin, let’s define the directory structure for our Terraform project:

├── modules
│   ├── apim
│   │   ├── main.tf
│   │   ├── variables.tf
│   │   └── outputs.tf
│   ├── logic_app
│   │   ├── main.tf
│   │   ├── variables.tf
│   │   └── outputs.tf
│   └── service_bus
│       ├── main.tf
│       ├── variables.tf
│       └── outputs.tf
├── main.tf
├── variables.tf
└── outputs.tf

This structure has separate modules for APIM, Logic App, and Service Bus, each containing main.tf, variables.tf, and outputs.tf files.

APIM Module

Let’s start with the APIM module. Create the following files in the modules/apim directory:

modules/apim/main.tf

# Define the Azure API Management resource

resource "azurerm_api_management" "example" {

name = var.apim_name

location = var.location

resource_group_name = var.resource_group_name

publisher_name = var.publisher_name

publisher_email = var.publisher_email

sku_name = var.apim_sku

}

modules/apim/variables.tf

variable "apim_name" {

description = "Name of the Azure API Management service"

}

variable "location" {

description = "Azure region for the API Management service"

}

variable "resource_group_name" {

description = "Name of the Azure resource group"

}

variable "publisher_name" {

description = "Name of the publisher for the API Management service"

}

variable "publisher_email" {

description = "Email of the publisher for the API Management service"

}

variable "apim_sku" {

description = "SKU for the API Management service"

}

modules/apim/outputs.tf

output "apim_id" {

description = "ID of the created API Management service"

value = azurerm_api_management.example.id

}
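The variables above are untyped, so a malformed SKU only fails once Azure rejects it. As a hedged sketch, the apim_sku variable could be given a type and a validation rule, and the module could expose the gateway URL as a second output. The regular expression reflects the tier_capacity form that sku_name expects (for example Developer_1); the output name apim_gateway_url is an assumption, not part of the original module.

```hcl
# Possible replacement for the apim_sku variable in modules/apim/variables.tf
variable "apim_sku" {
  description = "SKU for the API Management service, in the form <tier>_<capacity>"
  type        = string

  validation {
    # Accepts values such as Developer_1 or Premium_2
    condition     = can(regex("^(Consumption|Developer|Basic|Standard|Premium)_[0-9]+$", var.apim_sku))
    error_message = "The apim_sku value must look like Developer_1 or Premium_2."
  }
}

# Possible addition to modules/apim/outputs.tf (output name is hypothetical)
output "apim_gateway_url" {
  description = "Gateway URL of the created API Management service"
  value       = azurerm_api_management.example.gateway_url
}
```

With the validation in place, an invalid SKU is reported during terraform plan rather than partway through an apply.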

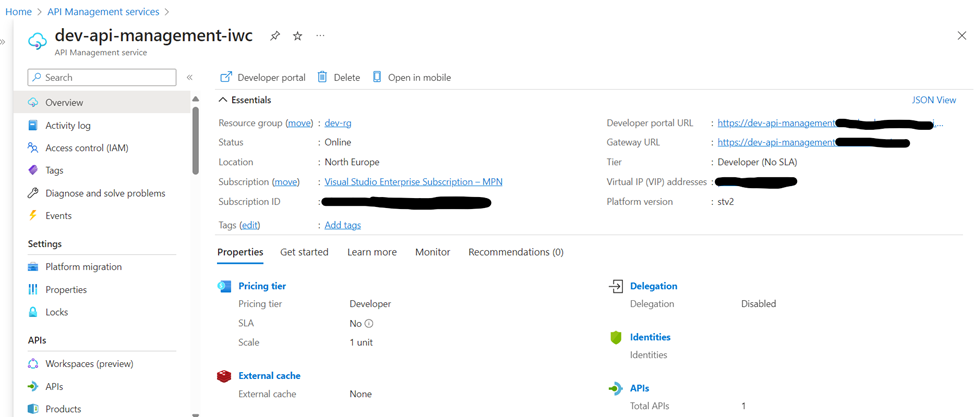

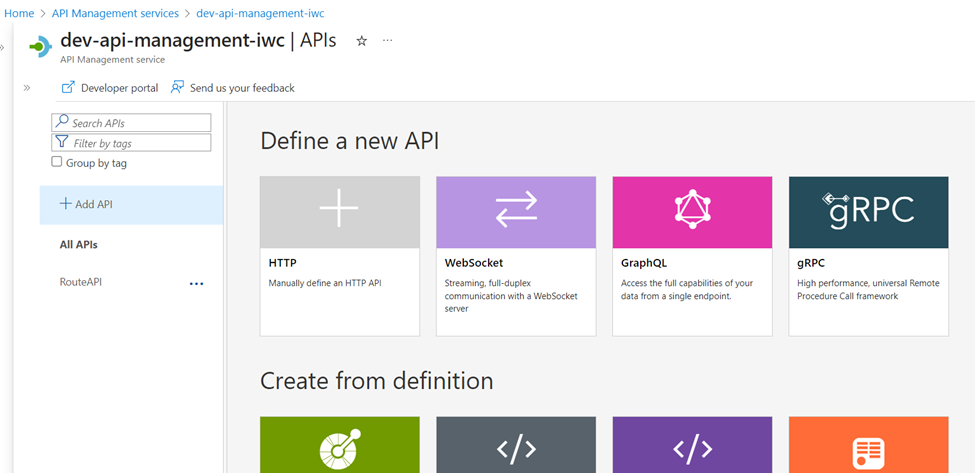

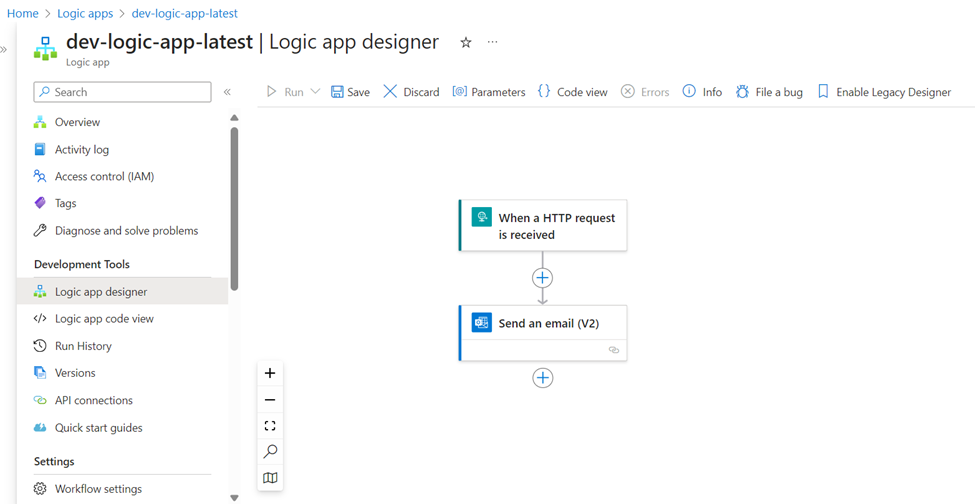

Overview of the created resource:

Logic App Module

Following that, let’s configure the Logic App module. Create similar files in the modules/logic_app directory.

modules/logic_app/main.tf

# Define the Azure Logic App resource

resource "azurerm_logic_app_workflow" "example" {

name = var.logic_app_name

location = var.location

resource_group_name = var.resource_group_name

workflow_schema = "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#"

workflow_version = "1.0.0.0"

}

# azurerm_logic_app_workflow has no "definition" argument; triggers and actions

# are declared as separate resources (for example azurerm_logic_app_trigger_http_request

# and azurerm_logic_app_action_custom) that reference this workflow's ID
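In the azurerm provider, a workflow's triggers and actions are declared as separate resources that point at the workflow's ID. The following is a minimal sketch, assuming an HTTP request trigger and a single Response action; the resource names, the request schema, and the response body are hypothetical and would be replaced by your own workflow steps.

```hcl
# Hypothetical HTTP trigger attached to the workflow defined in this module
resource "azurerm_logic_app_trigger_http_request" "example" {
  name         = "http-trigger"
  logic_app_id = azurerm_logic_app_workflow.example.id

  # JSON schema of the expected request body (illustrative only)
  schema = jsonencode({
    type = "object"
    properties = {
      message = { type = "string" }
    }
  })
}

# Hypothetical action that answers the caller with HTTP 200
resource "azurerm_logic_app_action_custom" "example" {
  name         = "respond"
  logic_app_id = azurerm_logic_app_workflow.example.id

  body = jsonencode({
    type = "Response"
    kind = "Http"
    inputs = {
      statusCode = 200
      body       = "accepted"
    }
    runAfter = {}
  })
}
```

Keeping each step as its own resource means a plan shows exactly which trigger or action changed, at the cost of more blocks for large workflows.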

modules/logic_app/variables.tf

variable "logic_app_name" {

description = "Name of the Azure Logic App"

}

variable "location" {

description = "Azure region for the Logic App"

}

variable "resource_group_name" {

description = "Name of the Azure resource group"

}

# Define additional variables as needed for your Logic App configuration

modules/logic_app/outputs.tf

output "logic_app_id" {

description = "ID of the created Logic App"

value = azurerm_logic_app_workflow.example.id

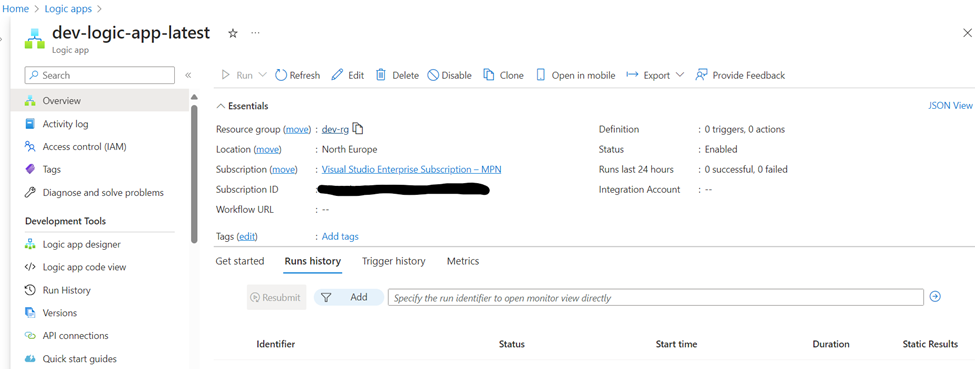

}Overview of the created resource:

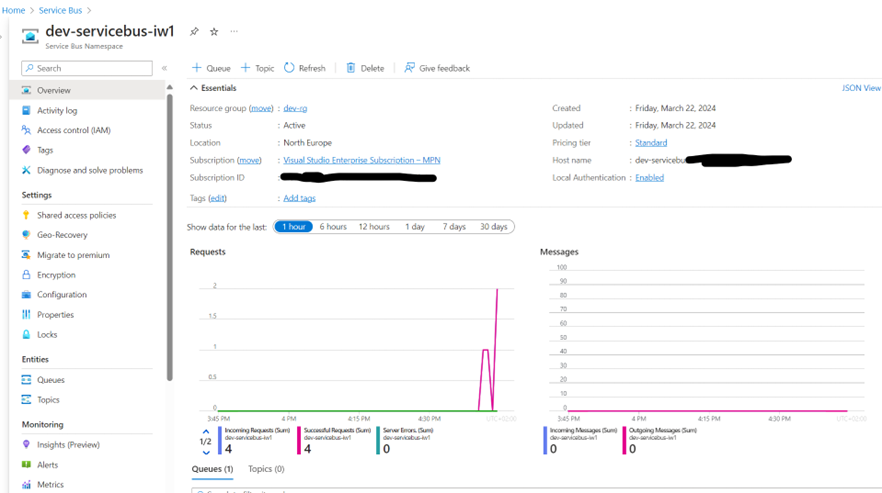

Service Bus Module

Lastly, let’s configure the Service Bus module. Create the following files in the modules/service_bus directory:

modules/service_bus/main.tf

# Define the Azure Service Bus namespace

resource "azurerm_servicebus_namespace" "example" {

name = var.service_bus_namespace_name

location = var.location

resource_group_name = var.resource_group_name

sku = var.service_bus_sku

}

# Define Service Bus topics, queues, subscriptions, etc.

# Modify as per your Service Bus requirements
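As a sketch of what those additional entities might look like, the block below adds one queue, one topic, and one subscription to the namespace. The entity names and the max_delivery_count value are hypothetical, and the namespace_id and topic_id arguments assume the 3.x provider series pinned in this guide. Note that topics and subscriptions require the Standard or Premium SKU.

```hcl
# Hypothetical queue inside the namespace defined above
resource "azurerm_servicebus_queue" "orders" {
  name         = "orders"
  namespace_id = azurerm_servicebus_namespace.example.id
}

# Hypothetical topic; requires a Standard or Premium namespace
resource "azurerm_servicebus_topic" "events" {
  name         = "events"
  namespace_id = azurerm_servicebus_namespace.example.id
}

# Hypothetical subscription on the topic
resource "azurerm_servicebus_subscription" "audit" {
  name               = "audit"
  topic_id           = azurerm_servicebus_topic.events.id
  max_delivery_count = 10 # illustrative value
}
```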

modules/service_bus/variables.tf

variable "service_bus_namespace_name" {

description = "Name of the Azure Service Bus namespace"

}

variable "location" {

description = "Azure region for the Service Bus namespace"

}

variable "resource_group_name" {

description = "Name of the Azure resource group"

}

variable "service_bus_sku" {

description = "SKU for the Service Bus namespace"

}

# Define additional variables as needed for your Service Bus configuration

modules/service_bus/outputs.tf

output "service_bus_namespace_id" {

description = "ID of the created Service Bus namespace"

value = azurerm_servicebus_namespace.example.id

}
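Outputs declared inside a module are available to the caller as module.<module name>.<output name>. A minimal sketch of a root outputs.tf that re-exports the three IDs, assuming the modules are instantiated under the names apim, logic_app, and service_bus:

```hcl
# Root outputs.tf (sketch): surface module outputs after terraform apply
output "apim_id" {
  description = "ID of the API Management service"
  value       = module.apim.apim_id
}

output "logic_app_id" {
  description = "ID of the Logic App workflow"
  value       = module.logic_app.logic_app_id
}

output "service_bus_namespace_id" {
  description = "ID of the Service Bus namespace"
  value       = module.service_bus.service_bus_namespace_id
}
```

Running terraform output after an apply then prints these values without opening the Azure portal.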

Root module definition

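With this modular structure in place, our root main.tf orchestrates the deployment of the Azure resources. Note that it also calls a resource-group module, which is not listed in the directory tree above; its outputs supply the location and resource group name to the other modules: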

terraform {

required_providers {

azurerm = {

source = "hashicorp/azurerm"

version = "~> 3.0"

}

}

}

provider "azurerm" {

features {}

}

module "resource_group" {

source = "./modules/resource-group"

resource_group = var.resource_group

location = var.location

tags = {

environment = var.environment

}

}

module "apim" {

source = "./modules/apim"

apim_name = var.apim_name

location = module.resource_group.location

resource_group_name = module.resource_group.resource_group

publisher_name = var.publisher_name

publisher_email = var.publisher_email

apim_sku = var.apim_sku

}

module "logic_app" {

source = "./modules/logic_app"

logic_app_name = var.logic_app_name

location = module.resource_group.location

resource_group_name = module.resource_group.resource_group

}

module "service_bus" {

source = "./modules/service_bus"

service_bus_namespace_name = var.service_bus_namespace_name

location = module.resource_group.location

resource_group_name = module.resource_group.resource_group

service_bus_sku = var.service_bus_sku

}
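The root module references ./modules/resource-group, whose files are not shown in this guide. The following is a hypothetical sketch of what that module would need to contain for the references above to resolve: inputs named resource_group, location, and tags, and outputs named resource_group and location. The resource label "this" and the variable types are assumptions.

```hcl
# modules/resource-group (hypothetical sketch; main.tf, variables.tf, and
# outputs.tf are shown together for brevity)

resource "azurerm_resource_group" "this" {
  name     = var.resource_group
  location = var.location
  tags     = var.tags
}

variable "resource_group" {
  description = "Name of the Azure resource group"
  type        = string
}

variable "location" {
  description = "Azure region for the resource group"
  type        = string
}

variable "tags" {
  description = "Tags applied to the resource group"
  type        = map(string)
  default     = {}
}

# Output names match the references in the root main.tf
output "resource_group" {
  value = azurerm_resource_group.this.name
}

output "location" {
  value = azurerm_resource_group.this.location
}
```

Because the other modules read these outputs rather than the raw variables, Terraform creates the resource group before any resource that is placed in it.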

Setting Up the Terraform Backend Configuration

In the context of Terraform infrastructure management, backend configuration is critical. Essentially, the backend determines how Terraform manages its state, which in turn dictates the actions that will be performed on resources during execution, such as creation, modification, or deletion.

Terraform defaults to a local backend, which works well for individual development. In professional environments where several people work on the same infrastructure, storing the state remotely is essential for smooth collaboration and consistency across deployments.

Azure Storage is an excellent choice for storing the Terraform state due to its dependability and accessibility. Configuring the backend consists of two fundamental steps:

Provisioning Azure Storage Resources

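To kickstart this process, we’ll deploy the necessary Azure resources with the Azure CLI. The commands below use Windows Command Prompt syntax and are meant to be saved and run as a batch file (for example, a .cmd file); the %%i loop variable would be written %i if typed directly at the prompt: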

:: Azure Login

az login

:: Check if you are logged in

az account show

:: Set variables

set RESOURCE_GROUP_NAME=tf-terraform-state

set LOCATION=eastus

set STORAGE_ACCOUNT_NAME=terraformstate%random%

set CONTAINER_NAME=tfstate

:: Create resource group

az group create --name %RESOURCE_GROUP_NAME% --location %LOCATION%

:: Create storage account

az storage account create --resource-group %RESOURCE_GROUP_NAME% --name %STORAGE_ACCOUNT_NAME% --sku Standard_LRS --encryption-services blob

:: Retrieve storage account key

for /f "delims=" %%i in ('az storage account keys list --resource-group %RESOURCE_GROUP_NAME% --account-name %STORAGE_ACCOUNT_NAME% --query [0].value -o tsv') do set ACCOUNT_KEY=%%i

:: Create blob container

az storage container create --name %CONTAINER_NAME% --account-name %STORAGE_ACCOUNT_NAME% --account-key %ACCOUNT_KEY%

:: Display information

@echo Storage Account Name: %STORAGE_ACCOUNT_NAME%

@echo Container Name: %CONTAINER_NAME%

@echo Access Key: %ACCOUNT_KEY%

This creates a resource group, a storage account, and a blob container under the Azure Storage account. We’re also putting these resources in their own resource group to enforce better organization and management practices, ensuring that the Terraform state file is isolated from the infrastructure components. This separation reduces the likelihood of unintended dependencies while simplifying access control and monitoring procedures.
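For teams that prefer to keep even the state storage in code, the same three resources could be described in a small, separate Terraform configuration that is applied once with local state. This is an alternative sketch rather than the approach used in this guide; the variable state_storage_account_name is hypothetical, and the storage_account_name argument on the container assumes the azurerm 3.x series.

```hcl
# Hypothetical bootstrap configuration, applied once with local state

variable "state_storage_account_name" {
  description = "Globally unique storage account name (3-24 lowercase letters and digits)"
  type        = string
}

resource "azurerm_resource_group" "state" {
  name     = "tf-terraform-state"
  location = "eastus"
}

resource "azurerm_storage_account" "state" {
  name                     = var.state_storage_account_name
  resource_group_name      = azurerm_resource_group.state.name
  location                 = azurerm_resource_group.state.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

resource "azurerm_storage_container" "state" {
  name                  = "tfstate"
  storage_account_name  = azurerm_storage_account.state.name
  container_access_type = "private"
}
```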

Configuring Terraform Backend

With the Azure Storage resources in place, we integrate Terraform with the backend via the backend.tf file:

terraform {

backend "azurerm" {

resource_group_name = "tf-terraform-state"

storage_account_name = "terraformstate" // Replace with your storage account name

container_name = "tfstate"

key = "terraform.tfstate"

}

}

Terraform is instructed to use Azure Storage as its backend for storing state in this configuration. The provided parameters, which include resource_group_name, storage_account_name, container_name, and key, specify the location and naming conventions of the Terraform state file.
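Because the provisioning script appends a random suffix to the storage account name, hardcoding that name in backend.tf can be inconvenient. One option is a partial backend configuration: leave the environment-specific settings out of the backend block and supply them from a separate file at init time. A sketch, assuming a file named backend.hcl (the file name and the account name shown are hypothetical):

```hcl
# backend.hcl (hypothetical): values omitted from the backend "azurerm" block
resource_group_name  = "tf-terraform-state"
storage_account_name = "terraformstate12345" # replace with the name printed by the script
container_name       = "tfstate"
key                  = "terraform.tfstate"
```

The file is then passed with terraform init -backend-config=backend.hcl, which lets each environment keep its own settings file without editing the backend block itself.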

Initializing Terraform

Once the backend has been configured, run the following command to initialize Terraform:

terraform init

This command sets up Terraform and configures it to use the specified backend. Upon execution, Terraform initializes any modules and downloads any plugins required for the current configuration.

Implementing our Infrastructure

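To create our resources, we run the commands below; after terraform apply completes, the resources are visible in the Azure portal. The output that follows is abbreviated and illustrative: in a real run, resource addresses include the module path (for example, module.apim.azurerm_api_management.example), and the exact layout depends on the Terraform version.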

terraform init

terraform plan

terraform apply

Terraform will perform the following actions:

# Module: modules/apim

+ azurerm_api_management.apim

id: <computed>

name: "example-apim"

location: "eastus"

...

# Module: modules/logic_app

+ azurerm_logic_app_workflow.logic_app

id: <computed>

name: "example-logic-app"

location: "eastus"

...

# Module: modules/service_bus

+ azurerm_servicebus_namespace.service_bus

id: <computed>

name: "example-service-bus"

location: "eastus"

...

azurerm_api_management.apim: Creating...

azurerm_logic_app_workflow.logic_app: Creating...

azurerm_servicebus_namespace.service_bus: Creating...

azurerm_servicebus_namespace.service_bus: Creation complete after 30s (ID: /subscriptions/.../resourceGroups/.../providers/Microsoft.ServiceBus/namespaces/example-service-bus)

azurerm_logic_app_workflow.logic_app: Creation complete after 45s (ID: /subscriptions/.../resourceGroups/.../providers/Microsoft.Logic/workflows/example-logic-app)

azurerm_api_management.apim: Creation complete after 2m35s (ID: /subscriptions/.../resourceGroups/.../providers/Microsoft.ApiManagement/service/example-apim)

Apply complete! Resources: 3 added, 0 changed, 0 destroyed.
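The names that appear in this output come from the root input variables, whose values are not shown in this guide. A hypothetical terraform.tfvars that would feed the root module might look like the following; every value is a placeholder, and the API Management and Service Bus names must be globally unique in practice.

```hcl
# terraform.tfvars (hypothetical values for the root variables)
resource_group             = "rg-example"
location                   = "eastus"
environment                = "dev"
apim_name                  = "example-apim"
publisher_name             = "Example Publisher"
publisher_email            = "admin@example.com"
apim_sku                   = "Developer_1"
logic_app_name             = "example-logic-app"
service_bus_namespace_name = "example-service-bus"
service_bus_sku            = "Standard"
```

Terraform loads a file with this name automatically, so terraform plan and terraform apply pick up the values without extra flags.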

Destroying the Infrastructure

We use the following command to destroy the infrastructure we created and clean up the resources:

terraform destroy

Terraform will perform the following actions:

# Module: modules/apim

- azurerm_api_management.apim

id: /subscriptions/.../resourceGroups/.../providers/Microsoft.ApiManagement/service/example-apim

name: "example-apim"

location: "eastus"

...

# Module: modules/logic_app

- azurerm_logic_app_workflow.logic_app

id: /subscriptions/.../resourceGroups/.../providers/Microsoft.Logic/workflows/example-logic-app

name: "example-logic-app"

location: "eastus"

...

# Module: modules/service_bus

- azurerm_servicebus_namespace.service_bus

id: /subscriptions/.../resourceGroups/.../providers/Microsoft.ServiceBus/namespaces/example-service-bus

name: "example-service-bus"

location: "eastus"

...

azurerm_api_management.apim: Destroying...

azurerm_logic_app_workflow.logic_app: Destroying...

azurerm_servicebus_namespace.service_bus: Destroying...

azurerm_logic_app_workflow.logic_app: Destruction complete after 10s

azurerm_servicebus_namespace.service_bus: Destruction complete after 15s

azurerm_api_management.apim: Destruction complete after 2m45s

Destroy complete! Resources: 3 destroyed.
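Since terraform destroy removes everything in the state, it can be worth guarding resources that hold data or in-flight messages. A minimal sketch, assuming we want to protect the Service Bus namespace, adds a lifecycle block to the resource in modules/service_bus/main.tf:

```hcl
resource "azurerm_servicebus_namespace" "example" {
  name                = var.service_bus_namespace_name
  location            = var.location
  resource_group_name = var.resource_group_name
  sku                 = var.service_bus_sku

  lifecycle {
    # Any plan that would destroy this resource fails with an error
    prevent_destroy = true
  }
}
```

With this setting, a destroy stops with an error until the flag is removed, which is a deliberate trade-off between safety and convenience in short-lived test environments.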

Monitoring Infrastructure using Azure Log Analytics

Ensuring the health and performance of our Azure infrastructure is critical. We use Azure Log Analytics to transform data from various sources into actionable insights. Think of it as our cloud detective, piecing together clues from applications, servers, and virtual machines to identify performance patterns, security issues, and overall system health.

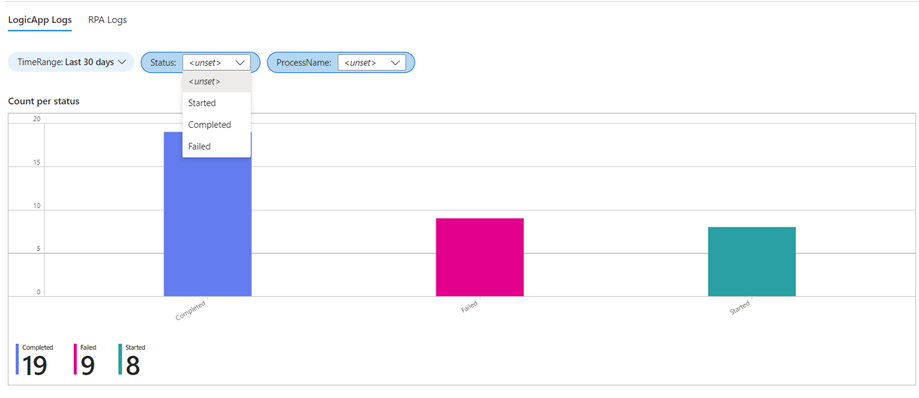

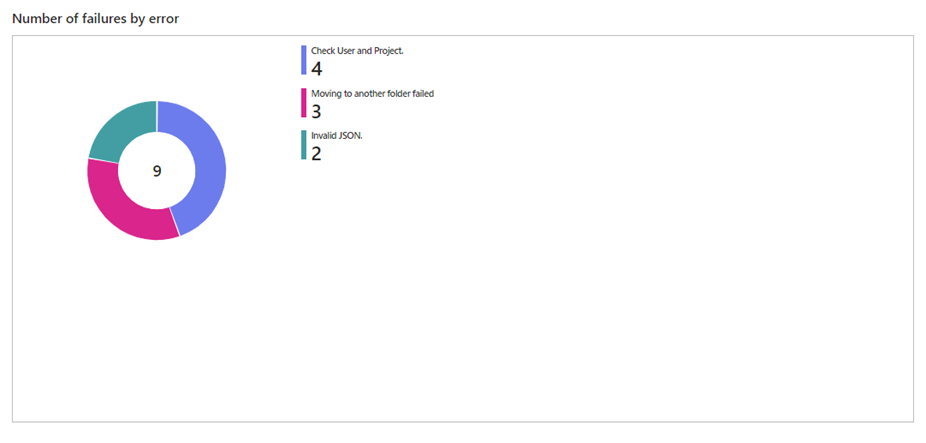

Log Analytics can proactively detect anomalies, track trends, and predict potential issues before they affect our operations. Let’s look at a few examples of how we monitor our newly created infrastructure with Log Analytics dashboards.

- Execution Monitor: With a simple yet powerful dashboard, we can visualize the number of executions within a selected time frame, sorted by status. This allows us to quickly identify patterns and spot any anomalies in the execution process. We can filter by status and process name, providing granular control over our monitoring.

- Failed Executions: This dashboard focuses on failed executions, categorizing them according to the reasons for failure. This insight allows us to quickly address issues and optimize our processes for improved performance.

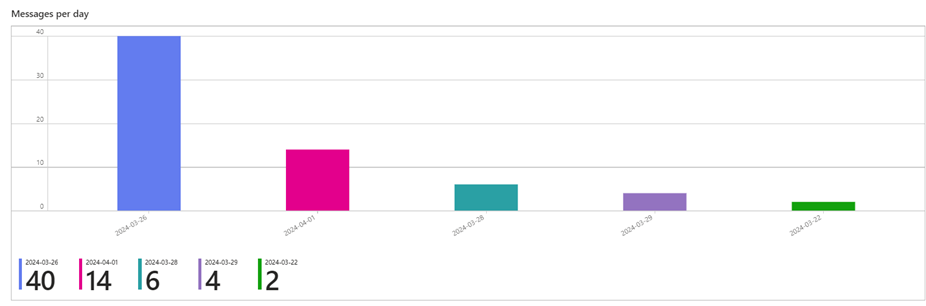

- Message Count Monitor: Understanding system activity and workload requires keeping track of the number of messages sent on a specific date. This dashboard accurately represents message volume over time, which helps with capacity planning and resource optimization.

These dashboards are an essential resource for monitoring and managing our Azure infrastructure. By leveraging Log Analytics capabilities, we can ensure our cloud environment’s dependability, security, and efficiency.
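The dashboards depend on logs actually reaching a Log Analytics workspace, which this guide does not show. As a hedged sketch, the root module could create a workspace and route the Logic App's runtime logs to it with a diagnostic setting. The resource names, SKU, and retention period are assumptions, and the enabled_log block requires a recent release of the azurerm 3.x series (older releases used a log block instead).

```hcl
# Hypothetical workspace placed in the same resource group as the other services
resource "azurerm_log_analytics_workspace" "example" {
  name                = "law-example"
  location            = module.resource_group.location
  resource_group_name = module.resource_group.resource_group
  sku                 = "PerGB2018"
  retention_in_days   = 30
}

# Send Logic App runtime logs and metrics to the workspace
resource "azurerm_monitor_diagnostic_setting" "logic_app" {
  name                       = "logic-app-to-law"
  target_resource_id         = module.logic_app.logic_app_id
  log_analytics_workspace_id = azurerm_log_analytics_workspace.example.id

  enabled_log {
    category = "WorkflowRuntime"
  }

  metric {
    category = "AllMetrics"
  }
}
```

Similar diagnostic settings could target the API Management and Service Bus IDs exposed by the other modules, with log categories appropriate to each service.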

Summary

In this guide, we used Terraform modules to deploy Azure services, which improved clarity, organization, and reusability. The modular approach promotes collaboration, simplifies maintenance, and encourages code reuse. By configuring a remote backend, we keep the Terraform state file in shared, isolated storage, which makes team collaboration possible. We also explored how Log Analytics helps monitor these resources. Overall, Terraform’s modular approach streamlines infrastructure management, encourages best practices, and lets teams deliver changes more efficiently.