The conventional view of Quality Assurance in energy software is straightforward: QA teams find bugs before release, validate that code meets specifications, and ensure systems don’t crash. It’s a technical checkpoint – necessary, but fundamentally a support function. Most organizations treat QA for European electricity market systems the same way they’d treat QA for any enterprise software.

This view is dangerously outdated. Over the past decade, we’ve watched Europe’s energy infrastructure undergo a transformation that has fundamentally changed what ‘failure’ means.

A software defect in a market-clearing algorithm doesn’t just cause inconvenience – it can trigger price spikes across 27 countries, strand renewable generation worth millions, or create cascading failures that culminate in blackouts affecting tens of millions of people. The April 2025 Iberian blackout, which left 55 million people without power, wasn’t caused by a storm or equipment failure – it was a system coordination problem.

The organizations that understand this have quietly redefined what QA means in this sector. They’ve moved QA from the end of the development cycle to the center of risk management. They’ve shifted from ‘finding bugs’ to ‘validating economic outcomes, regulatory compliance, and physical grid constraints simultaneously.’ This isn’t QA as most people understand it – it’s a fundamentally different discipline that we’ve come to call strategic quality assurance.

We learned this firsthand during extreme-scenario testing, when a combination of scarce bids and activation constraints produced a price spike that was technically valid but exceeded the boundaries set by the predefined scarcity pricing logic. The system behaved according to code, yet the result would have triggered market monitoring investigations and possible intervention.

Why Most Organizations Still Get This Wrong

Before explaining our approach, it’s worth acknowledging why the conventional view persists – because it’s not irrational.

The argument for treating energy market QA like standard enterprise software QA goes something like this: ‘These are mature systems with established protocols. The algorithms are mathematically proven. TSOs and NEMOs have been operating for years without catastrophic failures. Adding expensive domain specialists to QA teams is overkill when standard testing practices have worked fine.’

There’s truth here. European electricity markets have achieved remarkable reliability. The EUPHEMIA algorithm clears day-ahead markets worth over EUR 200 million daily across 27 countries. XBID enables continuous intraday trading across 25 markets. These systems work. Why change what’s working?

The answer lies in what’s changed around these systems – and what’s still changing.

The Four Forces Redefining Failure

The operational environment for European electricity markets has fundamentally shifted. Understanding these forces explains why traditional QA approaches are no longer sufficient.

1. Decentralization at Scale

The integration of millions of Distributed Energy Resources – solar installations, battery systems, electric vehicles, Virtual Power Plants – has exploded the number of market participants and transaction volumes. Systems designed for hundreds of large generators now handle millions of small, variable resources. Each new participant is a new potential source of data anomalies, timing conflicts, and edge cases that traditional testing never anticipated.

In earlier system versions, procurement processes ran periodically with relatively stable workloads. Today, clients are moving toward multiple procurement cycles per day, multiplying operational events many times over within a few years. This required us to redesign test strategies around continuous regression, parallel execution, and strict timing validation.

2. The Velocity Problem

The shift to 15-minute Market Time Units (MTUs) quadrupled the operational tempo overnight. Systems that once had an hour to process, validate, and clear auctions now have fifteen minutes. This isn’t just ‘faster’ – it’s a fundamentally different testing challenge. Latency issues that were invisible at hourly resolution become critical failures at 15-minute resolution. Race conditions that never occurred now happen daily.
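The change in tempo can be made concrete with a small timing check. This is a minimal sketch, assuming a test harness that records how long each clearing cycle took; the function names and the sample durations are hypothetical, and the budget is simply set equal to one MTU for illustration.

```python
from datetime import timedelta

MTU = timedelta(minutes=15)  # 15-minute Market Time Unit


def mtus_per_day(mtu):
    """Number of whole market time units in one day."""
    return timedelta(days=1) // mtu


def budget_overruns(cycle_durations, budget=MTU):
    """Return (index, duration) for every cycle that exceeded the budget."""
    return [(i, d) for i, d in enumerate(cycle_durations) if d > budget]


# Hypothetical measured durations of three consecutive clearing cycles.
durations = [
    timedelta(minutes=12),
    timedelta(minutes=16),
    timedelta(minutes=14, seconds=59),
]
overruns = budget_overruns(durations)
```

A cycle of 16 minutes would have gone unnoticed against an hourly budget; against a 15-minute budget it is a failed run.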

3. Volatility as the New Normal

Negative prices. Sudden bid scarcity. Ramping scenarios where generation must swing by gigawatts in minutes. These aren’t edge cases anymore – they’re Tuesday. Systems must handle extreme price events without degrading, which means QA must validate behavior across a vastly expanded range of market conditions that simply didn’t exist a decade ago.

4. Compliance as Code

The Clean Energy Package transformed principles into metrics. The requirement for TSOs to offer at least 70% of transmission capacity for cross-zonal trade isn’t a guideline – it’s an auditable KPI embedded in software. Capacity calculation tools must be validated not just for correctness, but for regulatory defensibility. A calculation that’s technically accurate but procedurally non-compliant is still a violation.
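One way to picture 'compliance as code' is a check that runs against every capacity calculation result. The sketch below is a deliberate simplification: it assumes each network element reports a maximum admissible flow and a margin offered for cross-zonal trade, and it treats the 70% threshold as a flat minimum. The field names, the element names, and the absence of derogations or action plans are assumptions for illustration, not any TSO's actual data model.

```python
from dataclasses import dataclass

MIN_SHARE = 0.70  # minimum share of capacity offered for cross-zonal trade


@dataclass(frozen=True)
class ElementResult:
    element_id: str           # hypothetical network element identifier
    max_flow_mw: float        # maximum admissible flow on the element
    offered_margin_mw: float  # margin made available for cross-zonal trade


def find_violations(results, min_share=MIN_SHARE):
    """Return (element_id, share) for every element below the threshold."""
    violations = []
    for r in results:
        if r.max_flow_mw <= 0:
            raise ValueError(f"{r.element_id}: max_flow_mw must be positive")
        share = r.offered_margin_mw / r.max_flow_mw
        if share < min_share:
            violations.append((r.element_id, round(share, 4)))
    return violations


results = [
    ElementResult("line-A", 1000.0, 720.0),  # 72% offered
    ElementResult("line-B", 800.0, 520.0),   # 65% offered
]
violations = find_violations(results)
```

Returning the offending elements with their computed share, rather than a bare pass or fail, is what makes the output usable as audit evidence.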

These forces don’t just add complexity – they fundamentally redefine what failure means. A bug is no longer just a software defect. It’s a potential market-destabilizing event.

The Three Dimensions of Strategic QA

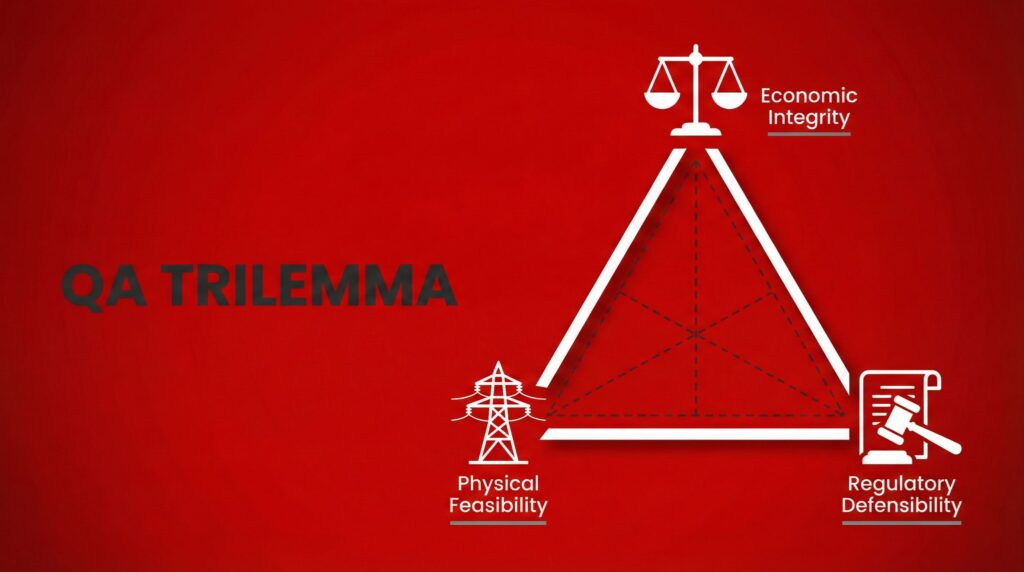

In this environment, validating that software ‘works correctly’ is necessary but nowhere near sufficient. A market result can be technically valid yet economically wrong. It can be economically optimal yet physically impossible. It can be both economically and physically correct yet non-compliant with regulation. Strategic QA must validate all three dimensions simultaneously.

The QA Trilemma: In European electricity market systems, every validation must satisfy three interdependent constraints: economic optimality, physical feasibility, and regulatory compliance. Optimizing for any two while ignoring the third creates systemic risk.

Dimension 1: Economic Integrity

EUPHEMIA and XBID aren’t just software – they’re the economic engines determining how EUR 200+ million changes hands daily. QA must verify that outcomes maximize social welfare within constraints, that congestion rent is calculated correctly, that marginal prices align with binding grid constraints, and that cross-zonal capacity is never silently over-allocated.

This requires QA engineers who understand not just software testing, but market economics. They need to recognize when a ‘passing’ test produces economically nonsensical results, such as negative-welfare outcomes that the algorithm technically permits but that should not occur in practice.
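Some of these economic checks can be written as invariants over a market result. The following is a minimal sketch for a single border, assuming a simple capacity-based model with no losses or ramping limits, where a price spread should only appear when the offered capacity is fully used. The class and field names are hypothetical, and real coupling results involve many more constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BorderResult:
    export_price: float  # EUR/MWh in the exporting zone
    import_price: float  # EUR/MWh in the importing zone
    flow_mw: float       # scheduled flow from exporting to importing zone
    capacity_mw: float   # capacity offered in that direction


def congestion_rent(r):
    """Rent per hour: scheduled flow times the price spread."""
    return r.flow_mw * (r.import_price - r.export_price)


def economic_findings(r, tol=1e-6):
    """List the economic invariants that this border result violates."""
    findings = []
    spread = r.import_price - r.export_price
    if r.flow_mw > r.capacity_mw + tol:
        findings.append("capacity over-allocated")
    if spread < -tol and r.flow_mw > tol:
        findings.append("flow runs from high price to low price")
    if abs(spread) > tol and r.flow_mw < r.capacity_mw - tol:
        findings.append("price spread without binding capacity")
    return findings


congested = BorderResult(40.0, 55.0, flow_mw=1000.0, capacity_mw=1000.0)
suspicious = BorderResult(40.0, 55.0, flow_mw=600.0, capacity_mw=1000.0)
```

The second result would pass a purely functional test, since every field is well-formed, but the invariant flags it because spare capacity and a price spread should not coexist under these assumptions.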

We once caught a scenario where equal-priced bids were sorted using a secondary technical parameter that wasn’t aligned with market priority rules. The system produced a valid activation list, but it would have changed which providers were dispatched, affecting revenues and perceived fairness. Standard functional testing wouldn’t have flagged it.
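The kind of check that catches this can be sketched as follows. The sketch assumes, purely for illustration, that the market rule breaks price ties by earliest submission time; the real priority rule depends on the platform's terms, and the field names are hypothetical. The point is that the test compares the activation order against an independently stated rule instead of only checking that the list is valid.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bid:
    bid_id: str        # technical identifier, carries no market meaning
    price: float       # EUR/MWh
    submitted_at: int  # epoch seconds; assumed tie-break criterion


def merit_order_by_rule(bids):
    """Expected order: cheapest first, ties broken by earliest submission."""
    return sorted(bids, key=lambda b: (b.price, b.submitted_at))


def merit_order_under_test(bids):
    """Stand-in for the defect: ties broken by a technical identifier."""
    return sorted(bids, key=lambda b: (b.price, b.bid_id))


bids = [
    Bid("A-001", 50.0, submitted_at=1_700_000_300),
    Bid("B-002", 50.0, submitted_at=1_700_000_100),
    Bid("C-003", 45.0, submitted_at=1_700_000_200),
]

expected = [b.bid_id for b in merit_order_by_rule(bids)]
actual = [b.bid_id for b in merit_order_under_test(bids)]
order_matches_rule = expected == actual
```

Both orderings are sorted by price, so a test that only asserts ascending prices passes for either. Only the comparison against the stated priority rule exposes the difference between the two equal-priced bids.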

Dimension 2: Physical Feasibility

The shift from Net Transmission Capacity (NTC) to Flow-Based Market Coupling added an order of magnitude to validation complexity. Flow-Based models consider power flow interactions across multiple grid elements simultaneously. A trade that looks correct in price terms might violate physical constraints on a transformer three countries away.
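A feasibility check of this kind can be sketched with power transfer distribution factors (PTDFs), which map zonal net positions to flows on individual grid elements. The sketch assumes a linear model in which the flow on an element is the PTDF-weighted sum of net positions and must not exceed the element's remaining available margin. The zones, elements, and numbers are invented for illustration, and only one flow direction per element is checked.

```python
def element_flows(ptdf, net_positions):
    """Flow per grid element: sum of PTDF factor times zonal net position."""
    return {
        element: sum(factors[zone] * net_positions[zone] for zone in net_positions)
        for element, factors in ptdf.items()
    }


def overloaded_elements(ptdf, ram_mw, net_positions):
    """Return the elements whose flow exceeds the remaining available margin."""
    flows = element_flows(ptdf, net_positions)
    return {e: f for e, f in flows.items() if f > ram_mw[e]}


# Hypothetical three-zone example; net positions sum to zero.
ptdf = {
    "line-1": {"A": 0.5, "B": -0.25, "C": 0.0},
    "transformer-2": {"A": 0.25, "B": 0.5, "C": -0.25},
}
ram_mw = {"line-1": 200.0, "transformer-2": 100.0}
net_positions = {"A": 200.0, "B": 200.0, "C": -400.0}

overloads = overloaded_elements(ptdf, ram_mw, net_positions)
```

In this example the exports from A and B to C look balanced at zone level, yet the resulting 250 MW on the transformer exceeds its 100 MW margin. A price-only validation would not see it.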

Invisible Risk: The most dangerous defects in electricity market systems are those that appear correct on the surface – trades that clear, prices that seem reasonable – while silently violating physical grid constraints. These defects pass standard testing because standard testing doesn’t know to look for them.

Detecting invisible risk requires domain expertise that most QA organizations don’t have. You need engineers who understand power flow physics, not just test case execution.

Dimension 3: Regulatory Defensibility

Unlike most software, electricity market systems operate under prescriptive regulatory frameworks that specify exactly how systems must behave under specific conditions. Failure scenarios aren’t edge cases – they’re first-class requirements defined by CACM Regulation, network codes, and national implementations.

QA must validate not just that systems handle failures, but that they handle them exactly as prescribed. The fallback procedures, the timeout behaviors, the capacity reporting formats – all must be precisely compliant. A system that gracefully recovers from failure but does so in a non-compliant way hasn’t passed testing.

When stricter result publication timing rules were introduced, we had a short implementation window to validate performance, sequencing, and fallback behaviors across multiple production-like environments. The time-synchronized test orchestration approach we developed for that program became our template for validating deadline-driven regulatory changes.

The Seams Where Systems Break

From a systemic risk perspective, the most dangerous vulnerabilities aren’t within individual platforms – they’re at the boundaries between them. European electricity markets are a network of interconnected systems operated by TSOs, NEMOs, DSOs, aggregators, and thousands of market participants. Each boundary is a potential failure point.

Interoperability testing has evolved far beyond validating that APIs return correct responses. The real challenge is achieving what we call ‘system alignment under real-time pressure’ – ensuring all parties interpret the same data the same way, at the same time, under stress conditions.

During interoperability testing between central and participant systems, we identified a retry-mechanism mismatch: one side automatically resent messages after a timeout, while the receiving system interpreted duplicates as new business events. Nothing was technically ‘wrong’ at the API level, but under load this would have generated duplicate transactions. This taught us to always test failure and retry behavior jointly across systems, not in isolation.
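A joint test of retry behavior can be sketched as below. It assumes, for illustration, that every message carries a stable identifier that the sender reuses on each resend, and that the receiver deduplicates on that identifier; the class, function, and message names are hypothetical and do not describe either system involved.

```python
class Receiver:
    """Records business events, treating a repeated message ID as a resend."""

    def __init__(self):
        self._seen_ids = set()
        self.events = []

    def receive(self, message_id, payload):
        if message_id in self._seen_ids:
            return "duplicate-ignored"
        self._seen_ids.add(message_id)
        self.events.append(payload)
        return "accepted"


def send_with_retry(receiver, message_id, payload, ack_lost_times):
    """Simulate a sender that resends each time the acknowledgement is lost."""
    outcomes = []
    for _ in range(ack_lost_times + 1):
        outcomes.append(receiver.receive(message_id, payload))
    return outcomes


receiver = Receiver()
outcomes = send_with_retry(
    receiver, "msg-42", {"bid": "B-002", "mw": 10}, ack_lost_times=2
)
event_count = len(receiver.events)
```

The assertion that matters is on the receiver's business state, not on the individual responses: three deliveries of the same message should leave exactly one recorded event.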

Multi-party Trial Periods and Member Testing exercises are designed to surface these integration risks before go-live. But coordinating validation across dozens of organizations, each with their own systems and timelines, requires a discipline that goes beyond traditional QA project management.

The Dividends of Strategic QA

When strategic QA works, the outcome is paradoxically invisible: an absence of incidents, headlines, and emergencies. The grid operates. Markets clear. Prices form correctly. Nothing makes the news.

Engineered Silence: The ultimate measure of QA success in critical infrastructure isn’t test coverage or defect counts – it’s the absence of operational incidents. Effective QA creates the conditions for nothing to happen.

This engineered silence enables tangible outcomes:

Market Integrity

Rigorously validated auction algorithms produce outcomes that regulators and participants can trust. This trust is the foundation of market liquidity. Without it, participants hedge excessively, spreads widen, and efficiency suffers.

Regulatory Certainty

Systematic validation creates auditable evidence that systems operate as mandated. When regulators ask ‘how do you know your capacity calculations are compliant?’, strategic QA provides the answer.

During a pre-go-live readiness review by market oversight bodies, our test documentation demonstrated that all fallback and decoupling procedures behaved exactly as prescribed. The evidence shortened the approval phase and allowed the client to enter operations on schedule instead of facing an additional review cycle.

Grid Security

Accurate validation of balancing platforms and frequency reserves directly contributes to the 50 Hz stability that keeps the lights on. The 99.9% uptime that European grids achieve isn’t an accident – it’s engineered through rigorous validation of every component in the chain.

Economic Efficiency

Well-tested balancing platforms reliably activate the most cost-effective bids from across Europe. This isn’t just operational convenience – it’s measurable welfare maximization. Poorly tested systems that can’t reliably clear optimal bids cost consumers real money.

Automation-focused validation reduced the need for manual operator correction during tight balancing windows. The improved response consistency led to measurable cost efficiency gains in high-volatility periods, estimated at €4–5 million annually.

The Foundation for Europe’s Energy Future

The European energy transition depends on software that didn’t exist a decade ago, operating at scales and speeds that would have been unimaginable at the time. Decarbonization targets require integrating millions of variable renewable resources. Decentralization means coordinating thousands of market participants in real time. Digitalization means that software failures have physical consequences measured in megawatts and millions of euros.

In this environment, QA for European electricity market systems cannot remain a technical checkpoint. It must become what it has quietly become for the organizations that take it seriously: a strategic function that validates the precarious interplay between market economics, regulatory mandates, and the immutable physics of the power grid.

Over nine years working with TSOs across Europe, we’ve developed a systemic validation methodology that treats market platforms not as isolated applications but as socio-technical systems where algorithms, regulations, and grid physics intersect. Our differentiator is combining deep failure-mode testing with regulatory traceability and operational realism. If you’re navigating similar complexity in critical infrastructure systems, the conversation is worth having.

The organizations that understand this aren’t just testing software. They’re engineering the silence that keeps the grid running.