Can you teach an LLM a new trick with just a handful of examples? It sounds like magic, right? And, in a way, it is. Few-shot prompting can improve a model’s accuracy by providing clear examples of the desired output, reducing the need for mountains of data and months of training time.

But pile on too many examples, and things start to feel like we’re back in the dial-up days of the internet. That golden age of technology.

So, what’s supposed to be light and efficient becomes cluttered and clunky. That’s why dynamic few-shot prompting is a cool new way to solve a persistent problem. It lets us keep models nimble, finding that sweet spot between learning and overload.

In this post, we’ll walk through how to make dynamic few-shot prompting work using open-source models from Hugging Face. We’ll bring this technique to life with a real-world example, showing just how effective and efficient it can be.

Ready to see it in action? Let’s get started.

What Is the Dynamic Few-Shot Prompting Technique?

Dynamic few-shot prompting is a method that improves how language models select and use examples when completing tasks. Instead of relying on the same set of examples for every situation, it adapts and picks the most relevant ones based on the specific prompt.

Key Features

- Adaptive Example Selection: The technique adjusts which examples are used based on the context of the task. This ensures the model focuses only on the examples that are most helpful for the current situation.

- Efficiency: By using fewer, highly relevant examples, dynamic few-shot prompting reduces the size of prompts. This approach saves computational resources and avoids overloading the model with unnecessary information.

- Improved Performance: Selecting context-specific examples helps the model produce more accurate and relevant responses, even when minimal data is available.

How It Works

- The system evaluates the incoming prompt, typically by embedding it, to capture what kind of task it needs to handle.

- It retrieves a small set of examples that closely match the requirements of the task.

- These examples are used to guide the model’s response, ensuring accuracy and relevance.
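
The three steps above can be sketched in a few lines of Python. This is only a minimal illustration under loud assumptions: word overlap (Jaccard similarity) stands in for a real embedding model, and the `EXAMPLES` pool and the `select_examples` helper are hypothetical names made up for this sketch.

```python
import re

# Hypothetical example pool; a real system would hold many more per task.
EXAMPLES = [
    {"input": "I was charged twice for my subscription",
     "output": "Sorry about the double charge. I have flagged it for a refund."},
    {"input": "The app crashes when I open settings",
     "output": "Please update to the latest version and restart the app."},
    {"input": "Where is my order? It has not arrived yet",
     "output": "Let me check the shipping status of your order."},
]


def words(text):
    """Lowercase a string and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Word-overlap score in [0, 1]; a crude stand-in for embedding similarity."""
    wa, wb = words(a), words(b)
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


def select_examples(query, examples, k=2):
    """Return the k examples whose input is most similar to the query."""
    ranked = sorted(examples, key=lambda ex: jaccard(query, ex["input"]), reverse=True)
    return ranked[:k]


selected = select_examples("My order has not arrived", EXAMPLES, k=1)
print(selected[0]["input"])
```

Here the shipping example wins because it shares the most words with the query. Swapping `jaccard` for a similarity computed over real embeddings would let semantically close queries match even when they share few words.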

Why It Matters

Dynamic few-shot prompting allows models to adapt quickly to new tasks. This technique balances computational efficiency with performance, making it ideal for applications that require fast and reliable responses with limited data.

Benefits at a Glance

- Context-Aware: Examples are selected dynamically, improving relevance.

- Resource-Saving: Reduces prompt size and computational load.

- Versatile: Works well for a wide range of tasks with minimal data.

Dynamic few-shot prompting combines adaptability with efficiency, helping language models deliver better results in real-time scenarios.

The Challenge: Improving AI Agent Accuracy

Imagine a customer service company that relies heavily on AI agents to handle a large volume of inquiries. These AI agents are expected to understand and respond to a wide range of customer queries accurately. However, the company has been facing issues with the AI agents’ performance. The responses are often generic, sometimes irrelevant, and occasionally incorrect. This not only frustrates customers but also undermines the company’s reputation.

The primary challenge here is to enhance the AI agents’ ability to understand and respond to queries more accurately and contextually. Traditional training methods have their limitations, especially when dealing with diverse and nuanced customer interactions. This is where few-shot prompting comes into play.

Architecture

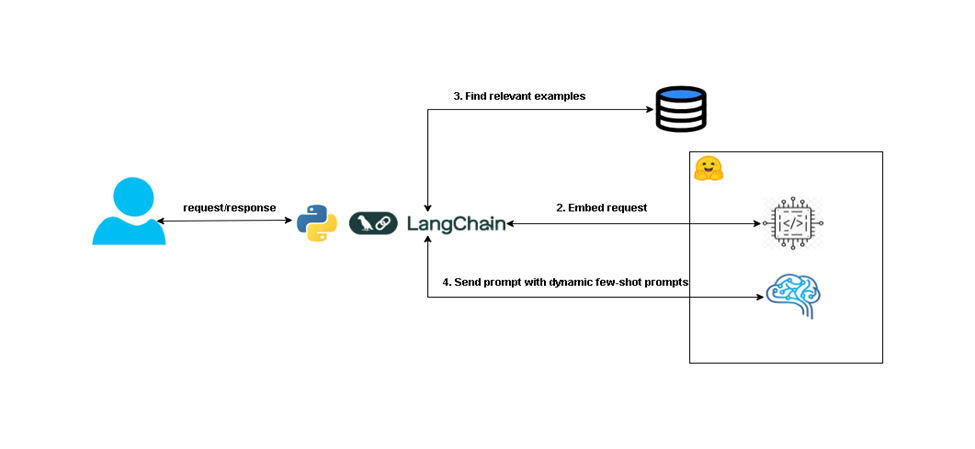

The diagram above shows the overall architecture of the solution. Let’s break down each component.

- Vector Store – The store holds the few-shot prompt examples. It is indexed by each example’s input, and the content is the input/output pair.

- Embedding Model – This model transforms the user input into a vector representation, which is used to query the vector store through a similarity search based on a chosen distance metric (e.g., cosine, Manhattan, or Euclidean distance).

- HF Model – This is the Hugging Face model we will use for the AI agent. It is responsible for providing answers to the user.
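
To make the distance methods mentioned above concrete, here is a small plain-Python sketch of the three metrics. The vectors are made-up toy values, not real embeddings, and the function names are our own.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def manhattan_distance(a, b):
    """Sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def euclidean_distance(a, b):
    """Straight-line distance between the two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


query = [1.0, 0.0, 1.0]
close = [2.0, 0.0, 2.0]  # same direction as query, larger magnitude
far = [0.0, 1.0, 0.0]    # orthogonal to query

for name, vec in [("close", close), ("far", far)]:
    print(name,
          round(cosine_distance(query, vec), 3),
          manhattan_distance(query, vec),
          round(euclidean_distance(query, vec), 3))
```

Note that cosine distance ignores vector length (`close` scores roughly 0 even though it is twice as long), while Manhattan and Euclidean distance do not. That difference is one reason the choice of metric matters when embeddings are not normalized.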

Use Case: Enhancing Customer Support with Dynamic Few Shot Prompting

To demonstrate how dynamic few-shot prompting can be used, let’s consider a company that uses a large language model and an AI agent to handle customer support inquiries in three categories:

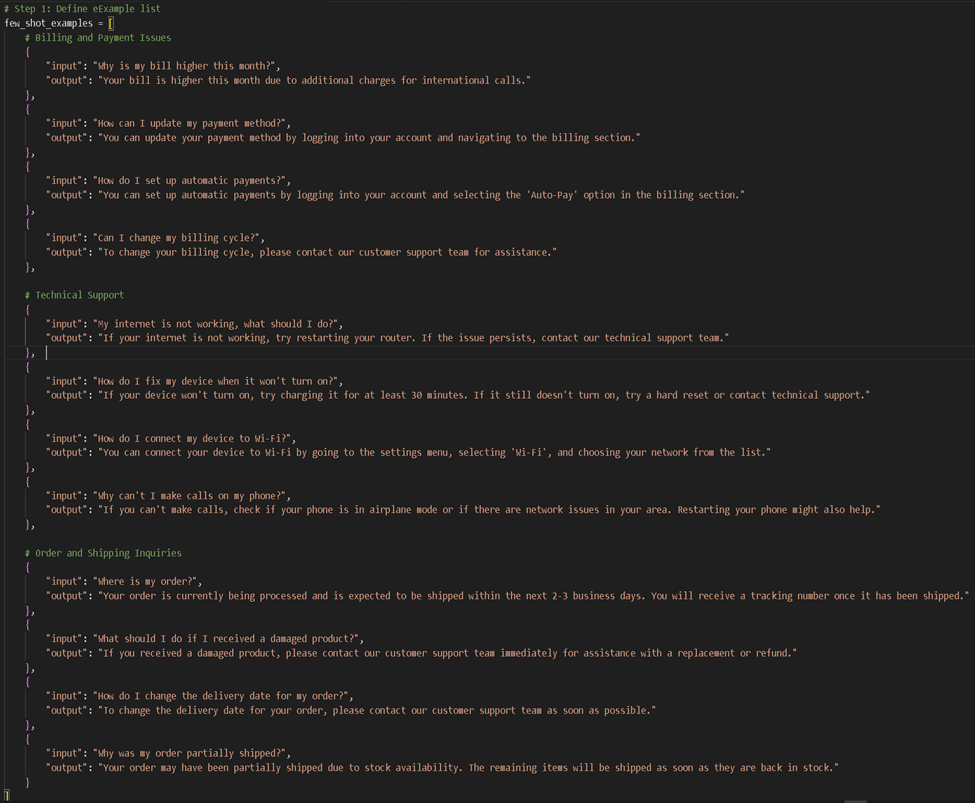

- Billing and payment issues

- Technical Support

- Order and shipping inquiries

For each of these tasks, we want to provide a number of examples to better guide the model on what it should do. A simple way of doing this is to provide all examples related to these tasks in the prompt itself. However, this strategy comes with a few downsides:

- Information Overload: Too many examples can overwhelm the model, making it difficult to determine the main request.

- Confusion: The model might get confused and generate responses that are off-topic or irrelevant.

- Accuracy Issues: The focus on examples can detract from the accuracy of the response.

- Cost: More examples mean more tokens to be processed by the model, which increases cost.

Instead, by using dynamic few-shot prompting, we can just use the most relevant examples (for the given user input) in the prompt. For example, we can decide that we only want to use the top 3 most relevant examples in the prompt.
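
A quick back-of-the-envelope sketch shows why this matters for cost. Everything here is illustrative: the pool of 30 synthetic examples is made up, and counting whitespace-separated words is only a crude proxy for how a real tokenizer would count tokens.

```python
# Hypothetical pool: 30 synthetic examples of identical length.
examples = [
    {"input": f"Sample customer question number {i}",
     "output": f"Sample assistant answer number {i}"}
    for i in range(30)
]


def rough_size(selected):
    """Count whitespace-separated words as a crude proxy for token count."""
    return sum(len(ex["input"].split()) + len(ex["output"].split()) for ex in selected)


all_in_prompt = rough_size(examples)
top_3_only = rough_size(examples[:3])  # any 3 examples; selection is not the point here

print(all_in_prompt, top_3_only)
```

With these toy numbers, the example portion of the prompt shrinks by a factor of ten, and the gap widens as the example pool grows while the top-3 budget stays fixed.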

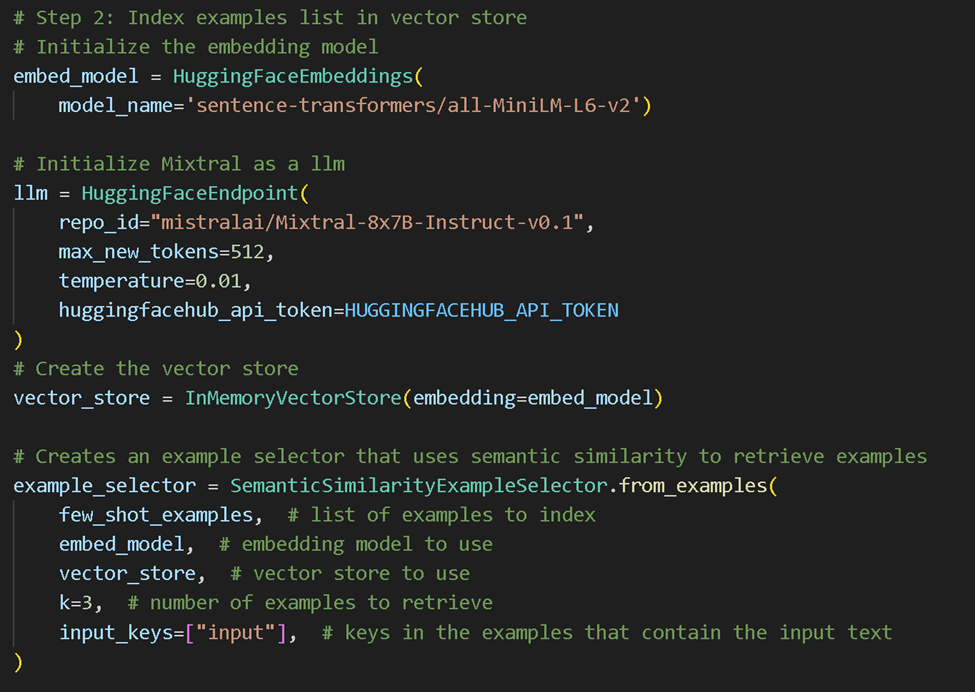

In this solution we used:

- The SemanticSimilarityExampleSelector class from the langchain_core package.

- The InMemoryVectorStore class from the langchain_core package.

- Hugging Face – An open-source platform for hosting LLMs and embedding models.

- Embedding Model – all-MiniLM-L6-v2

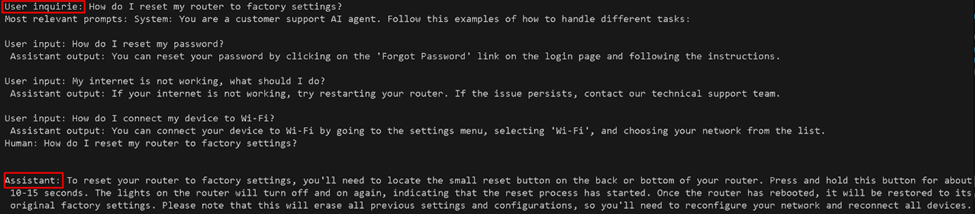

Implementation Steps

1. Define Example List

- Each example is an object that contains an input (user question example) and an output (assistant response for that question)

2. Index examples list in vector store

- The index key is the embedding of the example’s input.

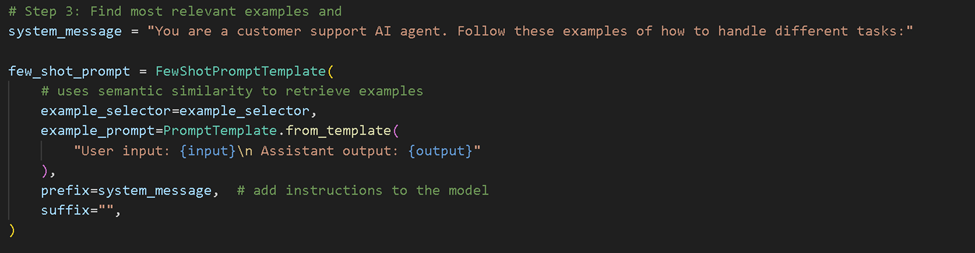
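
As a text-only companion to steps 1 and 2, here is a plain-Python sketch of the same idea. It deliberately does not use InMemoryVectorStore or all-MiniLM-L6-v2: a sparse word-count vector stands in for the embedding model, and `embed`, `build_index`, and the example texts are hypothetical names and data chosen for illustration.

```python
import re
from collections import Counter

# Step 1: each example pairs a user question with the desired assistant reply.
examples = [
    {"input": "I was charged twice this month",
     "output": "I am sorry about the duplicate charge. I will open a refund request."},
    {"input": "My router keeps disconnecting",
     "output": "Let's try restarting the router and checking the firmware version."},
    {"input": "When will my package be delivered",
     "output": "I can look up the tracking details for your package."},
]


def embed(text):
    """Toy embedding: a sparse word-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def build_index(examples):
    """Step 2: key each entry by the embedded input, keep the full pair as content."""
    return [{"vector": embed(ex["input"]), "content": ex} for ex in examples]


index = build_index(examples)
print(len(index), index[0]["vector"]["charged"])
```

Only the input is embedded, because at query time we compare the user’s question with example questions. The output travels along as content so it can be placed in the prompt later.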

3. Find the most relevant examples.

- Embed the user input using the same embedding model used in the previous step.

- Use the embedded user input to find the most relevant examples in the vector store using one of the similarity search methods.

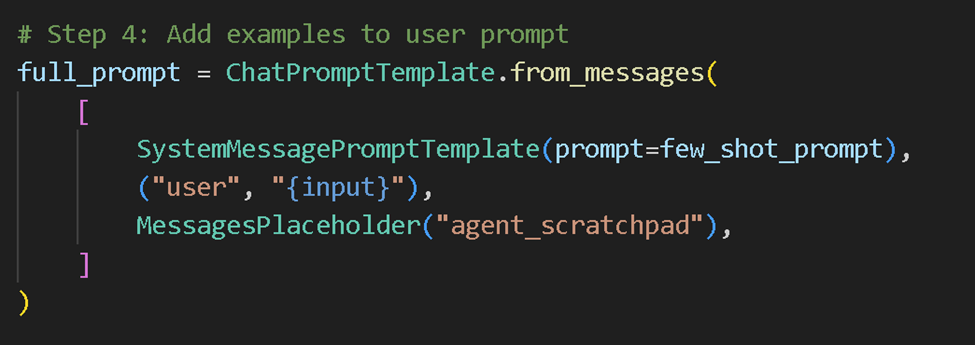
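
Step 3 can be sketched in plain Python as well. As before, this is an assumption-heavy stand-in rather than the library’s implementation: word counts replace real embeddings, cosine similarity is the chosen metric, and `embed`, `cosine_similarity`, `top_k`, and the tiny index are hypothetical.

```python
import heapq
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: sparse word counts; must match the one used for indexing."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def top_k(query, index, k=3):
    """Embed the query and return the k most similar stored examples."""
    q = embed(query)
    best = heapq.nlargest(k, index, key=lambda e: cosine_similarity(q, e["vector"]))
    return [e["content"] for e in best]


# Small hypothetical index, keyed by embedded input as described in step 2.
pairs = [
    ("I was charged twice this month", "Billing reply"),
    ("My router keeps disconnecting", "Technical support reply"),
    ("When will my package be delivered", "Shipping reply"),
]
index = [{"vector": embed(i), "content": {"input": i, "output": o}} for i, o in pairs]

results = top_k("Why was I charged twice", index, k=1)
print(results[0]["input"])
```

The query and the examples must go through the same `embed` function. Vectors from different embedding models live in different spaces, so their similarity scores would be meaningless.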

4. Add examples in the prompt.

- Add each example’s input as a user message and its output as an assistant message.

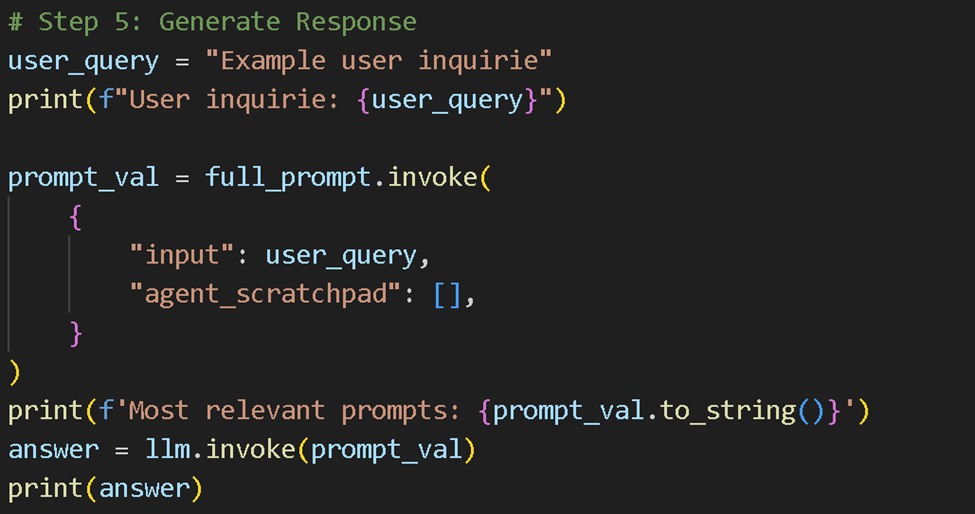

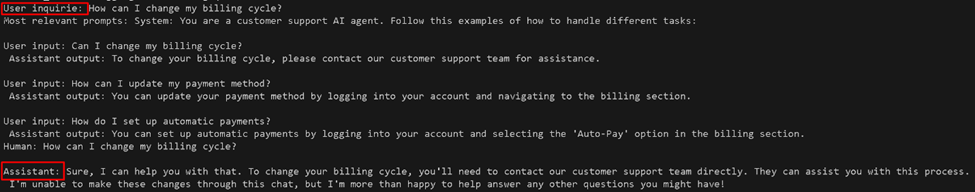
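
In plain Python, step 4 might look like the sketch below. It assumes the role/content message format that many chat APIs accept. The `build_messages` helper and the system prompt text are our own additions for illustration and are not taken from the screenshots above.

```python
def build_messages(system_prompt, selected_examples, user_input):
    """Turn retrieved examples into alternating user/assistant chat turns."""
    messages = [{"role": "system", "content": system_prompt}]
    for ex in selected_examples:
        messages.append({"role": "user", "content": ex["input"]})
        messages.append({"role": "assistant", "content": ex["output"]})
    # The real question goes last so the model answers it, not an example.
    messages.append({"role": "user", "content": user_input})
    return messages


# Hypothetical result of the retrieval step.
selected = [
    {"input": "I was charged twice this month",
     "output": "I am sorry about the duplicate charge. I will open a refund request."},
]
messages = build_messages(
    "You are a helpful customer support agent.",  # assumed system prompt
    selected,
    "There is a duplicate payment on my invoice",
)
for m in messages:
    print(m["role"], "->", m["content"])
```

Presenting examples as prior conversation turns, rather than pasting them into one long instruction, lets the model see them in the same shape as the exchange it is about to continue.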

5. Generate Response

- Use the retrieved examples to generate a response.

Code Execution

Pre-Requisites

- Python 3.X

- A Hugging Face account with a valid access token.

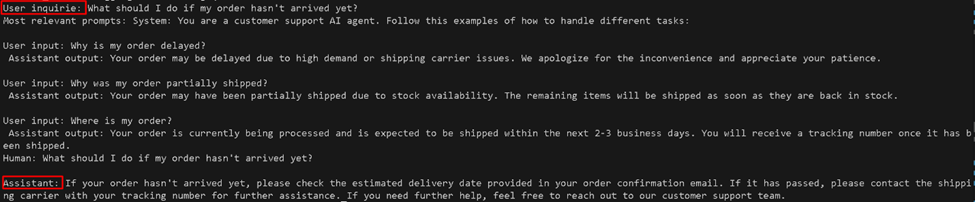

Use Case Examples

- Billing and payment example:

- Technical Support example:

- Order and shipping example:

Conclusion

The dynamic few-shot prompting technique represents a significant improvement over traditional few-shot learning. By leveraging a vector store and an embedding model, this method ensures that only the most relevant examples are included in the prompt, optimizing its relevance, size, and ultimately cost. This approach not only maintains the efficiency and effectiveness of few-shot learning but also enhances the model’s ability to generate accurate and contextually appropriate responses.

As a result, users can achieve high-quality outcomes with minimal data, improving customer satisfaction and reducing response times. This approach also optimizes the use of computational resources and scales efficiently as the number of supported tasks increases, making this technique a powerful tool for a wide range of applications. By following these steps and utilizing the provided code snippets, you can implement dynamic few-shot prompting in your projects, enhancing the performance and accuracy of your AI agents.