The search box used to be a modest little window—type in your words and pray for a match. Then it got clever. Now it sees meaning beneath the text, hunts for synonyms when you’re stuck, and even recognizes pictures.

Somewhere along the line, searching morphed from a rigid guessing game into something more intuitive.

Let’s look at how search evolved and what it looks like under the hood.

Keyword Search

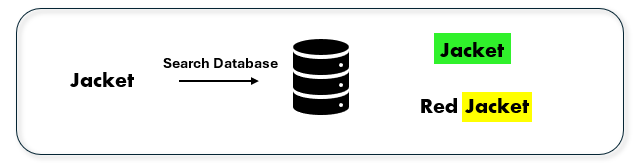

Most of us are familiar with the standard way of searching via text: we check whether there is an exact or partial match between the text we type and the data stored in a database or some other kind of storage. This is known as keyword search.

Figure 1: Example of exact and partial match of Keyword Search
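To make this concrete, here is a minimal Python sketch of keyword search. It assumes the stored data is just an in-memory list of product names, and the function name `keyword_search` and its match rules are illustrative choices rather than any particular search engine’s behaviour: an "exact" match means the whole query appears as a consecutive phrase, and a "partial" match means at least one query word appears.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def contains_phrase(doc_tokens, query_tokens):
    """True if query_tokens appear consecutively somewhere in doc_tokens."""
    n = len(query_tokens)
    return any(doc_tokens[i:i + n] == query_tokens
               for i in range(len(doc_tokens) - n + 1))


def keyword_search(query, documents):
    """Hypothetical helper: return (document, match_type) pairs in document order.

    "exact"   -> the full query appears as a consecutive phrase
    "partial" -> at least one query word appears
    Documents that share no words with the query are left out.
    """
    query_tokens = tokenize(query)
    if not query_tokens:
        return []
    results = []
    for doc in documents:
        doc_tokens = tokenize(doc)
        if contains_phrase(doc_tokens, query_tokens):
            results.append((doc, "exact"))
        elif set(query_tokens) & set(doc_tokens):
            results.append((doc, "partial"))
    return results


products = ["Navy jacket with hood", "Red jacket", "Navy coat", "Wool blazer"]
print(keyword_search("navy jacket", products))
print(keyword_search("jacket", ["Wool coat", "Tweed blazer"]))
```

The first call finds one exact and two partial matches and skips "Wool blazer". The second call returns an empty list: a coat or a blazer shares no words with "jacket", even though both are close to what the user wants.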

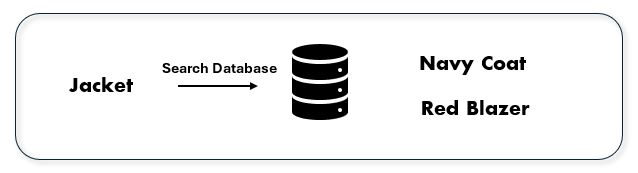

One problem with this kind of search arises when we don’t know the exact name of what we are looking for. For example, we might search for a jacket while the database contains jacket-like products such as coats or blazers, which a classic keyword search won’t match.

Figure 2: Example of similar words that won’t match with Keyword Search

Contextual / Vector Search

There is a more advanced way of searching a database that still finds relevant results when the words or sentences have no exact or partial keyword match. This is called vector, or contextual, search.

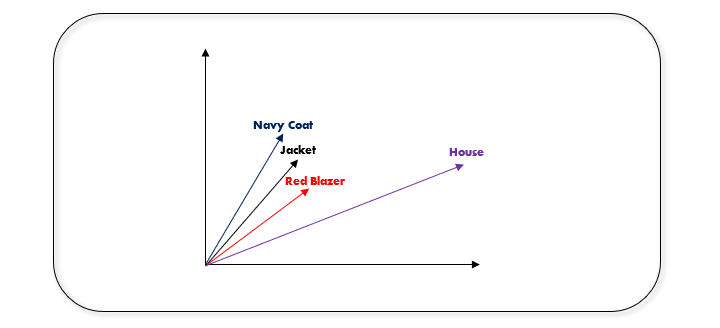

In vector search, we represent words or sentences as vectors in a vector space. This is done with embedding models trained on textual data, which take a text input and output its vector representation. We do this because words with similar meanings have vector representations that lie close to each other in this vector space, so the closeness of two vectors, commonly measured with cosine similarity, tells us how related their meanings are. Such an example can be seen in Figure 3.

Figure 3: Example of similar and dissimilar words in vector space
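A minimal sketch of the search step follows. It assumes the embeddings have already been produced by some embedding model: the 3-dimensional vectors below are hypothetical, hand-picked numbers standing in for real model output, which typically has hundreds or thousands of dimensions. Items are ranked by cosine similarity to the query vector.

```python
import math

# Hypothetical toy embeddings standing in for the output of a real embedding
# model. The numbers are hand-picked so that clothing items sit close together.
INDEX = {
    "coat": [0.85, 0.75, 0.2],
    "blazer": [0.8, 0.9, 0.15],
    "banana": [0.1, 0.05, 0.95],
}
QUERY_JACKET = [0.9, 0.8, 0.1]  # hypothetical embedding of the query "jacket"


def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def vector_search(query_vector, index, top_k=3):
    """Rank every indexed item by cosine similarity to the query (brute force)."""
    scored = [(name, cosine_similarity(query_vector, vec))
              for name, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


for name, score in vector_search(QUERY_JACKET, INDEX):
    print(f"{name}: {score:.3f}")
```

Even though the word "jacket" never appears in the index, the coat and the blazer come out on top while the banana lands far behind. In production, the brute-force loop is usually replaced by an approximate nearest-neighbour index, which trades a little accuracy for much faster lookups.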

This makes vector search a great option when we are not sure of the right word or phrase to search for.

Hybrid Search

With vector search, we only look at the similarity between vectors and ignore exact or partial keyword matches. This can produce poor results when we know the exact word we are searching for but get results that merely feature similar words. Sometimes we do want similar words included, but what if we want to give higher priority to results that are both close in vector space and an exact or partial keyword match?

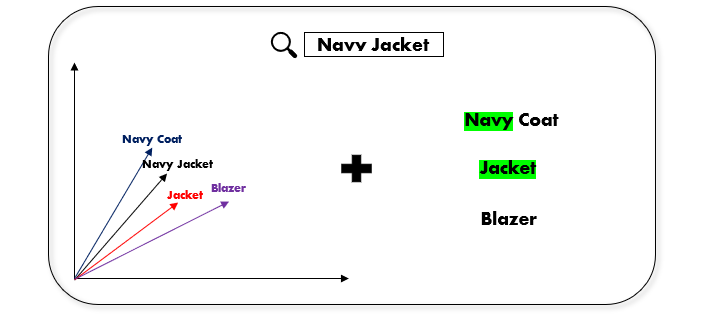

We can achieve this with hybrid search, which combines keyword and vector search. A hybrid search scores each result on its vector similarity but also takes any exact or partial keyword matches into account. Such an example can be seen in Figure 4.

Figure 4: Example of Hybrid Search

When searching for “navy jacket”, we can see that the vector representations of all the items in the example are very similar to the query, but only “navy coat” and “jacket” are also keyword matches. This means we would give a higher score to the items that perform well in both the vector and the keyword search.
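The scoring idea can be sketched as a weighted sum of the two signals, one common way of combining them (sometimes called linear fusion). This is an illustrative sketch, not the exact algorithm behind Figure 4: the embeddings are hypothetical hand-picked vectors, the keyword score is simply the fraction of query words found in the item name (production systems often use BM25 instead), and the weight `alpha = 0.7` is an arbitrary assumption. Rank-based methods such as reciprocal rank fusion are another popular option.

```python
import math
import re

# Hypothetical toy embeddings: every item is very close to the query in
# vector space, as in the "navy jacket" example.
ITEMS = {
    "navy coat": [0.85, 0.75, 0.35],
    "jacket": [0.9, 0.85, 0.2],
    "dark blue blazer": [0.88, 0.8, 0.3],
}
QUERY_TEXT = "navy jacket"
QUERY_VECTOR = [0.9, 0.8, 0.3]  # hypothetical embedding of "navy jacket"


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def keyword_score(query, text):
    """Fraction of query words that also appear in the text (0.0 to 1.0)."""
    query_words = set(re.findall(r"[a-z0-9]+", query.lower()))
    text_words = set(re.findall(r"[a-z0-9]+", text.lower()))
    if not query_words:
        return 0.0
    return len(query_words & text_words) / len(query_words)


def hybrid_search(query_text, query_vector, items, alpha=0.7):
    """Rank items by alpha * vector score + (1 - alpha) * keyword score."""
    scored = []
    for name, vec in items.items():
        score = (alpha * cosine_similarity(query_vector, vec)
                 + (1 - alpha) * keyword_score(query_text, name))
        scored.append((name, score))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


print(hybrid_search(QUERY_TEXT, QUERY_VECTOR, ITEMS, alpha=1.0))  # vector only
print(hybrid_search(QUERY_TEXT, QUERY_VECTOR, ITEMS))             # hybrid
```

With `alpha=1.0` the keyword signal is switched off and "dark blue blazer" edges ahead on pure vector similarity. With the default weight, "navy coat" and "jacket" move to the top because they score well on both signals.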

Image Search

Another very useful and convenient way to search is to use an image as the input. There are many implementations of image search, such as Google Lens, which lets you search Google using an image. Behind the scenes, these are not that different from standard vector search.

Just as vector search embeds text and represents it as a vector, image search embeds images and represents them as vectors. The difference lies in the model: images need an embedding model that can process images rather than text. One example of an image embedding model is OpenAI’s CLIP model, a neural network trained on a wide variety of (image, text) pairs.

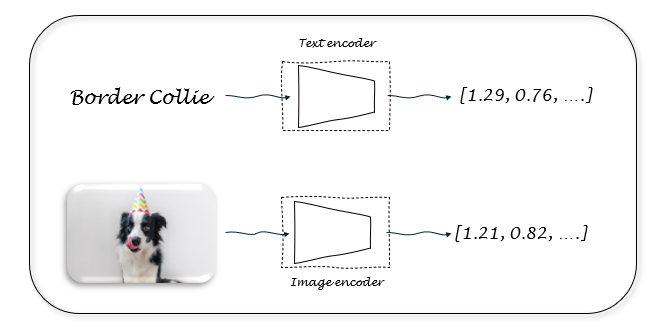

One very interesting thing about this model is that, because it is trained jointly on image and text pairs, we can perform not only image-to-image search but also text-to-image and image-to-text search. Text and image embeddings created with CLIP are represented in the same vector space. As seen in Figure 5, CLIP uses separate encoders for images and text, but the resulting vectors for the text “Border Collie” and an image of a Border Collie will be very similar.

Figure 5: OpenAI CLIP text and image embedding
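To illustrate the shared vector space, here is a sketch of text-to-image retrieval that mirrors how CLIP compares embeddings: both vectors are L2-normalized, their dot product (the cosine similarity) is multiplied by a scale factor, and a softmax turns the scores into probabilities over the candidate images. It does not call CLIP itself; the embeddings are hypothetical placeholders for what the text and image encoders would return, and `scale=100.0` is an assumed value (CLIP learns this factor during training).

```python
import math

# Hypothetical embeddings standing in for CLIP encoder outputs. In a real
# system the text and image vectors would come from CLIP's two encoders.
TEXT_EMBEDDING = [0.7, 0.7, 0.1]  # "Border Collie"
IMAGE_EMBEDDINGS = {
    "border_collie.jpg": [0.68, 0.72, 0.12],
    "tabby_cat.jpg": [0.1, 0.3, 0.95],
    "red_car.jpg": [0.9, -0.2, 0.1],
}


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def text_to_image_search(text_embedding, image_embeddings, scale=100.0):
    """Return (image, probability) pairs, most likely match first."""
    t = l2_normalize(text_embedding)
    logits = {}
    for name, emb in image_embeddings.items():
        img = l2_normalize(emb)
        # Dot product of unit vectors = cosine similarity, then scaled.
        logits[name] = scale * sum(a * b for a, b in zip(t, img))
    # Softmax over the images; subtracting the max keeps exp() from overflowing.
    top = max(logits.values())
    exps = {name: math.exp(logit - top) for name, logit in logits.items()}
    total = sum(exps.values())
    ranked = [(name, e / total) for name, e in exps.items()]
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


for name, prob in text_to_image_search(TEXT_EMBEDDING, IMAGE_EMBEDDINGS):
    print(f"{name}: {prob:.4f}")
```

Because both modalities share one space, swapping the roles (an image embedding as the query, text embeddings as the candidates) gives image-to-text search with the same function.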

Use cases

E-commerce

Personalized product recommendations and similar product searches.

- Keyword Search Limitations: Users often describe products differently, e.g., “red dress” vs. “crimson evening gown.”

- Vector Search Impact: Captures semantic meaning, matching user intent and returning products even if descriptions differ.

Visual search for similar items.

- Image Search Impact: Image embeddings enable “find similar” functionality for products based on appearance.
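“Find similar” can be sketched as a nearest-neighbour lookup in which the query is an existing product’s image embedding and the product itself is excluded from the results. The catalog, its product IDs, and its vectors below are hypothetical; in practice the vectors would come from an image embedding model such as the CLIP image encoder.

```python
import heapq
import math

# Hypothetical image embeddings for a small product catalog.
CATALOG = {
    "red_dress_a": [0.9, 0.1, 0.2],
    "red_dress_b": [0.85, 0.15, 0.25],
    "blue_jeans": [0.1, 0.9, 0.3],
    "crimson_gown": [0.8, 0.2, 0.3],
}


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def find_similar(product_id, catalog, k=2):
    """Return the k products whose embeddings are closest to product_id's."""
    query = catalog[product_id]
    candidates = ((cosine_similarity(query, vec), pid)
                  for pid, vec in catalog.items() if pid != product_id)
    # nlargest keeps only the top k without sorting the whole catalog.
    return [pid for _, pid in heapq.nlargest(k, candidates)]


print(find_similar("red_dress_a", CATALOG))
```

Here the second red dress and the crimson gown are returned, while the jeans are left out because their embedding points in a very different direction.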

Healthcare

Patient symptom analysis and matching to conditions.

- Keyword Search Limitations: Medical terminology varies (e.g., “stomachache” vs. “abdominal pain”).

- Vector Search Impact: Semantic matching links patient descriptions to relevant diagnoses or studies.

Clinical trial participant matching.

- Vector search aligns unstructured patient profiles with trial eligibility criteria more effectively than keyword-based systems.

Visual search for medical conditions.

- Image Search Impact: Allows users to search a database of medical conditions with an image of an unknown condition, such as an allergic reaction or a skin rash.

Media and Entertainment

Content recommendations (movies, music, podcasts).

- Vector Search Impact: Analyzes user preferences using embeddings, enabling suggestions based on mood, genre, or theme rather than direct keywords.

Detecting duplicates or finding similar media.

- Image Search Impact: Image, audio, or video embeddings help identify similar or duplicate files, even when metadata is incomplete. Image search also makes it possible to find a movie’s title from an image of one of its scenes.
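Duplicate detection can be sketched as comparing every pair of embeddings and flagging pairs whose similarity exceeds a threshold. The file names, vectors, and the 0.98 threshold below are hypothetical; a real threshold would have to be tuned on labelled examples for whichever embedding model is in use.

```python
import itertools
import math

# Hypothetical embeddings for a few video files. One simple assumption is that
# each vector is an average of per-frame image embeddings.
MEDIA = {
    "holiday.mp4": [0.6, 0.8, 0.0],
    "holiday_copy.mp4": [0.61, 0.79, 0.01],
    "concert.mp4": [0.0, 0.6, 0.8],
}


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def find_near_duplicates(embeddings, threshold=0.98):
    """Return (file_a, file_b, similarity) for every pair at or above the threshold."""
    pairs = []
    for (a, vec_a), (b, vec_b) in itertools.combinations(embeddings.items(), 2):
        score = cosine_similarity(vec_a, vec_b)
        if score >= threshold:
            pairs.append((a, b, score))
    return pairs


print(find_near_duplicates(MEDIA))
```

Checking every pair grows quadratically with the number of files, so larger collections would typically look up each file’s nearest neighbours in an approximate nearest-neighbour index instead.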

Finance

Fraud detection in transactions.

- Vector Search Impact: Vector embeddings can capture transaction patterns and identify anomalies without relying on explicit fraud keywords.
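One simple way to turn “identify anomalies” into code is a k-nearest-neighbour distance score: a transaction whose embedding is far from its closest neighbours looks unusual. This is a generic anomaly-detection technique swapped in for illustration, not a description of any particular fraud system; the 2-dimensional transaction embeddings, `k=2`, and the threshold of 1.0 are all hypothetical.

```python
import math

# Hypothetical 2-D transaction embeddings; "t5" is deliberately far from the rest.
TRANSACTIONS = {
    "t1": [1.0, 1.0],
    "t2": [1.1, 0.9],
    "t3": [0.9, 1.1],
    "t4": [1.0, 1.2],
    "t5": [5.0, 5.0],
}


def knn_anomaly_scores(embeddings, k=2):
    """Score each item by its mean Euclidean distance to its k nearest neighbours."""
    if len(embeddings) < 2 or k < 1:
        raise ValueError("need at least two embeddings and k >= 1")
    scores = {}
    for name, vec in embeddings.items():
        distances = sorted(math.dist(vec, other)
                           for other_name, other in embeddings.items()
                           if other_name != name)
        nearest = distances[:k]
        scores[name] = sum(nearest) / len(nearest)
    return scores


def flag_anomalies(embeddings, k=2, threshold=1.0):
    """Return the names whose anomaly score exceeds the (hypothetical) threshold."""
    scores = knn_anomaly_scores(embeddings, k)
    return [name for name, score in scores.items() if score > threshold]


print(flag_anomalies(TRANSACTIONS))
```

No fraud keyword is involved: “t5” is flagged purely because its embedding sits far from every other transaction.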

Analyzing investor sentiments.

- Embeddings of news, reports, and social media capture nuances in sentiment beyond exact matches of financial terms.

Education and Research

Semantic academic article search.

- Vector Search Impact: Helps researchers find papers related to a concept (e.g., “neural networks for translation”) even when exact terminology differs.

Personalized learning recommendations.

- Suggests courses, materials, or exercises based on student learning behavior and goals, not just keyword matches.

Legal

Legal document discovery.

- Vector Search Impact: Captures intent and legal context, surfacing related cases or clauses that might not contain the same words.

Contract comparison.

- Finds clauses with similar meanings but different wording, useful in contract negotiations or compliance checks.

Final Thoughts

Search isn’t just about matching words anymore—it’s about understanding. Machines are creeping closer to how we think, not just what we type. Contextual search guesses what we mean when we fumble for the right term. Hybrid search balances precision with intuition. Image search? It cuts straight to the point—no words needed.

But the better search gets, the lazier we become. We don’t adapt to machines anymore—they adapt to us. It won’t be long before searching feels less like digging and more like knowing. The only question left is what happens when machines start finding what we need before we even ask.