The enterprise AI landscape has reached a critical inflection point. While organizations rush to deploy AI agents and intelligent systems, many are discovering a harsh reality: their AI solutions often deliver inconsistent results, struggle with accuracy, and fail to meet the reliability standards that business-critical applications demand.

The culprit isn’t the underlying large language models (LLMs); that game is almost over. The key lies in how we design the complete input (the context) for these models.

The industry is shifting from prompt engineering to context engineering, and this shift will determine which organizations succeed with AI at scale.

TL;DR: Why Context Engineering Matters Now

Enterprises are discovering that clever prompts and simple RAG aren’t enough – AI agents still stumble with inconsistent, inaccurate, and contextually irrelevant answers. The real differentiator is context engineering: the delicate art and science of filling the context window with precisely the right information for AI systems.

Key takeaways

- Beyond prompts: Context engineering evolves prompt engineering into a full architecture: what information exists, when to surface it, and how to structure it

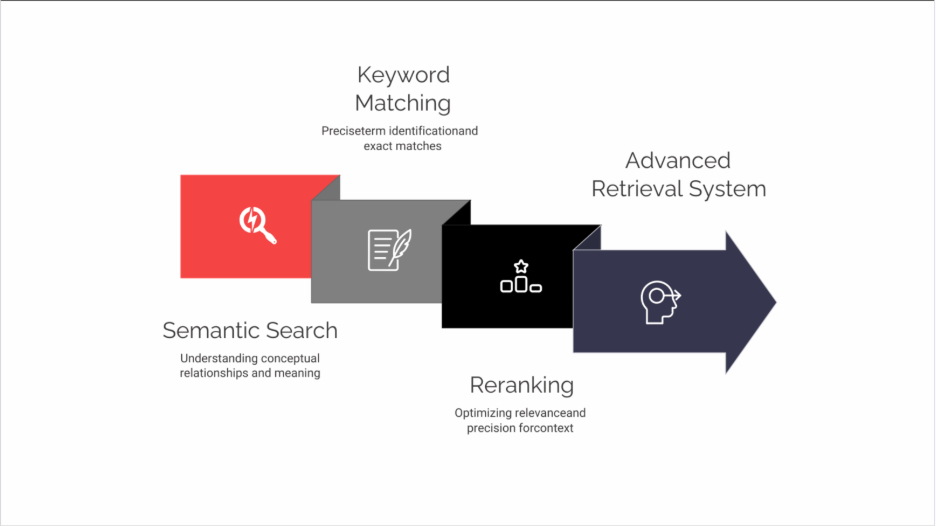

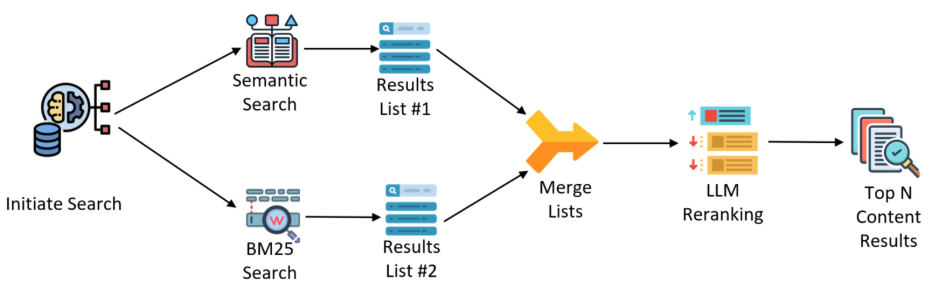

- Advanced retrieval: Hybrid search (semantic + keyword) plus reranking ensures that LLMs receive the most relevant and precise data, not just “close-enough” matches

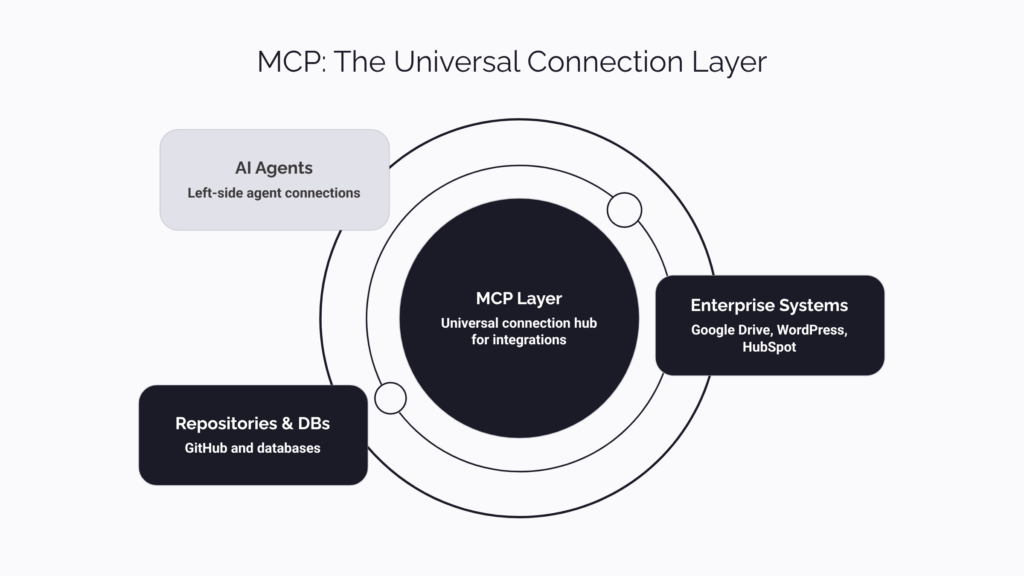

- Tools & protocols: Standards like the Model Context Protocol (MCP) streamline safe access to enterprise systems, reducing brittle integrations by up to 70%

- Memory matters: Structured, tagged, and governed memory makes agents consistent across sessions while respecting privacy and compliance boundaries

- Context dosing: Success depends on feeding the model just enough data—too little leads to gaps, too much causes “context rot” and 3x higher costs

- Business impact: Reliable, context-engineered AI agents drive 2.5x higher user adoption, 65% fewer errors, and measurable ROI—turning pilots into production-grade solutions

Bottom line: Moving from “prompts and RAG” to context engineering is the foundation for enterprise-ready AI. Organizations that master it will unlock consistency, scalability, and trust in their AI systems.

💡 Executive Insight: According to recent Gartner research, by 2026, organizations implementing structured context engineering will see 3x faster AI deployment to production and a 40% reduction in AI operational costs compared to traditional RAG-only approaches.


The Challenge: Beyond the Promise of “Plug-and-Play” AI Agents

Two years ago, the AI community was captivated by prompt engineering – the art of crafting the perfect input to coax desired outputs from LLMs. Back then, we still admired the magic behind asking a general question and getting an accurate answer instantly. But once companies started thinking about how to use this new “tool” within their business perimeter and with respect to internal processes, things became complicated.

Organizations started investing in Retrieval-Augmented Generation (RAG) systems, believing that combining clever prompts with relevant document retrieval would solve their knowledge management challenges. Yet as these systems moved from proof-of-concept to production, critical limitations emerged.

The accuracy problem manifests in subtle but business-critical ways. Simple RAG systems often fail to provide accurate answers and can deliver responses that vary inconsistently depending on how a question is asked.

The relevancy gap becomes apparent when organizations realize their RAG systems are retrieving technically correct but contextually inappropriate information. A customer service agent asking about return policies might receive detailed technical documentation instead of customer-facing guidance, simply because both documents mention “returns.” This wastes an average of 12 minutes per support ticket.

These challenges stem from a fundamental limitation: traditional approaches focus on what we ask the AI rather than what information environment we create for it to operate within. Just as poorly managed system memory leads to performance issues, inadequately engineered context creates unreliable AI behavior.

The Enterprise AI Maturity Journey

Level 1: BASIC PROMPTS (2022-2023)

├── Characteristics:

│ • Simple question-answer interactions

│ • Manual prompt crafting

│ • Inconsistent results

│ • No memory or context

├── Limitations:

│ • 40-50% accuracy

│ • High variability

│ • No enterprise integration

└── Business Value: LOW (Experimental)

⬇️ Organizations realize need for external knowledge

Level 2: SIMPLE RAG (2023-2024)

├── Characteristics:

│ • Basic document retrieval

│ • Semantic search only

│ • Static knowledge base

│ • Limited integration

├── Limitations:

│ • 60-70% accuracy

│ • Context gaps

│ • No optimization

└── Business Value: MODERATE (Pilot Ready)

⬇️ Complexity demands sophisticated approach

Level 3: ADVANCED RAG (2024)

├── Characteristics:

│ • Hybrid search methods

│ • Some reranking

│ • Basic tool calling

│ • Session memory

├── Limitations:

│ • 75-85% accuracy

│ • Integration challenges

│ • Limited scalability

└── Business Value: GOOD (Department Level)

⬇️ Enterprise scale requires architecture

Level 4: CONTEXT ENGINEERING (2024-2025)

├── Characteristics:

│ • Orchestrated hybrid retrieval

│ • Multi-layer memory architecture

│ • Standardized integrations (MCP)

│ • Dynamic context optimization

│ • Continuous learning loops

├── Capabilities:

│ • 90-95% accuracy

│ • Full audit trails

│ • Enterprise scalable

└── Business Value: HIGH (Enterprise Ready)

⬇️ Future evolution

Level 5: AUTONOMOUS CONTEXT (2025+)

├── Characteristics:

│ • Self-optimizing context

│ • Predictive information retrieval

│ • Cross-system orchestration

│ • Real-time adaptation

└── Business Value: TRANSFORMATIVE

Where Are You Today?

- 73% of enterprises are stuck at Level 2 (Simple RAG)

- Only 12% have reached Level 4 (Context Engineering)

- Average time to progress one level: 8-12 months without guidance, 2-3 months with expert support

The Technical Evolution: From Prompts to Advanced Context Engineering Architecture

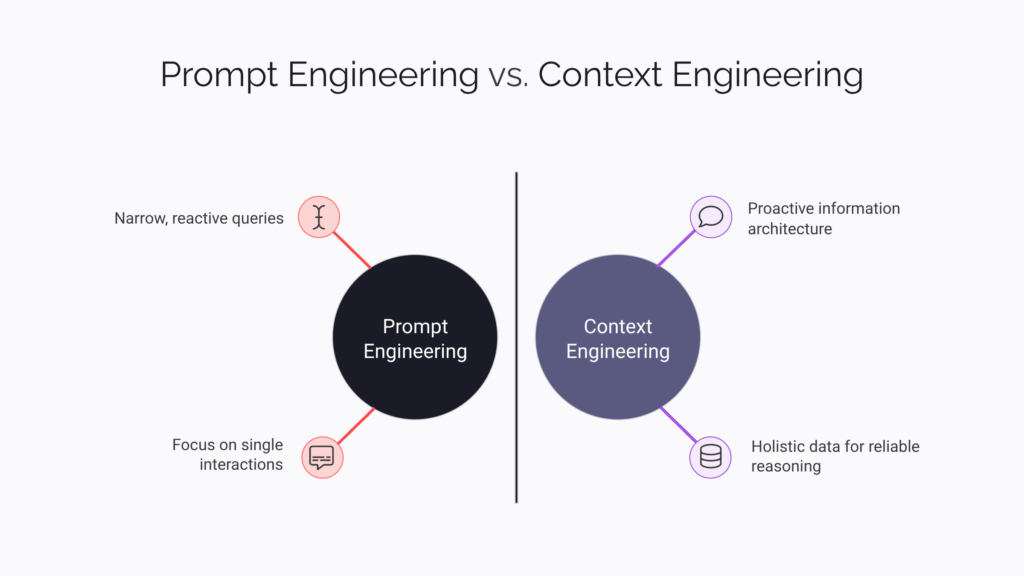

Context Engineering represents the natural evolution beyond prompt engineering – a shift from crafting individual requests to designing comprehensive information architectures that support reliable AI reasoning. While prompt engineering focuses on the “what” and “how” of individual queries, context engineering addresses the “what information exists,” “when to surface it,” and “how to structure it optimally.”

The distinction is crucial: A prompt engineer might perfect how to ask an LLM about customer churn. A context engineer ensures that when you give an LLM the task, it has access to the right context: current customer behavior, historical patterns, market conditions, and intervention success rates—all properly structured and prioritized.

This paradigm shift acknowledges that enterprise AI applications require the same architectural rigor we apply to traditional software systems. It’s about engineering the entire information ecosystem, not just optimizing individual prompts.

Advanced RAG: Beyond Simple Vector Search

Think of traditional RAG like a library with only a subject index. You might find books about “investments,” but miss the critical tax implications document filed under “regulations.” Modern context engineering demands sophisticated information retrieval that goes far beyond this basic semantic search.

Hybrid search architectures combine multiple retrieval strategies to achieve both precision and recall, reducing response errors by up to 40% and cutting support ticket escalations in half. These systems integrate:

- Semantic vector search using dense embeddings to understand conceptual relationships

- BM25 keyword search algorithms for exact term matching and legal/compliance requirements

- Hierarchical reranking models that evaluate retrieved candidates against query intent and business context

The power emerges in the orchestration: initial hybrid retrieval casts a wide net, capturing both semantically related content and exact matches, while subsequent reranking ensures the most contextually appropriate information reaches the LLM.
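
The orchestration described above can be sketched in a few lines. This is a minimal illustration, not a production retriever: it assumes the keyword and semantic retrievers have already returned ranked lists of document ids, fuses them with reciprocal rank fusion (one common fusion technique, chosen here as an assumption since the text does not name one), and then applies a stand-in reranker. The document ids, the `jurisdiction` metadata, and the scoring lambda are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Iterable, Sequence


def reciprocal_rank_fusion(rankings: Iterable[Sequence[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document ids into one fused ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Documents ranked highly by any retriever gain the most score.
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


def rerank(candidates: Sequence[str], score_fn: Callable[[str], float], top_n: int) -> list[str]:
    """Reorder fused candidates with a second-stage scorer and keep top_n."""
    # sorted() is stable, so equally scored candidates keep their fused order.
    return sorted(candidates, key=score_fn, reverse=True)[:top_n]


# Hypothetical retriever outputs for the query "mortgage rates".
keyword_hits = ["rate_sheet_2024", "rate_policy", "rate_lock_faq"]        # e.g. BM25
semantic_hits = ["rate_policy", "underwriting_guide", "rate_sheet_2024"]  # e.g. embeddings

fused = reciprocal_rank_fusion([keyword_hits, semantic_hits])

# Stand-in reranker: prefer documents matching the customer's jurisdiction.
# A real system would typically use a learned reranking model here.
jurisdiction = {
    "rate_sheet_2024": "US",
    "rate_policy": "US",
    "underwriting_guide": "EU",
    "rate_lock_faq": "US",
}
final = rerank(fused, lambda doc_id: 1.0 if jurisdiction[doc_id] == "US" else 0.0, top_n=3)
print(final)
```

Documents found by both retrievers rise to the top of the fused list, and the second stage then drops the candidate that does not fit the customer's jurisdiction.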

This technical sophistication directly addresses business needs. In financial services, for example, hybrid search ensures that queries about “mortgage rates” retrieve both current rate sheets (keyword match) and relevant policy documents explaining rate determination criteria (semantic match), while reranking prioritizes information specific to the customer’s profile and jurisdiction.

The Model Context Protocol Revolution

There are various data sources that AI systems can use to retrieve information as part of the context. Data can be accessed traditionally, from databases and files, but in today’s cloud-first world, much company data resides in SaaS applications accessible via APIs.

The evolution from simple function calling to the Model Context Protocol (MCP) represents a significant advancement. MCP standardizes how models interact with external tools, databases, and APIs, creating a more reliable and scalable framework for retrieving the right information and giving an LLM access to enterprise systems.

Unlike ad-hoc function calling that requires custom integration for each data source, MCP provides a unified interface that reduces integration complexity by 70% and maintenance overhead by 50%. This standardization enables AI agents to dynamically access relevant external context—from CRM systems to real-time market data—without overwhelming developers.

The business impact is substantial: A sales AI agent can pull customer history from CRM, pricing from ERP, and compliance guidelines from document systems through standardized MCP interfaces.
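
To make the idea of a unified interface concrete, here is a minimal sketch in plain Python. It does not use any MCP SDK and is not the protocol itself; it only mimics the general pattern of tools that declare a name, a description, and an argument schema, and are invoked through one entry point. The `Tool` and `ToolRegistry` classes, the tool names, and the canned CRM and ERP responses are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    input_schema: dict[str, Any]  # JSON-Schema-style description of arguments
    handler: Callable[..., Any]


class ToolRegistry:
    """One discovery and invocation surface for every backing system."""

    def __init__(self) -> None:
        self._tools: dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def list_tools(self) -> list[dict[str, Any]]:
        # What an agent would read to decide which tool to call.
        return [
            {"name": t.name, "description": t.description, "input_schema": t.input_schema}
            for t in self._tools.values()
        ]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        tool = self._tools[name]
        # Only required-argument presence is checked; a real system would
        # validate the full schema.
        missing = [key for key in tool.input_schema.get("required", []) if key not in arguments]
        if missing:
            raise ValueError(f"missing arguments for {name}: {missing}")
        return tool.handler(**arguments)


registry = ToolRegistry()
registry.register(Tool(
    name="crm_get_customer_history",
    description="Return recent interactions for a customer (hypothetical CRM).",
    input_schema={"type": "object", "properties": {"customer_id": {"type": "string"}},
                  "required": ["customer_id"]},
    handler=lambda customer_id: {"customer_id": customer_id,
                                 "interactions": ["renewal call", "pricing inquiry"]},
))
registry.register(Tool(
    name="erp_get_price",
    description="Return the unit price for a SKU (hypothetical ERP).",
    input_schema={"type": "object", "properties": {"sku": {"type": "string"}},
                  "required": ["sku"]},
    handler=lambda sku: {"sku": sku, "unit_price": 129.0},
))

history = registry.call_tool("crm_get_customer_history", {"customer_id": "C-42"})
price = registry.call_tool("erp_get_price", {"sku": "SKU-7"})
```

The point of the design is that adding a new data source means registering one more tool, while the agent-facing `list_tools` and `call_tool` surface stays unchanged.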

Memory Architecture: The Overlooked Context Component

Perhaps the most underutilized aspect of context engineering is structured memory management. While most organizations focus on immediate context (current conversation) and retrieved context (RAG results), sophisticated AI systems require persistent memory that accumulates and refines understanding over time.

Effective memory architecture involves multiple layers:

- Episodic memory captures specific interactions and their outcomes

- Procedural memory stores learned workflows and successful problem-solving patterns

- Semantic memory maintains facts and relationships discovered through interactions

For business applications where processes execute on specific objects (clients, products, services), the critical challenge lies in annotation and categorization. Raw conversation logs provide limited value; memories must be tagged with metadata: client identifiers, problem categories, solution types, and outcome indicators.

This structured approach enables precise memory retrieval while reducing context preparation time by 60%. User-specific memories remain isolated, while “object-related” and general procedural knowledge can benefit all interactions.
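
A minimal sketch of tagged memory with isolation might look like the following. The field names (`owner_user_id`, `client_id`, `tags`), the visibility rule, and the sample records are assumptions made for illustration; the article does not prescribe a schema.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class MemoryKind(Enum):
    EPISODIC = "episodic"      # specific interactions and their outcomes
    PROCEDURAL = "procedural"  # learned workflows
    SEMANTIC = "semantic"      # facts and relationships


@dataclass(frozen=True)
class MemoryRecord:
    kind: MemoryKind
    text: str
    owner_user_id: Optional[str] = None  # None means shared across users
    client_id: Optional[str] = None      # None means not tied to one client
    tags: frozenset = frozenset()


def retrieve(store, user_id, client_id, required_tags=frozenset()):
    """Return memories visible to this user for this client and topic."""
    results = []
    for memory in store:
        if memory.owner_user_id not in (None, user_id):
            continue  # another user's private memory stays isolated
        if memory.client_id not in (None, client_id):
            continue  # memory about a different business object
        if not required_tags <= memory.tags:
            continue  # topic tags do not match
        results.append(memory)
    return results


store = [
    MemoryRecord(MemoryKind.EPISODIC, "Resolved ACME invoice dispute with a credit note.",
                 owner_user_id="alice", client_id="acme", tags=frozenset({"billing", "resolved"})),
    MemoryRecord(MemoryKind.EPISODIC, "Private note on ACME call.",
                 owner_user_id="bob", client_id="acme", tags=frozenset({"billing"})),
    MemoryRecord(MemoryKind.PROCEDURAL, "For billing disputes, verify the invoice first.",
                 tags=frozenset({"billing"})),
    MemoryRecord(MemoryKind.SEMANTIC, "ACME is on net-60 payment terms.",
                 client_id="acme", tags=frozenset({"billing"})),
    MemoryRecord(MemoryKind.SEMANTIC, "Globex is on net-30 payment terms.",
                 client_id="globex", tags=frozenset({"billing"})),
]

visible = retrieve(store, user_id="alice", client_id="acme", required_tags=frozenset({"billing"}))
```

Here Alice sees her own episodic memory, the shared procedure, and the shared fact about ACME, but neither Bob's private note nor anything about Globex.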

Privacy considerations become paramount. Advanced memory architectures implement fine-grained access controls, ensuring context enrichment never compromises security boundaries, a critical requirement that helped our healthcare client achieve HIPAA compliance for their AI deployment.

The Art of Context Dosing: Engineering the Delicate Balance

Context engineering is the delicate art and science of filling the context window with precisely the right information. This requires sophisticated judgment about information density and relevance—what practitioners call “context dosing.” Too little context leaves AI systems underpowered; too much creates several failure modes:

- Context poisoning: when incorrect information contaminates the reasoning process

- Context distraction: when excessive information overwhelms the model’s focus

- Context rot: when performance degrades as context windows fill beyond optimal capacity

Skilled context engineers develop intuition for the optimal information density for specific tasks and model capabilities. This expertise becomes critical for cost management, as context windows directly impact computational expenses, and for performance optimization, as models demonstrate measurably better reasoning within certain token ranges.
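
One simple way to approximate context dosing is to pack the most relevant chunks into a fixed token budget and drop anything below a relevance floor. The sketch below assumes each chunk already carries a relevance score from retrieval, and it uses a whitespace word count as a crude stand-in for a real tokenizer; the budget, the threshold, and the sample chunks are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    # Swap in the tokenizer of the target model for real use.
    return len(text.split())


def dose_context(chunks, budget_tokens, min_relevance=0.5):
    """Greedily pick the most relevant chunks that fit the token budget.

    chunks: iterable of (text, relevance) pairs, relevance in [0, 1].
    Returns (selected_texts, tokens_used).
    """
    selected, used = [], 0
    for text, relevance in sorted(chunks, key=lambda c: c[1], reverse=True):
        if relevance < min_relevance:
            break  # everything after this is even less relevant
        cost = estimate_tokens(text)
        if used + cost > budget_tokens:
            continue  # too large for the remaining budget; try smaller chunks
        selected.append(text)
        used += cost
    return selected, used


chunks = [
    ("Refund policy: items may be returned within 30 days.", 0.92),
    ("Warehouse return handling procedure for damaged pallets and carrier claims.", 0.61),
    ("Customer is a premium member since 2021.", 0.80),
    ("Company history and founding story.", 0.20),
]

selected, used = dose_context(chunks, budget_tokens=18)
```

With an 18-token budget, the two most relevant chunks are kept (16 estimated tokens), the warehouse procedure is skipped because it would overflow the budget, and the low-relevance company history never enters the context.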

Business Impact: The ROI of Reliable AI

From a business perspective, the difference between superficial prompt engineering and sophisticated context engineering becomes apparent in measurable outcomes that directly impact organizational success.

Enhanced Decision Support

AI systems built with proper context engineering provide consistent, audit-worthy recommendations that business leaders can confidently act upon. When a financial AI agent analyzes loan applications, context-engineered systems ensure it considers not just credit scores, but also regulatory requirements, market conditions, risk policies, and historical outcomes, all structured in a reproducible way.

Accelerated Employee Adoption

The difference between 70% and 95% accuracy fundamentally changes user behavior. Employees quickly abandon AI tools that occasionally provide confidently wrong answers. Context-engineered systems that deliver consistent results see 2.5x higher adoption rates and generate 5x more value per user, translating into genuine productivity gains rather than abandoned pilot programs.

Reduced Operational Risk

In regulated industries, AI systems must provide consistent, defendable reasoning. Context engineering enables organizations to implement AI solutions that maintain detailed provenance—showing exactly what information influenced each decision.
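
As a rough illustration of what such provenance could look like, the sketch below records which context items were supplied for a decision, together with a content hash so that later changes to a source can be detected. The record layout, the `ContextItem` type, and the sample sources are assumptions, not a description of any particular product.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    source_id: str  # where the text came from, e.g. a document path
    text: str       # the exact text placed in the context window


def build_provenance(question: str, items: list, answer: str) -> dict:
    """Return an audit record linking an answer to the context behind it."""
    return {
        "question": question,
        "answer": answer,
        "sources": [
            {
                "source_id": item.source_id,
                # Hash of the exact text, so edits to the source are detectable.
                "sha256": hashlib.sha256(item.text.encode("utf-8")).hexdigest(),
            }
            for item in items
        ],
    }


items = [
    ContextItem("policy/risk-7", "Loans above 500k require a second reviewer."),
    ContextItem("regulation/kyc-2", "Identity must be verified before approval."),
]
record = build_provenance(
    question="Can this 600k loan be auto-approved?",
    items=items,
    answer="No, it needs a second reviewer.",
)
```

Storing such a record alongside each response gives auditors a concrete list of the sources the model was shown, though it does not by itself prove which of them the model relied on.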

Scalable Expertise Distribution

Well-engineered context systems effectively capture and distribute institutional knowledge. A customer service AI trained with proper context engineering doesn’t just answer questions—it applies accumulated wisdom about edge cases, customer segments, and policy interactions.

| Aspect | Traditional RAG | Context Engineering | Business Impact |
| --- | --- | --- | --- |
| Information Retrieval | Single-method search (usually semantic only) | Hybrid search (semantic + keyword + reranking) | 40% fewer retrieval errors |
| Context Scope | Current query only | Query + memory + external tools + historical patterns | 3x better problem resolution |
| Memory Management | No persistent memory or simple chat history | Structured episodic, procedural, and semantic memory | 75% reduction in repeat questions |
| System Integration | Ad-hoc API calls and custom functions | Standardized protocols (MCP) | 70% less integration complexity |
| Context Optimization | Fixed context chunks | Dynamic context dosing based on task complexity | 50% reduction in token costs |
| Accuracy Rate | 65-75% typical | 90-95% achievable | 2.5x higher user adoption |
| Consistency | Varies by query phrasing | Uniform regardless of input variation | 89% reduction in compliance risks |
| Personalization | Generic responses | User/role/context-aware responses | 60% faster task completion |
| Audit Trail | Limited or no provenance | Complete decision lineage | 100% compliance ready |
| Time to Production | 6-12 months typical | 2-4 months with frameworks | 3x faster deployment |
| Maintenance Effort | High – constant prompt tweaking | Moderate – systematic optimization | 50% lower operational overhead |
| Scalability | Degrades with complexity | Scales with proper architecture | Handles 10x more use cases |

💡 ROI Alert: Companies using proper context engineering report:

- 45% reduction in support ticket handling time

- 3x improvement in first-call resolution rates

- Up to $2.3M average annual savings from reduced escalations

- 85% user satisfaction vs. 42% with basic RAG

Engineering Excellence in Practice

The transition from traditional prompt-based approaches to sophisticated context engineering requires both technical expertise and architectural thinking that mirrors enterprise software development best practices.

Cross-functional collaboration is essential. Domain experts understand business requirements, data engineers architect robust retrieval systems, and AI specialists optimize model behavior. This interdisciplinary approach ensures technical sophistication serves business objectives.

Enterprise context engineering must address scalability, monitoring, and maintenance from the outset. Systems need comprehensive observability to track context quality, retrieval precision, and reasoning outcomes. Best-in-class implementations monitor over 25 metrics including context relevance scores, retrieval latency, and output consistency rates.
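
Two of the metrics mentioned above can be computed with very little code. The sketch below shows precision at k for retrieval and a simple output consistency rate across paraphrased questions. The exact definitions are assumptions (teams define these metrics differently), and the sample data is hypothetical.

```python
from collections import Counter


def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved ids that are labeled relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k


def consistency_rate(answers):
    """Share of answers that agree with the most common answer.

    Intended for answers to paraphrases of one question, after
    normalizing them to comparable strings.
    """
    if not answers:
        return 0.0
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers)


# Hypothetical evaluation data for one query.
retrieved_ids = ["a", "b", "c", "d"]
relevant_ids = {"a", "c"}
p_at_3 = precision_at_k(retrieved_ids, relevant_ids, k=3)

# Hypothetical normalized answers to four phrasings of the same question.
paraphrase_answers = ["30 days", "30 days", "14 days", "30 days"]
consistency = consistency_rate(paraphrase_answers)
```

Tracked over time, a drop in either number is an early signal that retrieval quality or answer stability is degrading and the context pipeline needs attention.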

Continuous Improvement Cycles

Unlike traditional software, AI systems benefit from continuous context refinement based on interaction patterns. Sophisticated systems implement feedback loops that identify context gaps, refine retrieval algorithms, and optimize memory management. Leading organizations see 15-20% monthly improvement in AI accuracy through systematic context optimization.

The challenge isn’t just setup—it’s maintaining performance as business needs evolve. Context engineering systems must adjust while staying reliable and consistent, requiring dedicated governance and regular audits.

From Theory to Tangible Advantage: Engineering AI You Can Trust

Ultimately, the journey from experimental AI to enterprise-grade solutions is paved with disciplined engineering, not just clever prompts. Achieving reliable, consistent, and accurate AI behavior requires a deliberate shift from simply asking questions to architecting the entire information ecosystem in which the AI operates.

This is the essence of context engineering: a systematic practice that combines advanced retrieval techniques, standardized access protocols, and structured memory management to create a robust foundation for AI reasoning. By treating AI development with the same architectural rigor as any mission-critical software, we transform models from impressive demonstrators into dependable, scalable business assets that drive measurable ROI.

At IWConnect, we recognized this paradigm shift early. Having implemented context engineering internally and for enterprise clients across finance, healthcare, and manufacturing, we’ve seen firsthand how proper context architecture can transform AI from experimental technology to competitive advantage. Our engineering teams have developed proprietary frameworks that reduce implementation time by 60% while achieving 95%+ accuracy rates, consistently outperforming traditional RAG approaches by 2-3x.

This hands-on experience, validated through successful deployments processing millions of queries monthly, has prepared us to deliver robust, enterprise-ready solutions. We invest deeply in these methodologies because they unlock true enterprise value.

IWConnect is a leading AI solutions provider specializing in enterprise-grade context engineering implementations. We help organizations transform their AI initiatives from promising pilots to production-ready solutions that drive measurable business value.