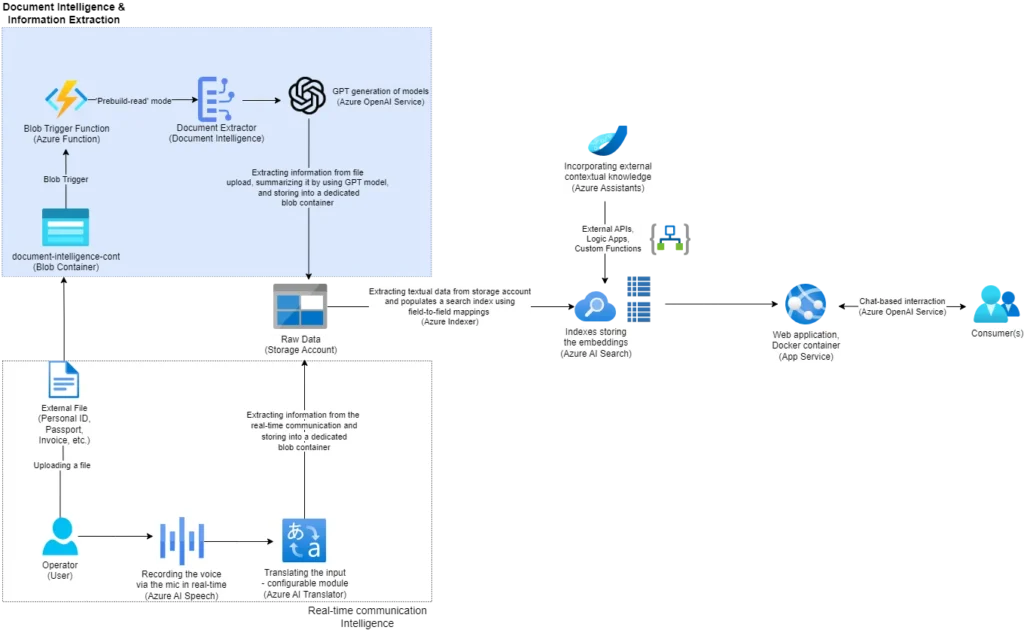

Building an Intelligent Document Processing Pipeline with Azure OpenAI and Azure AI (Cognitive) Services

Let’s continue our journey of designing and building a Cognitive Services-based cloud-native solution with the AI services from the Microsoft AI portfolio, which began in the previous article, Implementing Real-Time Speech Recognition, Translation, and Data Storage Using Azure Cognitive Services.

So far, I have used the Azure AI Speech and Azure AI Translator services for real-time speech recognition, transcription, and translation, and I created a centralized Blob Storage location for storing the translations.

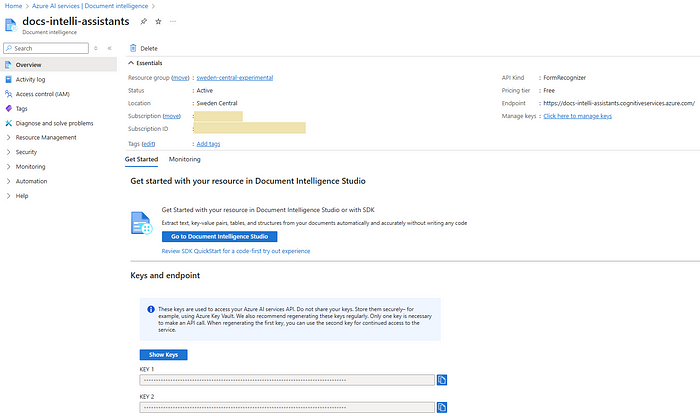

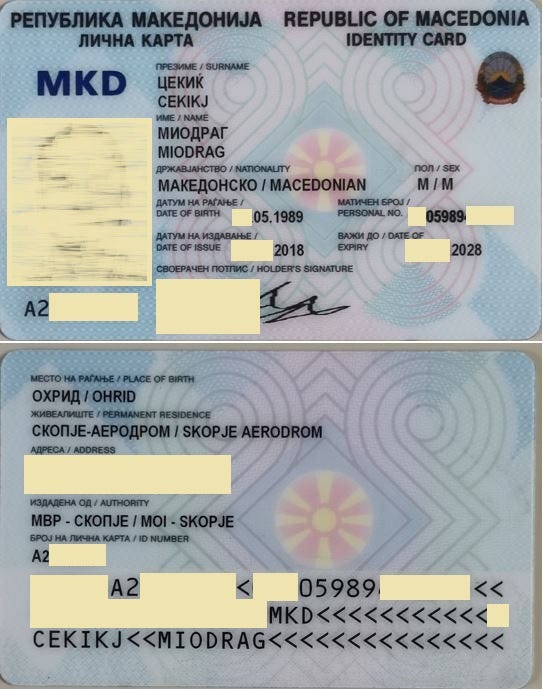

It’s time to introduce the Azure AI Document Intelligence instance that I will use for Optical Character Recognition (OCR) and extracting personal information from an official document (in this case, a personal ID).

What is Azure AI Document Intelligence?

Azure AI Document Intelligence, previously known as Form Recognizer, is an AI service that applies advanced machine learning to automatically and accurately extract text, key-value pairs, tables, and structure from documents.

In general, it gives organizations powerful tools to automate and streamline document processing, reducing manual data entry, improving operational efficiency, and freeing employees to focus on higher-value tasks.

The service is complemented by Document Intelligence Studio, an online tool for visually exploring, understanding, training, and integrating the service’s features. This makes it very handy for initial prototyping and for benchmarking different document designs and formats.

Document Intelligence comes with a wide variety of models for adding intelligent document processing to workflows. They fall into several categories: Read, which extracts printed and handwritten text from PDF documents and scanned images; Layout, which takes documents in various formats and returns structured data representations of them; Prebuilt, ready-made models that add intelligent document processing to apps and flows without training or building models from scratch; Custom Extraction, which uses advanced machine learning to identify documents, detect and extract information from forms and documents, and return the extracted data as structured JSON; and Custom Classification, deep-learning models that combine layout and language features to accurately detect and identify documents.
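In code, the model is chosen by the model ID string passed to the analysis call; the function later in this article passes “prebuilt-read”. The C# sketch below maps a few hypothetical document categories to model IDs. DocumentKind and ModelSelector are illustrative names, not part of any SDK. “prebuilt-layout” and “prebuilt-idDocument” are prebuilt model IDs, while a custom model is addressed by the ID you assign when training it; check the IDs against the API version you target.

```csharp
using System;

// Hypothetical document categories, used only for this illustration.
public enum DocumentKind
{
    PlainText,        // printed or handwritten text only
    StructuredPage,   // text plus tables, selection marks, and layout
    IdentityDocument  // ID cards, passports, driver's licenses
}

public static class ModelSelector
{
    // Returns the model ID to pass to the analyze call.
    // A caller-supplied custom model ID always wins over the prebuilt mapping.
    public static string ModelIdFor(DocumentKind kind, string? customModelId = null) =>
        customModelId ?? kind switch
        {
            DocumentKind.PlainText => "prebuilt-read",
            DocumentKind.StructuredPage => "prebuilt-layout",
            DocumentKind.IdentityDocument => "prebuilt-idDocument",
            _ => throw new ArgumentOutOfRangeException(nameof(kind))
        };
}
```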

Azure Function

I will combine the AI-powered document intelligence process with a Blob-triggered Azure Function, which runs serverlessly whenever a new file is added to a blob container. Azure Functions let you write less code, maintain less infrastructure, and save on costs, especially for operation-centric processes that need no additional cloud infrastructure. They can also be used to build web APIs, respond to database changes, process IoT streams, and manage message queues.

In this case, I will use the function to encapsulate the business logic behind the document intelligence module. More precisely, I will configure it to trigger as soon as a new file is added to the blob container and then start the OCR-based extraction of the file’s content.
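A blob trigger path such as “intelli-docs/{name}” (used in the function below) tells the runtime which container to watch and binds the rest of the blob path to the {name} parameter. The C# sketch below illustrates that matching for a trailing {name} placeholder only. BlobPathPattern is a hypothetical helper for illustration; it is not how the Functions runtime is implemented.

```csharp
using System;

public static class BlobPathPattern
{
    // Conceptual illustration: the container prefix must match, and everything
    // after it becomes the value of {name}.
    public static bool TryMatch(string pattern, string blobPath, out string name)
    {
        name = string.Empty;
        const string placeholder = "{name}";
        if (!pattern.EndsWith(placeholder, StringComparison.Ordinal))
            throw new ArgumentException("This sketch only supports a trailing {name} placeholder.", nameof(pattern));

        // For "intelli-docs/{name}" the prefix is "intelli-docs/".
        string prefix = pattern.Substring(0, pattern.Length - placeholder.Length);
        if (!blobPath.StartsWith(prefix, StringComparison.Ordinal) || blobPath.Length == prefix.Length)
            return false; // different container, or no blob name after the prefix

        name = blobPath.Substring(prefix.Length);
        return true;
    }
}
```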

Azure OpenAI Service

Next comes the Azure OpenAI Service, which I explained in detail in the Crafting Your Customized ChatGPT with Microsoft Azure OpenAI Service article (part of the series on designing, implementing, deploying, and maintaining chatbot-based cloud-native solutions using Azure AI Studio). It provides REST API access to OpenAI’s powerful language models, including o1-preview, o1-mini, GPT-4o, GPT-4o mini, GPT-4 Turbo with Vision, GPT-4, GPT-3.5-Turbo, and the Embeddings model series.

This wide range of models is a large part of what makes the service so interesting and widely used. I will use it as a post-processing step after document intelligence: it summarizes the extracted information and rewrites it in a more natural, human-readable form that is easier to understand.

In my case, it will use my personal information to write a short summary about me: who I am, where I live, what my hometown is, and so on. Finally, I will store the adjusted content in another blob container on the same storage account that the previous article used for the real-time communication transcripts.

Time to Give It a Try

Let me start this module by creating a local Blob-triggered Azure Function that starts the execution flow as soon as I add a file to the ‘intelli-docs’ blob container.

[FunctionName("DocumentExtractor")]

public async Task Run([BlobTrigger("intelli-docs/{name}", Connection = "docsintelliconnection")] BlobClient myBlob, string name, ILogger log)

{

var logLevel = "Information";

var dateTimeStamp = DateTime.UtcNow;

var blobUri = myBlob.Uri;

Console.WriteLine($"C# Blob trigger function Processed blob\n Name:{name} \n Size: {blobUri} Bytes");

log.LogInformation($"C# Blob trigger function Processed blob\n Name:{name} \n Size: {blobUri} Bytes");

string endpoint = DocumentIntelligenceConfiguration.Endpoint;

string key = DocumentIntelligenceConfiguration.Key;

AzureKeyCredential credential = new AzureKeyCredential(key);

// Azure Blob Storage configuration

string blobConnectionString = DocumentIntelligenceConfiguration.BlobStorageConnectionString;

string containerName = DocumentIntelligenceConfiguration.BlobStorageContainerName;

string blobName = DocumentIntelligenceConfiguration.BlobStorageBlobName;

DocumentAnalysisClient client = new DocumentAnalysisClient(new Uri(endpoint), credential);

Uri fileUri = new Uri(blobUri.ToString());

try

{

AnalyzeDocumentContent content = new AnalyzeDocumentContent()

{

UrlSource = fileUri

};

AnalyzeDocumentOperation operation = client.AnalyzeDocumentFromUri(WaitUntil.Completed, "prebuilt-read", fileUri);

Azure.AI.FormRecognizer.DocumentAnalysis.AnalyzeResult result = operation.Value;

var logDetails = new StringBuilder();

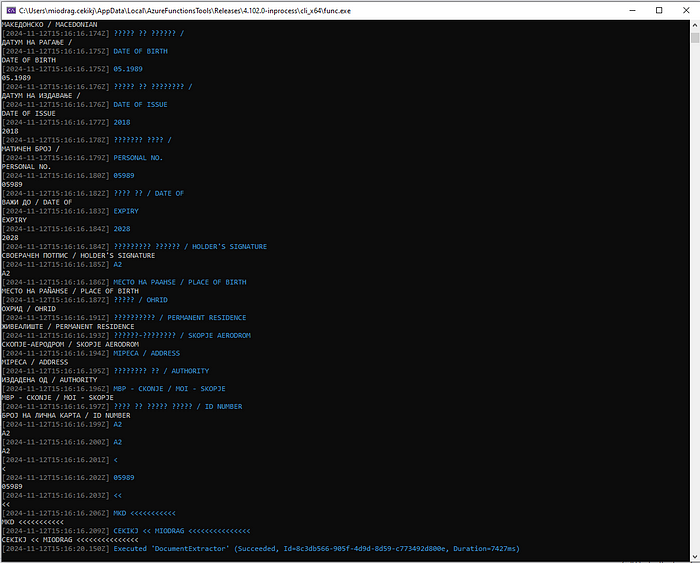

foreach (Azure.AI.FormRecognizer.DocumentAnalysis.DocumentLine extractedLineContent in result.Pages[0].Lines)

{

Console.OutputEncoding = Encoding.UTF8;

log.LogInformation($"{extractedLineContent.Content.ToString()}");

logDetails.AppendLine($"{logLevel}--[{dateTimeStamp}]-{extractedLineContent.Content.ToString()}");

Console.WriteLine($"{extractedLineContent.Content.ToString()}");

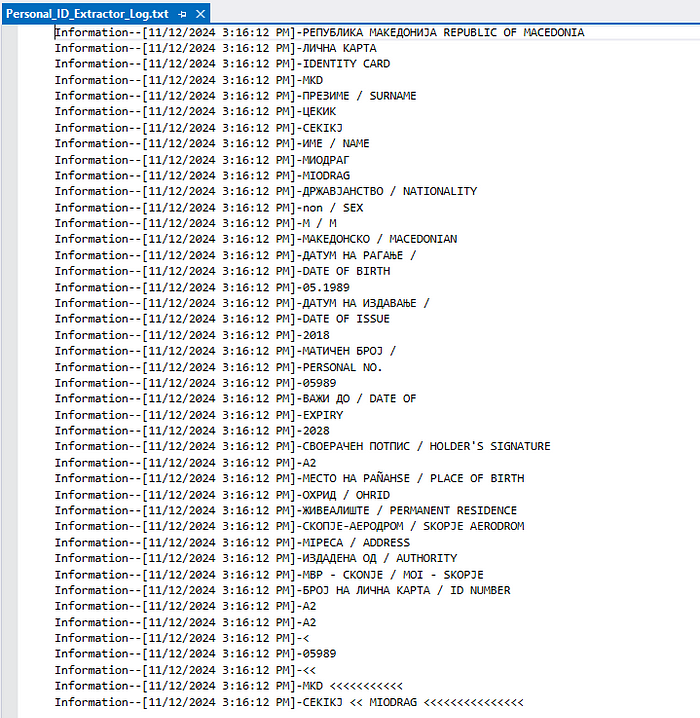

}

using (System.IO.StreamWriter file = new System.IO.StreamWriter(@"C:\Users\miodrag.cekikj\Desktop\DotNET Community\Real Time AI\solutions\working\RealTimeAI\DocumentIntelligence\Personal_ID_Extractor_Log.txt"))

{

file.WriteLine(logDetails.ToString()); // "sb" is the StringBuilder

}

await AppendToBlobStorage(blobConnectionString, containerName, blobName, await AzureOpenAIHelper.LLMSummarization(logDetails.ToString()));

}

catch (Exception ex)

{

throw;

}

}Here, I designed an Azure Function named “DocumentExtractor” to handle the automatic processing of documents uploaded to an Azure Blob Storage container. The function springs into action whenever a new blob appears in the specified container, leveraging the capabilities of Azure Functions and Blob Triggers. To start, I defined the function with the [FunctionName("DocumentExtractor")] attribute, clearly indicating its purpose. This function operates asynchronously, allowing it to handle tasks without blocking the main thread, ensuring efficiency. The function accepts three parameters: BlobClient myBlob, representing the triggering blob, string name, the name of the blob, and ILogger log, an interface for logging information.

With the parameters in place, I set up some initial variables: the log level (“Information”) and the current UTC timestamp. Then, I read the blob’s URI from the BlobClient object into the blobUri variable. To provide immediate feedback, I logged a message to both the console and the logger indicating that the blob had been processed, including its name and URI.

Next, I retrieved essential configuration settings for the Document Intelligence service. These settings, stored in DocumentIntelligenceConfiguration, included the endpoint, API key, and Azure Blob Storage details. Using the API key, I created an AzureKeyCredential object, which I then used to instantiate a DocumentAnalysisClient.

This client served as my interface to the Document Intelligence service. Inside a try block, I passed the blob’s URI (fileUri) to the client’s AnalyzeDocumentFromUri operation, selecting the “prebuilt-read” model. Because WaitUntil.Completed is passed, the call returns only after the long-running analysis has finished, and operation.Value then holds the AnalyzeResult. Note that the service fetches the document from that URI itself, so the blob must be readable by it, for example through a URI that carries a SAS token when the container is private.
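Passing WaitUntil.Completed hides a polling loop: the SDK checks the status of the long-running analysis operation until it reaches a terminal state. The sketch below shows the idea in plain C#. OperationPolling, the status strings, and the fixed poll interval are assumptions for illustration; the real SDK manages polling for you.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class OperationPolling
{
    // Polls a status source until it reports a terminal state, then returns that state.
    // getStatusAsync stands in for a "get operation status" request to the service.
    public static async Task<string> WaitForCompletionAsync(
        Func<CancellationToken, Task<string>> getStatusAsync,
        TimeSpan pollInterval,
        CancellationToken cancellationToken = default)
    {
        while (true)
        {
            string status = await getStatusAsync(cancellationToken);
            if (status is "succeeded" or "failed" or "canceled")
                return status; // terminal state reached, stop polling

            // Wait before the next status check; honors cancellation.
            await Task.Delay(pollInterval, cancellationToken);
        }
    }
}
```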

The result contained the extracted document content, organized by page. Given the use case, I focused on the first page and iterated over its lines of text. For each line, I logged the content to both the console and the logger and appended an entry to a StringBuilder named logDetails, which accumulated all the extracted content along with the log level and timestamp.
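The line-accumulation step can be isolated from the SDK types to make its output format easy to see. This sketch takes plain strings in place of DocumentLine.Content values and produces the same “{level}--[{timestamp}]-{line}” entries. The ExtractionLog name and the fixed, culture-invariant timestamp format are choices made for this example; the original code uses the default DateTime formatting of the current culture.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text;

public static class ExtractionLog
{
    // Builds one "{level}--[{timestamp}]-{line}" entry per extracted line,
    // each terminated by Environment.NewLine (StringBuilder.AppendLine).
    public static string Build(IEnumerable<string> extractedLines, string logLevel, DateTime timestampUtc)
    {
        string stamp = timestampUtc.ToString("yyyy-MM-dd HH:mm:ss", CultureInfo.InvariantCulture);
        var logDetails = new StringBuilder();
        foreach (string line in extractedLines)
        {
            logDetails.AppendLine($"{logLevel}--[{stamp}]-{line}");
        }
        return logDetails.ToString();
    }
}
```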

Note: Although the Personal ID card is official, it is not valid. The data provided here is for demonstration purposes only.

```csharp
// Same class as Run; relies on System, System.Text, and Azure.Storage.Blobs.
// Appends text to a blob by downloading the existing content, adding the new text, and re-uploading.
static async Task AppendToBlobStorage(string connectionString, string containerName, string blobName, string text)
{
    var blobServiceClient = new BlobServiceClient(connectionString);
    var containerClient = blobServiceClient.GetBlobContainerClient(containerName);
    var blobClient = containerClient.GetBlobClient(blobName);

    // Create the target container on first use.
    await containerClient.CreateIfNotExistsAsync();

    var appendText = Encoding.UTF8.GetBytes(text + Environment.NewLine);

    if (await blobClient.ExistsAsync())
    {
        // Read the current content and build a buffer with the old bytes followed by the new ones.
        var existingBlob = await blobClient.DownloadContentAsync();
        var existingContent = existingBlob.Value.Content.ToArray();
        var combinedContent = new byte[existingContent.Length + appendText.Length];
        Buffer.BlockCopy(existingContent, 0, combinedContent, 0, existingContent.Length);
        Buffer.BlockCopy(appendText, 0, combinedContent, existingContent.Length, appendText.Length);

        // Overwrite the blob with the combined content.
        await blobClient.UploadAsync(new BinaryData(combinedContent), true);
    }
    else
    {
        // First write: the blob holds only the new text.
        await blobClient.UploadAsync(new BinaryData(appendText), true);
    }
}
```

After processing the document, I wrote the accumulated log details to a local text file named “Personal_ID_Extractor_Log.txt”. I then passed the same log details to AzureOpenAIHelper.LLMSummarization, a helper method (described below) that summarizes them with Azure OpenAI.

Finally, I appended this summary to a blob in Azure Blob Storage by calling the AppendToBlobStorage method, providing the necessary connection string, container name, and blob name. In the event of any exceptions, I had a catch block in place to rethrow them, ensuring that any errors were surfaced and could be addressed accordingly.
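The byte handling in AppendToBlobStorage can be checked without a storage account. The sketch below isolates it in a hypothetical BlobAppend.Combine helper. Note that the method as a whole is a read-modify-write: two function invocations running at the same time could each download the old content and overwrite each other’s summaries. If that matters, an append blob (AppendBlobClient in Azure.Storage.Blobs.Specialized) adds blocks to the end of a blob without rewriting it.

```csharp
using System;
using System.Text;

public static class BlobAppend
{
    // Encodes the new text as UTF-8 followed by a newline and places it
    // after the existing content in a new buffer.
    public static byte[] Combine(byte[] existingContent, string text)
    {
        byte[] appendText = Encoding.UTF8.GetBytes(text + Environment.NewLine);
        var combined = new byte[existingContent.Length + appendText.Length];
        Buffer.BlockCopy(existingContent, 0, combined, 0, existingContent.Length);
        Buffer.BlockCopy(appendText, 0, combined, existingContent.Length, appendText.Length);
        return combined;
    }
}
```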

```csharp
using System;
using System.Threading.Tasks;
using Azure;
using Azure.AI.OpenAI;

// Written against the early preview (1.0.0-beta) API of the Azure.AI.OpenAI package;
// later releases renamed several of these types.
public class AzureOpenAIHelper
{
    public static async Task<string> LLMSummarization(string extractedData)
    {
        Uri azureOpenAIEndpoint = new(DocumentIntelligenceConfiguration.AzureOpenAIEndpoint);
        string azureOpenAIKey = DocumentIntelligenceConfiguration.AzureOpenAIKey;
        // With Azure OpenAI, this value is the name of your model deployment.
        string azureOpenAIModel = DocumentIntelligenceConfiguration.AzureOpenAIModel;

        OpenAIClient client = new(azureOpenAIEndpoint, new AzureKeyCredential(azureOpenAIKey));
        ChatCompletionsOptions chatCompletionsOptions = new();

        string systemPrompt = "You are an expert in summarization, and you need to summarize the input data about a person in a natural way, " +
            "including who they are, where they live, and other relevant information based on the provided data.";

        // The system message sets the task; the user message carries the OCR output.
        AddMessageToChat(chatCompletionsOptions, systemPrompt, ChatRole.System);
        AddMessageToChat(chatCompletionsOptions, extractedData, ChatRole.User);

        ChatCompletions response = await client.GetChatCompletionsAsync(azureOpenAIModel, chatCompletionsOptions);

        // Return the text of the first generated choice.
        return response.Choices[0].Message.Content;
    }

    public static void AddMessageToChat(ChatCompletionsOptions options, string content, ChatRole role)
    {
        options.Messages.Add(new ChatMessage(role, content));
    }
}
```

Important Note: As the Microsoft Azure documentation explicitly recommends, keep keys and connection strings secure: define them in a secrets configuration file, store them as environment variables, or use a service such as Azure Key Vault.
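One simple way to follow that advice in Azure Functions is to read secrets from application settings, which the Functions host exposes to your code as environment variables (when running locally, the Values section of local.settings.json plays the same role). This sketch fails fast when a setting is missing. RequiredSettings and any setting names you pass to it are hypothetical; the article does not show how DocumentIntelligenceConfiguration loads its values.

```csharp
using System;

public static class RequiredSettings
{
    // Reads a setting from the process environment and throws if it is missing or blank,
    // so a misconfigured deployment fails at startup instead of on the first request.
    public static string Get(string name)
    {
        string? value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrWhiteSpace(value))
            throw new InvalidOperationException($"Missing required setting '{name}'.");
        return value;
    }
}
```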

In the development of my project, I implemented a helper function called LLMSummarization within the AzureOpenAIHelper class. This function was designed to leverage Azure’s OpenAI service to produce a summarized version of extracted data. The primary goal was to transform detailed information into a concise summary, presenting it in a natural and readable format.

To begin with, the LLMSummarization function was defined as an asynchronous static method, allowing it to perform its tasks without blocking the calling thread. The function accepted a single parameter, extractedData, which contained the detailed information that needed summarizing.

Within the function, I first established the necessary configuration settings by retrieving the Azure OpenAI endpoint, API key, and the specific model to be used from the DocumentIntelligenceConfiguration. Using these settings, I instantiated an OpenAIClient object, which served as the interface to interact with the Azure OpenAI service. I then set up the options for the chat completions by creating a ChatCompletionsOptions object.

To guide the summarization process, I crafted a system prompt that described the role of the summarization expert and the task at hand. This prompt was designed to instruct the AI to generate a natural summary of the input data, focusing on key details such as the individual’s identity, residence, and other relevant information.

To manage the chat messages, I created a helper method called AddMessageToChat. It accepts three parameters (the ChatCompletionsOptions object, the message content, and the role, either System or User) and adds the message to the Messages collection of the ChatCompletionsOptions object. Using AddMessageToChat, I added the system prompt and the extracted data to the chat completions options, each with its respective role.

With the options configured, I called the GetChatCompletionsAsync method of the OpenAIClient, passing in the model deployment name and the chat completions options. This asynchronous call sent the request to the Azure OpenAI service, which processed the input and generated a response.
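Under the hood, the SDK call roughly corresponds to an HTTP POST against the service’s REST API. As a sketch of that request (built but not sent), the code below assembles the same system and user messages. The endpoint, deployment name, API key, and api-version values are caller-supplied assumptions, and ChatRequestSketch is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Text;
using System.Text.Json;

public static class ChatRequestSketch
{
    // Builds a chat completions request for an Azure OpenAI deployment without sending it.
    public static HttpRequestMessage Build(
        Uri endpoint, string deploymentName, string apiVersion, string apiKey,
        string systemPrompt, string extractedData)
    {
        // Same two-message structure as LLMSummarization: instructions first, then the OCR output.
        var body = new
        {
            messages = new List<object>
            {
                new { role = "system", content = systemPrompt },
                new { role = "user", content = extractedData }
            }
        };

        var uri = new Uri(endpoint,
            $"openai/deployments/{Uri.EscapeDataString(deploymentName)}/chat/completions?api-version={Uri.EscapeDataString(apiVersion)}");

        var request = new HttpRequestMessage(HttpMethod.Post, uri)
        {
            Content = new StringContent(JsonSerializer.Serialize(body), Encoding.UTF8, "application/json")
        };
        request.Headers.Add("api-key", apiKey); // key-based authentication header
        return request;
    }
}
```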

The response, stored in a ChatCompletions object, contained one or more choices. I extracted the summarized content from the first choice and returned it as the result of the LLMSummarization function. In essence, the function shows how Azure’s OpenAI service can automate the summarization of detailed information.
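On the REST side, the counterpart of response.Choices[0].Message.Content is the choices[0].message.content path in the JSON response. This sketch reads it with System.Text.Json and guards against an empty choices array; the ChatResponseSketch name and the sample JSON used to exercise it are illustrative.

```csharp
using System;
using System.Text.Json;

public static class ChatResponseSketch
{
    // Returns the message content of the first choice in a chat completions response.
    public static string FirstChoiceContent(string responseJson)
    {
        using JsonDocument doc = JsonDocument.Parse(responseJson);
        JsonElement choices = doc.RootElement.GetProperty("choices");
        if (choices.GetArrayLength() == 0)
            throw new InvalidOperationException("The response contained no choices.");

        // GetString copies the value, so it remains valid after the document is disposed.
        return choices[0].GetProperty("message").GetProperty("content").GetString() ?? string.Empty;
    }
}
```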

By integrating this function into the pipeline, I was able to transform verbose data into concise summaries, enhancing the readability and usability of the information.

Recap

In conclusion, the integration of Azure’s Cognitive Services, including Document Intelligence and OpenAI, has enabled the creation of a robust, cloud-native solution for automated document processing.

By leveraging Azure Functions, I was able to build a serverless architecture that efficiently handles document uploads, performs optical character recognition, and generates human-readable summaries. This solution not only reduces the manual effort required for data extraction and processing but also enhances the overall accuracy and usability of the information.

The approach demonstrated in this article highlights the potential of combining AI services with cloud-native technologies to streamline workflows and improve operational efficiency. As we continue to explore and refine these capabilities, the possibilities for further enhancements and broader applications in various industries are both promising and exciting.

Next Station

And now, we are ready to move on to the next phase of our journey, where I will explore the capabilities of Azure AI Search, formerly known as Azure Cognitive Search. This powerful service will act as the heart of our solution, indexing data from the centralized storage and transforming it into a format suitable for advanced chat-based interactions and intelligent search functionality.

By integrating Azure AI Search, I aim to enhance the accessibility and relevance of the information, making it easier for users to find exactly what they need through intuitive and intelligent search experiences.