Leveling up existing RAG-based cloud-native solutions by using Azure AI Assistants

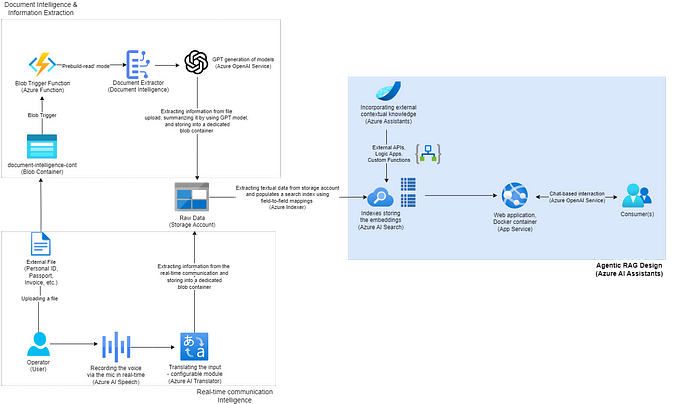

All right, we have reached the wrap-up of this cloud-native intelligence series built on Microsoft Azure AI Studio and Azure AI Services. The journey began with a module for real-time speech recognition and translation: a cloud-native solution that records a conversation in real time and saves the translated transcripts to a blob container in a storage account, using the Azure AI Speech and Azure AI Translator cognitive services.

The transcripts were saved in English, translated from the source language, which in this case was Macedonian. Next, I proceeded with building an intelligent document processing pipeline, introducing another cognitive service, Document Intelligence, for Optical Character Recognition (OCR) and extracting personal information from a personal ID document. With this data in mind, I demonstrated how we can leverage the Azure AI Search service to intelligently index data, optimize retrieval processes, and boost efficiency. Now, we’re at the point where we’re ready to do something with the data and even enhance it by introducing more use case-specific data and creating a human-like way of interacting with it.

Azure OpenAI Studio in the picture

At the end of last year, I started another technical series about building a customized ChatGPT-like solution with Microsoft Azure OpenAI Service. I began with a step-by-step introductory guide for creating an Azure cloud-native Generative AI solution.

Then, I explained the Chat Playground in detail using the GPT-3.5-turbo and GPT-4 models, concluding with deployment and integration, both from the studio itself and with the C#/.NET SDK. In these articles, I processed microbiome data specialized for bioengineering academic insights using cross-references and covered the approach of creating indexes and using embedding models directly in the Azure OpenAI Chat Playground. The same design approach is applicable here and can be used for creating such an instance on top of the data we already have in the centralized blob storage.

However, the purpose of this article is to take this approach to the next level, evolving from a RAG-based cloud-native solution to an assistant-powered solution that can also introduce additional contextual content and knowledge into the system.

What are AI Assistants?

Azure AI Assistants elevate functionality through custom instructions and are augmented by advanced tools like code interpreters and custom functions. But, as I’ve mentioned previously, there’s a common misconception that they operate autonomously simply because they can use Large Language Models (LLMs) to call functions or follow custom instructions. It’s worth mentioning once again that this isn’t entirely true.

While LLMs can perform tasks and make decisions without direct human intervention, the actual function-calling is orchestrated by external code or systems, not by the LLMs themselves.

Note: A GitHub repository for creating an Azure AI Assistant and showcasing how function calling is done behind the scenes with the .NET/C# SDK is available here.

Thus, before starting things off, I want to emphasize that I prefer the term “structured output” over “function calling” to avoid misunderstandings. Agents leveraging LLMs rely on function descriptions and can identify when additional information or context is needed. They recognize these needs, but it’s up to the underlying code or application to execute the functions and provide the results back to the LLM for creating a response.

So, while the LLM determines when functions should be called based on context and intent, the actual execution is handled externally (literally in the code behind).
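To make this division of labor concrete, here is a minimal sketch in plain Python. It does not use the Azure SDK: `fake_model` is a stand-in for the LLM, and `get_stakeholder_info`, the `FUNCTIONS` registry, and the sample record are all hypothetical names and data introduced only for illustration. The point is that the model only returns structured output describing a call, while the application code looks up the function, executes it, and feeds the result back.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical local function. In the article's setup, this role is played
# by an Azure Logic App; the record below is placeholder data.
def get_stakeholder_info(name: str) -> Dict[str, Any]:
    directory = {"Jane Doe": {"role": "an engineering manager", "company": "Contoso"}}
    return directory.get(name, {"error": f"no record for {name}"})

# Registry of functions the application is willing to execute on request.
FUNCTIONS: Dict[str, Callable[..., Any]] = {
    "get_stakeholder_info": get_stakeholder_info,
}

def fake_model(messages: List[Dict[str, str]]) -> Dict[str, str]:
    """Stand-in for the LLM. It never runs code; it only returns structured output."""
    last = messages[-1]
    if last["role"] == "user":
        # The model decides a function is needed and describes the call as data.
        return {
            "type": "function_call",
            "name": "get_stakeholder_info",
            "arguments": json.dumps({"name": "Jane Doe"}),
        }
    # A function result is now in the conversation, so the model can answer.
    result = json.loads(last["content"])
    return {"type": "message", "content": f"Jane Doe is {result['role']} at {result['company']}."}

def run_turn(user_text: str, model: Callable = fake_model) -> str:
    """Orchestration loop owned by the application, not by the model."""
    messages = [{"role": "user", "content": user_text}]
    while True:
        output = model(messages)
        if output["type"] == "message":
            return output["content"]
        # The application, not the LLM, looks up and executes the function.
        func = FUNCTIONS[output["name"]]
        result = func(**json.loads(output["arguments"]))
        messages.append({"role": "tool", "name": output["name"], "content": json.dumps(result)})

print(run_turn("Who is Jane Doe?"))
```

In a real assistant, `fake_model` would be replaced by a call to the deployed model, but the loop in `run_turn` would keep the same shape: the only thing that ever executes a function is the code behind.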

Azure AI Assistants

Azure AI Assistants are now an integral part of the Azure OpenAI Studio playground (represented by the Assistants Playground). They are technically still in preview, but already expose top-notch features: the File Search and Code Interpreter tools, plus Custom Functions that support both custom methods and Logic Apps platform integration. In their general form, they are an innovative suite of tools and services developed by Microsoft to enhance productivity and streamline workflows using the latest advancements in Generative Artificial Intelligence (GenAI).

By definition, the assistants are designed to integrate seamlessly with various Microsoft and third-party applications, providing users with intelligent, context-aware support and offering personalized recommendations, thereby enhancing user efficiency and decision-making capabilities.

Note: As of now, Azure AI Assistants are in the Preview stage, which means they are available for early access and testing by users who want to explore their capabilities before the official release. The Preview stage is a crucial step in ensuring that Azure AI Assistants meet the high standards of reliability, performance, and user satisfaction that the Microsoft Azure team aims to deliver.

Level Up the RAG-based Approach

It’s time to put everything together and design Agentic RAG behavior using the Azure AI Assistants Playground.

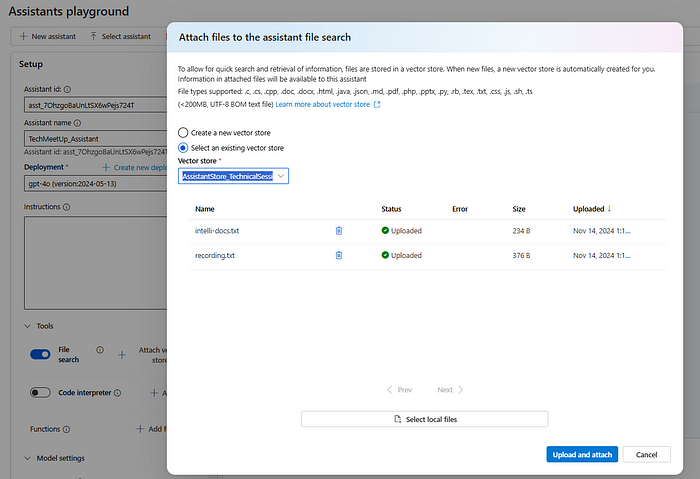

Vector Stores

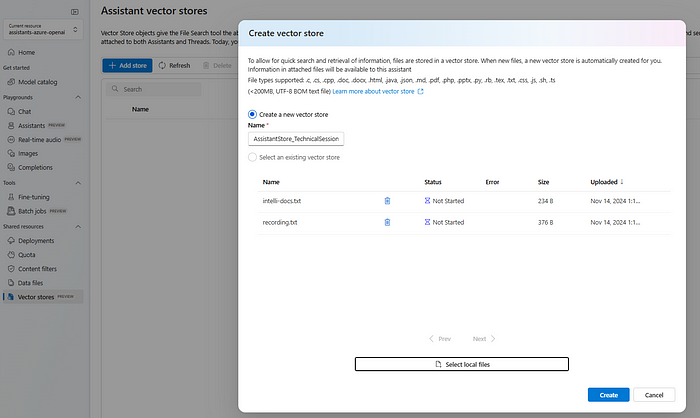

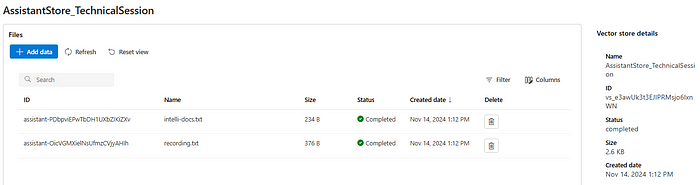

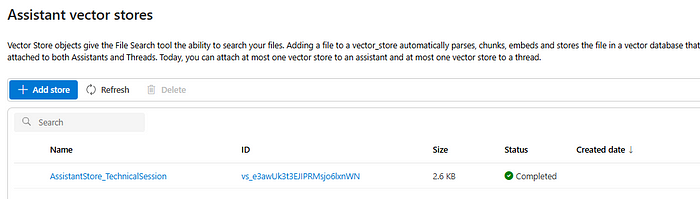

Let’s first start by creating a vector store, basically the same index attachment process we have when working in the Chat Playground and building RAG-based solutions on user-specific (confidential) data. In terms of the assistants, the vector store objects give the ‘File Search’ tool the ability to search through files — automatically parsing, chunking, embedding, and storing the file(s) in a vector database capable of both keyword and semantic search.
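The parse, chunk, embed, store, and search steps can be illustrated with a toy sketch in plain Python. This is not how the Azure vector store is implemented: the `ToyVectorStore` class, the file names, and the sample sentences are hypothetical, and the "embedding" is a simple word-count vector, so it only captures keyword overlap. A real embedding model is what adds the semantic part of the search.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def chunk_text(text: str, max_words: int = 8) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real service uses a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorStore:
    """Holds (file name, chunk text, vector) entries and ranks them against a query."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, str, Counter]] = []

    def add_file(self, name: str, text: str, max_words: int = 8) -> None:
        for chunk in chunk_text(text, max_words):
            self.entries.append((name, chunk, embed(chunk)))

    def search(self, query: str, top_k: int = 1) -> List[Tuple[str, str]]:
        query_vec = embed(query)
        scored = [(cosine(query_vec, vec), name, chunk) for name, chunk, vec in self.entries]
        scored.sort(key=lambda item: item[0], reverse=True)
        # Drop chunks that share nothing with the query.
        return [(name, chunk) for score, name, chunk in scored[:top_k] if score > 0]

store = ToyVectorStore()
store.add_file("transcript.txt", "The meetup covered speech translation with Azure AI Speech and Translator services")
store.add_file("id-extract.json", "Document Intelligence extracted the name and birth date from the ID card")
results = store.search("Which file mentions speech translation?")
print(results)
```

The File Search tool hides all of these steps behind a single upload, which is why attaching files to a vector store is enough to make them searchable by the assistant.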

Behind the scenes is the indexing process already described in the previous article related to intelligently indexing data, optimizing retrieval processes, and boosting efficiency with Azure AI Search Service. For our use case, let’s create a vector store object containing the recordings and document extraction files (coming from the up-to-date blob storage centralized location).

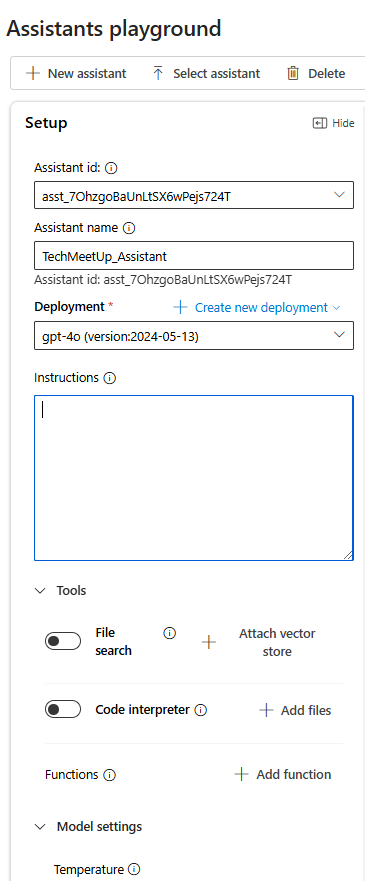

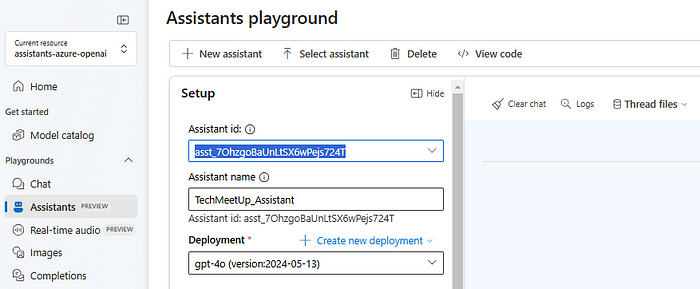

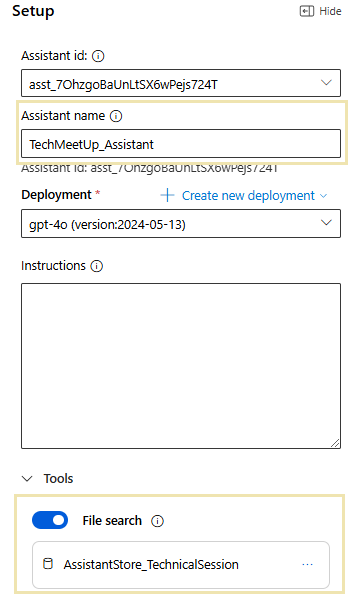

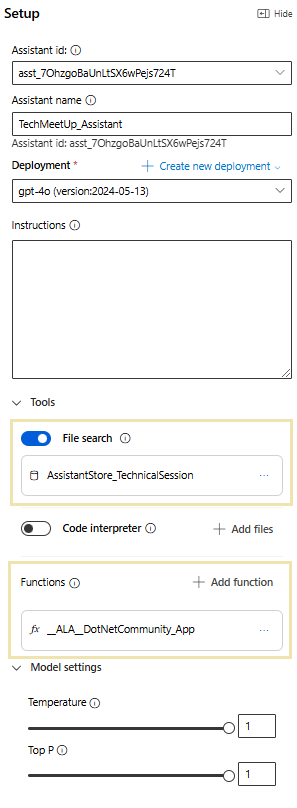

Now that we have the vector store, or the data itself, let’s continue the process and configure the assistant in the Azure AI Assistants Playground. As with vector stores, this playground is still in preview, but it offers a lot of features and integrations that bring RAG-based systems to the next level.

Assistants Playground

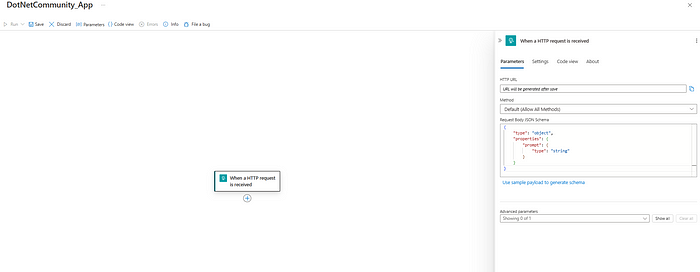

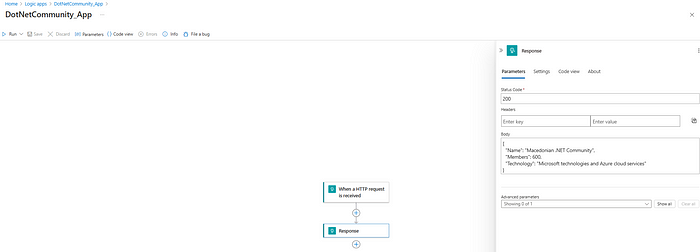

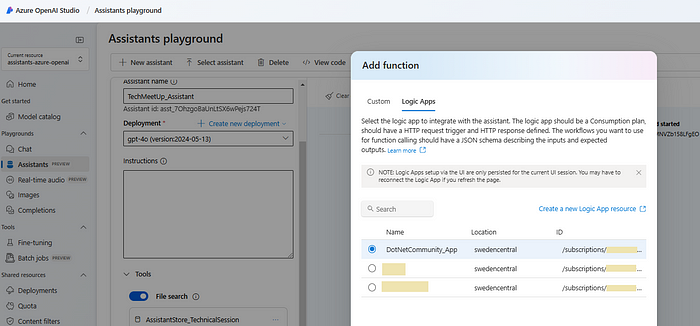

So far, I have connected the assistant with the ‘File Search’ contextual knowledge coming from the previously described AI Services. In this sense, it basically represents the standard RAG-based generative artificial intelligence design. Besides the tools, I will also introduce the configuration of Functions, where I will add a Logic App. Azure Logic Apps is a cloud-based service that enables users to automate workflows, integrate various applications, and manage data between different systems without writing extensive code. It provides a visual designer where we can create workflows by connecting different services, APIs, and platforms. So, in a general sense, let’s consider it an external API integration, or an additional knowledge point for the system.

Logic Apps Integration

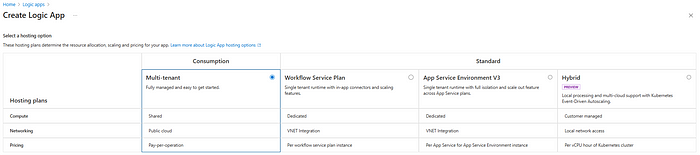

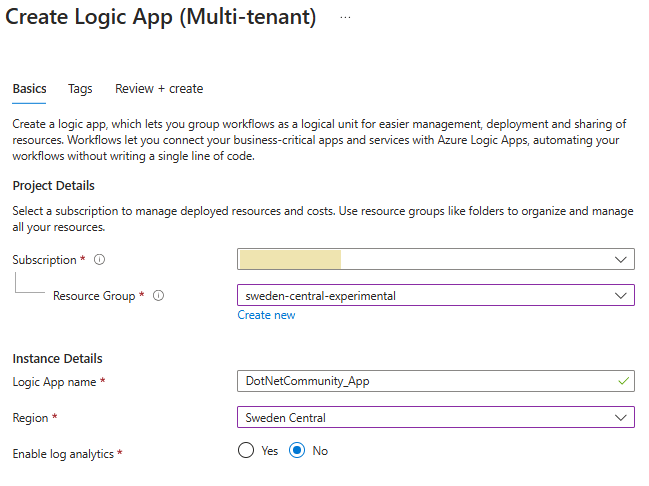

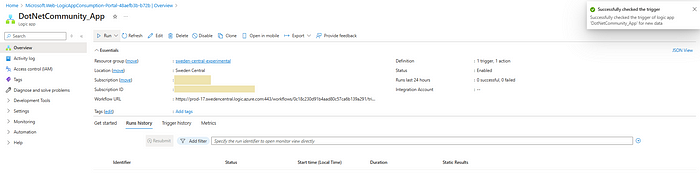

Note: For now, in order to be visible and ready for integration, the Logic App should be created with the Consumption hosting option within the same resource group and region as the Azure OpenAI instance.

Now, besides the blob storage knowledge, we also have an external function containing ‘information’ about the stakeholder from the technical meetup use case.
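Outside the playground, the same kind of integration could be wired up in code roughly as follows. This is only a sketch under stated assumptions: it assumes the Logic App starts with an HTTP request trigger, and the URL, the function name `get_stakeholder_info`, its description, and its single `name` parameter are hypothetical placeholders. The function description follows the JSON-schema style used for function tools, and the request is built but deliberately not sent.

```python
import json
import urllib.request
from typing import Any, Dict

# Placeholder; a real callback URL comes from the Logic App's HTTP trigger.
LOGIC_APP_URL = "https://example.invalid/workflows/stakeholder-info"

# Function description in the JSON-schema style used for function tools.
# The name, description, and parameter are hypothetical.
STAKEHOLDER_TOOL: Dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "get_stakeholder_info",
        "description": "Look up information about a stakeholder from the technical meetup.",
        "parameters": {
            "type": "object",
            "properties": {
                "name": {"type": "string", "description": "Full name of the stakeholder"},
            },
            "required": ["name"],
        },
    },
}

def build_logic_app_request(arguments_json: str, url: str = LOGIC_APP_URL) -> urllib.request.Request:
    """Turn the model's JSON arguments into an HTTP POST for the Logic App (not sent here)."""
    args = json.loads(arguments_json)
    required = STAKEHOLDER_TOOL["function"]["parameters"]["required"]
    missing = [key for key in required if key not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return urllib.request.Request(
        url,
        data=json.dumps(args).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

request = build_logic_app_request('{"name": "Jane Doe"}')
print(request.get_method(), request.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would actually invoke the workflow. Checking the required arguments first keeps a malformed model output from reaching the Logic App.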

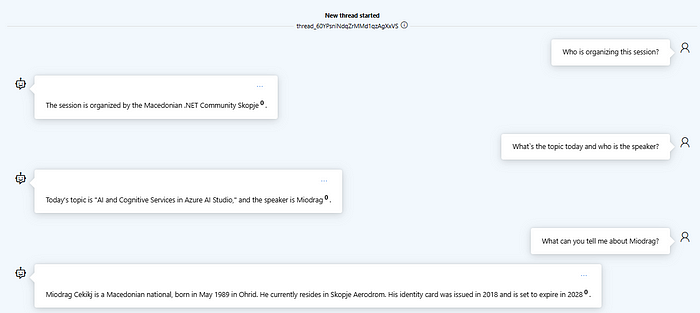

Let’s give it a try

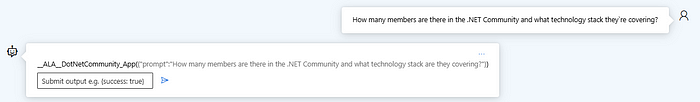

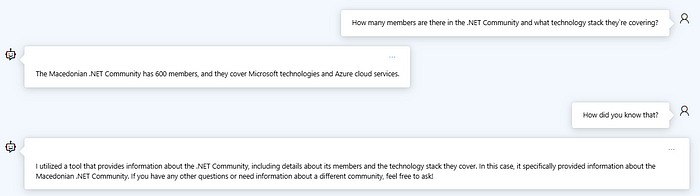

Voila! We can see that the assistant answers based on the knowledge within the vector store and also recognizes actionable endpoints (the Azure Logic App) from which it can fetch additional data relevant to the user query.

To Sum It All Up

What we’ve just achieved is a leveled-up RAG, or agentic RAG, designed around the concept of Azure AI Assistants. It is actionable by nature, but the execution is done behind the scenes, in the code behind. The main benefit of using AI assistants lies in the seamless integration of advanced cloud infrastructure and enterprise-grade AI tools, which significantly enhance scalability, accuracy, and operational efficiency.

In this manner, RAG-based solutions gain enhanced data retrieval capabilities, allowing them to access and process large amounts of structured and unstructured data in real time. Additionally, Azure’s enterprise-grade security, compliance features, and hybrid-cloud capabilities provide a secure environment for deploying and managing GenAI RAG solutions at scale, which is crucial for organizations handling sensitive data.

Thank you for dedicating your time to exploring this series. I hope it has been both enlightening and inspiring for you. Your thoughts and feedback are incredibly valuable, so don’t hesitate to share your suggestions and perspectives. Let’s ignite a conversation and make this journey even more enriching together! If you found the content beneficial, please give it a clap, and share it with others. Your support means the world to me!