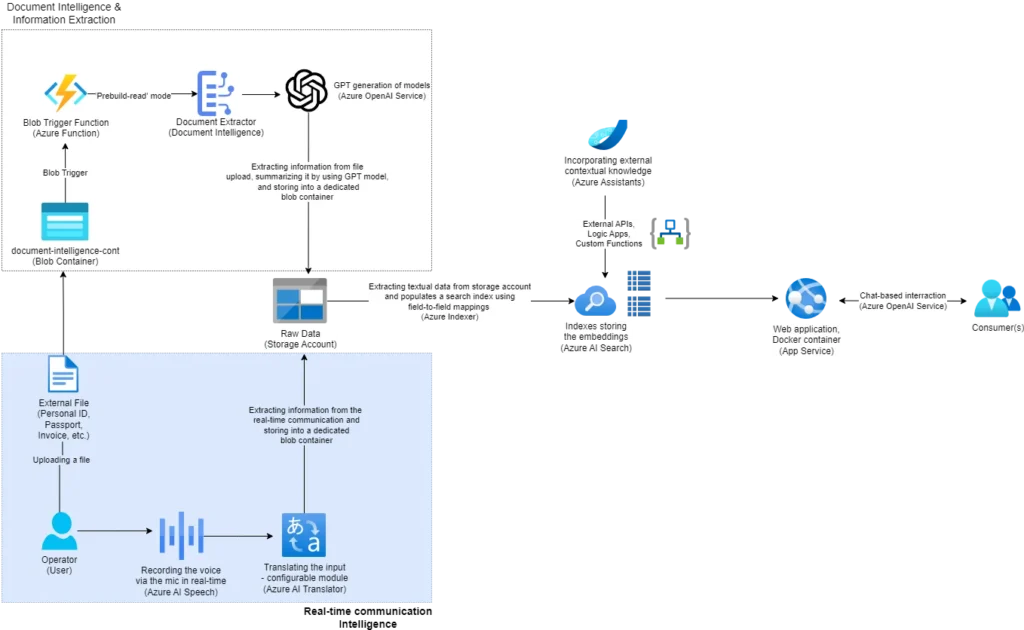

Implementing Real-Time Speech Recognition, Translation, and Data Storage Using Azure Cognitive Services

I get the impression that we are living in one of the most exciting and, at the same time, dramatic periods of technological development. By nature, I see myself as an analytical type and an enthusiast, especially when it comes to applied artificial intelligence in industry. I will write about my analytical side and the information I analyze on a future occasion; for now, I want to focus on applied artificial intelligence and the cognitive services it powers in real-world, everyday scenarios. In particular, I want to emphasize how modular cloud services democratize AI, and how cloud-based platforms put both abstraction and power into our hands.

I am eager to share a broader picture in a series of posts on artificial intelligence trends and cognitive services, specifically those offered by Microsoft Azure. In the grand scheme of things, large language models and GPT-like systems are an expected topic, because they have become much more than just a technological trend, so I plan to cover them in a wider context. I want to start with some of the AI-based services available through Azure. By available, I mean not only access and geographical reach, but also a fair pricing model with high performance and scalability options. I would also highlight their intuitive usage, as well as the SDK and REST support that allows full integration into existing platforms and infrastructure across a wide range of technologies.

I will begin by introducing Azure AI Speech and Azure AI Translator and how we can use them for automatic speech recognition and real-time translation. More specifically, the main goal will be to develop a cloud-native solution that allows recording a conversation in real-time and saving the transcripts in a blob container on a storage account. The transcripts will be saved in English, translated from the source language, which in this case will be my native Macedonian. Let’s roll up our sleeves and get started.

Azure AI Speech

Azure AI Speech is part of Microsoft’s Azure AI services (formerly Cognitive Services) and is designed to bring real-time and batch speech processing into applications. It offers a comprehensive set of tools for transcribing speech to text with high accuracy, producing natural-sounding speech from text, translating spoken audio, and recognizing speakers during conversations.

What I particularly like about speech to text is the dedicated Speech Studio, which lets you quickly test audio against a speech recognition endpoint without writing any code. It is built in and provided natively by Azure, complete with documentation, code samples, and CLI guidance. Text to speech, on the other hand, converts input text into human-like synthesized speech, including neural voices powered by deep neural networks. Azure AI Speech also includes a Speaker Recognition module that verifies and identifies speakers by their unique voice characteristics. Language support varies by feature, so it is worth checking which languages each feature covers before designing your application around it.

I will use the real-time speech to text functionality to transcribe what I say into the default PC microphone. For this purpose, I will use the natively supported .NET/C# SDK. Besides C#, the Speech SDK supports C++, Go, Java, JavaScript, Objective-C, Python, and Swift.

Azure AI Translator

Let’s continue with Azure AI Translator, a cloud-based neural machine translation service that is part of the Azure AI services family and can be used from any operating system. In general, it translates text between many languages in real time, and I will use it together with the Speech service so that recognized speech is first transcribed and then translated on the fly. Besides real-time text translation, it also offers document translation and customizable, domain-specific translation (Custom Translator) tailored to the preferred language style or terminology of a specific industry or business domain.

Language support depends on the feature, so, as with the Speech service, I recommend checking the language support table and documentation before you start.

Wrapping Speech and Translator Together

Let’s see now how we can use both cognitive services together to provide a real-time service for identifying speech, extracting the transcription, and translating it before sending it to the centralized blob storage location.

Let’s first set up the keys, endpoints, and connection strings needed for accessing the Azure AI Speech, Azure AI Translator, and Azure Storage Account instances. Their creation is very well documented in the official documentation, which I have linked within each section before.

Important Note: As the documentation explicitly states, keep keys and connection strings secure: define them in a secrets configuration file, store them as environment variables, or use a service such as Azure Key Vault.
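The article does not show how AIServicesConfiguration is populated. As a minimal sketch, assuming the values are stored as environment variables, its properties could be backed by a helper like the hypothetical GetRequiredSetting below, which fails fast with a clear message when a value is missing. The helper and the environment variable names are assumptions, not part of the original solution.

```csharp
using System;

// Hypothetical helper: reads a required setting from an environment variable
// and throws a descriptive exception if it is missing or empty.
static string GetRequiredSetting(string variableName)
{
    string? value = Environment.GetEnvironmentVariable(variableName);
    if (string.IsNullOrWhiteSpace(value))
    {
        throw new InvalidOperationException(
            $"Missing configuration value: set the '{variableName}' environment variable.");
    }
    return value;
}

// Example usage with assumed variable names:
// string speechKey = GetRequiredSetting("AZURE_SPEECH_KEY");
// string serviceRegion = GetRequiredSetting("AZURE_SPEECH_REGION");
```

Failing at startup is usually easier to diagnose than a 401 from the service halfway through a recording session. If you use Azure Key Vault instead, the SecretClient from the Azure.Security.KeyVault.Secrets package can load the same values at startup.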

```csharp
// Azure Speech Service configuration
string speechKey = AIServicesConfiguration.AzureAISpeechKey;
string serviceRegion = AIServicesConfiguration.AzureAISpeechServiceRegion;

// Azure Translator configuration
string translatorKey = AIServicesConfiguration.AzureAITranslatorKey;
string endpoint = AIServicesConfiguration.AzureAITranslatorEndpoint;
string region = AIServicesConfiguration.AzureAITranslatorRegion;

// Azure Blob Storage configuration
string blobConnectionString = AIServicesConfiguration.BlobStorageConnectionString;
string containerName = AIServicesConfiguration.BlobStorageContainerName;
string blobName = AIServicesConfiguration.BlobStorageBlobName;
```

The only additional configuration for this specific case is setting up the speech recognizer to transcribe Macedonian (mk-MK) from the default device microphone.

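```csharp
// Recognize Macedonian speech from the default microphone.
var speechConfig = SpeechConfig.FromSubscription(speechKey, serviceRegion);
speechConfig.SpeechRecognitionLanguage = "mk-MK";

// AudioConfig and SpeechRecognizer are IDisposable; `using` releases the microphone.
using var audioConfig = AudioConfig.FromDefaultMicrophoneInput();
using var recognizer = new SpeechRecognizer(speechConfig, audioConfig);
```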

And that’s basically it in terms of initialization and configuration. Now we are ready to implement the logic for the real-time identification, transcription, translation, and preservation flow.

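```csharp
recognizer.Recognized += async (s, e) =>
{
    if (e.Result.Reason == ResultReason.RecognizedSpeech)
    {
        Console.WriteLine($"RECOGNIZED: {e.Result.Text}");

        // Translate the recognized Macedonian text into English.
        string translatedText = await TranslateText(translatorKey, endpoint, e.Result.Text, "en", region);

        // Store the translation only if the Translator call succeeded.
        if (!string.IsNullOrEmpty(translatedText))
        {
            await AppendToBlobStorage(blobConnectionString, containerName, blobName, translatedText);
        }
    }
};

await recognizer.StartContinuousRecognitionAsync().ConfigureAwait(false);

// Keep the session open until the user is done; stopping right after
// starting would end recognition before anything is captured.
Console.WriteLine("Speak into the microphone. Press Enter to stop.");
Console.ReadLine();

await recognizer.StopContinuousRecognitionAsync().ConfigureAwait(false);
```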

In this section of the code, the Recognized event of the SpeechRecognizer is used to process each recognized phrase. The event is subscribed to with an asynchronous handler, so each result is handled as soon as the service finalizes it, while continuous recognition keeps listening in the background until StopContinuousRecognitionAsync is called. The first step inside the handler is to check whether the result's reason is RecognizedSpeech, which indicates that the service successfully recognized the speech.

If the speech is successfully recognized, the handler proceeds to print the recognized text to the console, providing immediate feedback about what was understood. This can be particularly useful for debugging or real-time monitoring purposes.

Next, the handler calls an asynchronous method named TranslateText. This method is designed to translate the recognized text into English. It requires several parameters: the translator key, endpoint, the text to be translated, the target language (“en” for English), and the region. By calling this method, the code leverages the Azure Translator service to convert the recognized Macedonian speech into English text.
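Since Translator is also exposed as a REST API, it can help to see what those parameters boil down to on the wire. The sketch below builds, but does not send, a Translator v3 translate request from the same inputs TranslateText receives: endpoint, key, region, text, and target language. BuildTranslateRequest is a hypothetical helper; the URL shape, the api-version=3.0 parameter, and the Ocp-Apim-Subscription-Key and Ocp-Apim-Subscription-Region headers follow the Translator v3 REST reference, while the key and region values are placeholders.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;

// Hypothetical helper: builds (but does not send) a Translator v3 request
// that translates one piece of text into targetLanguage.
static HttpRequestMessage BuildTranslateRequest(
    string translatorEndpoint, string key, string resourceRegion, string inputText, string targetLanguage)
{
    var requestUri = new Uri(
        $"{translatorEndpoint.TrimEnd('/')}/translate?api-version=3.0&to={Uri.EscapeDataString(targetLanguage)}");

    var message = new HttpRequestMessage(HttpMethod.Post, requestUri);
    message.Headers.Add("Ocp-Apim-Subscription-Key", key);
    message.Headers.Add("Ocp-Apim-Subscription-Region", resourceRegion);

    // The body is a JSON array of objects, each with a "Text" property.
    string json = JsonSerializer.Serialize(new[] { new { Text = inputText } });
    message.Content = new StringContent(json, Encoding.UTF8, "application/json");
    return message;
}

var translateRequest = BuildTranslateRequest(
    "https://api.cognitive.microsofttranslator.com", "demo-key", "westeurope", "Zdravo", "en");
Console.WriteLine(translateRequest.RequestUri);
// https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&to=en
```

Sending it with an HttpClient and a real key would return the JSON that the SDK exposes as TranslatedTextItem objects. The SDK remains the more convenient option; the sketch only makes the key/region/target mapping visible.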

Once the translation is complete, the translated text needs to be stored for future use or reference. This is accomplished by calling another asynchronous method named AppendToBlobStorage. This method appends the translated text to a blob in Azure Blob Storage. It takes several parameters as well: the blob connection string, the name of the container where the blob resides, the name of the blob itself, and the translated text to be stored. This ensures that the translated speech is not only recognized and translated but also persistently stored for later retrieval or analysis.
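One detail worth considering: Recognized is a regular .NET event, so the async lambda runs as an async void handler that the SDK does not await. If phrases are recognized in quick succession, two handlers can be translating and appending at the same time, and because AppendToBlobStorage downloads, combines, and re-uploads the blob, one write can overwrite the other. A common way to avoid this (an alternative, not what the original solution does) is to let the event handler only enqueue the recognized text and have a single consumer process the queue in order. The sketch below uses System.Threading.Channels; ProcessInOrderAsync is a hypothetical name, and the two delegates stand in for TranslateText and AppendToBlobStorage.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Channels;
using System.Threading.Tasks;

// Single consumer: translates and stores items strictly in arrival order.
// 'translate' and 'store' stand in for TranslateText and AppendToBlobStorage.
static async Task ProcessInOrderAsync(
    ChannelReader<string> reader,
    Func<string, Task<string?>> translate,
    Func<string, Task> store)
{
    await foreach (string recognizedText in reader.ReadAllAsync())
    {
        string? translatedText = await translate(recognizedText);
        if (!string.IsNullOrEmpty(translatedText))
        {
            await store(translatedText);
        }
    }
}

// Demo with in-memory stand-ins instead of the Azure services.
var channel = Channel.CreateUnbounded<string>(new UnboundedChannelOptions { SingleReader = true });
var stored = new List<string>();

Task consumer = ProcessInOrderAsync(
    channel.Reader,
    async phrase => { await Task.Delay(10); return phrase.ToUpperInvariant(); },
    line => { stored.Add(line); return Task.CompletedTask; });

// In the real app, the Recognized handler would only call channel.Writer.TryWrite(e.Result.Text).
foreach (string recognized in new[] { "first phrase", "second phrase", "third phrase" })
{
    channel.Writer.TryWrite(recognized);
}
channel.Writer.Complete();
await consumer;

Console.WriteLine(string.Join(" | ", stored));
// FIRST PHRASE | SECOND PHRASE | THIRD PHRASE
```

In the application itself, the consumer would be started before StartContinuousRecognitionAsync, and channel.Writer.Complete() followed by awaiting the consumer would run after StopContinuousRecognitionAsync, so that queued phrases are flushed before the program exits.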

Now, let’s drill down into the TranslateText method, which takes care of translating the recognized text.

```csharp
using Azure;
using Azure.AI.Translation.Text;

public static async Task<string> TranslateText(string subscriptionKey, string endpoint, string text, string targetLanguage, string region)
{
    AzureKeyCredential credential = new(subscriptionKey);
    TextTranslationClient client = new(credential, region);

    try
    {
        Response<IReadOnlyList<TranslatedTextItem>> response = await client.TranslateAsync(targetLanguage, text).ConfigureAwait(false);
        IReadOnlyList<TranslatedTextItem> translations = response.Value;
        TranslatedTextItem translation = translations.FirstOrDefault();

        Console.WriteLine($"Detected languages of the input text: {translation?.DetectedLanguage?.Language} with score: {translation?.DetectedLanguage?.Score}.");
        Console.WriteLine($"Text was translated to: '{translation?.Translations?.FirstOrDefault()?.To}' and the result is: '{translation?.Translations?.FirstOrDefault()?.Text}'.");

        return translation?.Translations?.FirstOrDefault()?.Text;
    }
    catch (RequestFailedException exception)
    {
        // Log the service error and fall through to return null.
        Console.WriteLine($"Error Code: {exception.ErrorCode}");
        Console.WriteLine($"Message: {exception.Message}");
    }

    return null;
}
```

In the context of the previous code logic, the TranslateText method translates the recognized speech into a target language, English in this case. It is an asynchronous method that takes parameters for the subscription key, endpoint, text to be translated, target language, and region.

The method starts by creating a TextTranslationClient from the provided subscription key and region. Note that the endpoint parameter is not used in this version, so the client talks to the SDK's default global Translator endpoint. Inside a try-catch block, the method calls the client's TranslateAsync method to translate the text into the target language; because no source language is passed, the service detects it automatically. The response contains a list of TranslatedTextItem objects.

The method takes the first translation item from the list, which contains the detected language and the translated text. For informational purposes, it prints the detected language and its confidence score, as well as the translated text, and then returns the translation. If the service call fails with a RequestFailedException, the error code and message are printed to the console and the method returns null.
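The TranslatedTextItem objects used above model the JSON that the Translator v3 REST API returns. As a hedged illustration, here is how the same three values (detected language, score, and first translation) could be read with System.Text.Json. ParseTranslateResponse is a hypothetical helper, and the sample JSON is hand-written to match the documented response shape, not captured from a real call.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper: pulls the detected language, its score, and the first
// translation out of a Translator v3 response body.
static (string? Language, double? Score, string? Text) ParseTranslateResponse(string responseJson)
{
    using JsonDocument document = JsonDocument.Parse(responseJson);
    JsonElement firstItem = document.RootElement[0];

    string? detectedLanguage = null;
    double? detectedScore = null;
    // detectedLanguage is only present when language auto-detection was used.
    if (firstItem.TryGetProperty("detectedLanguage", out JsonElement detected))
    {
        detectedLanguage = detected.GetProperty("language").GetString();
        detectedScore = detected.GetProperty("score").GetDouble();
    }

    string? translatedText = firstItem.GetProperty("translations")[0].GetProperty("text").GetString();
    return (detectedLanguage, detectedScore, translatedText);
}

// Hand-written sample in the documented response shape.
string sampleResponse =
    "[{\"detectedLanguage\":{\"language\":\"mk\",\"score\":1.0}," +
    "\"translations\":[{\"text\":\"Hello, how are you?\",\"to\":\"en\"}]}]";

var result = ParseTranslateResponse(sampleResponse);
Console.WriteLine($"{result.Language} ({result.Score}): {result.Text}");
```

Like the SDK-based version, this assumes at least one item and one translation are present; a production version would check RootElement.GetArrayLength() before indexing.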

Lastly, we have the logic for appending the translated text to the centralized blob storage location.

```csharp
using System.Text;
using Azure.Storage.Blobs;

static async Task AppendToBlobStorage(string connectionString, string containerName, string blobName, string text)
{
    var blobServiceClient = new BlobServiceClient(connectionString);
    var containerClient = blobServiceClient.GetBlobContainerClient(containerName);
    var blobClient = containerClient.GetBlobClient(blobName);

    // Make sure the target container exists.
    if (!await containerClient.ExistsAsync())
    {
        await containerClient.CreateAsync();
    }

    var appendText = Encoding.UTF8.GetBytes(text + Environment.NewLine);

    if (await blobClient.ExistsAsync())
    {
        // Download the current content and build existing + new bytes.
        var existingBlob = await blobClient.DownloadContentAsync();
        var existingContent = existingBlob.Value.Content.ToArray();
        var combinedContent = new byte[existingContent.Length + appendText.Length];
        Buffer.BlockCopy(existingContent, 0, combinedContent, 0, existingContent.Length);
        Buffer.BlockCopy(appendText, 0, combinedContent, existingContent.Length, appendText.Length);

        // Overwrite the blob with the combined content.
        await blobClient.UploadAsync(new BinaryData(combinedContent), true);
    }
    else
    {
        await blobClient.UploadAsync(new BinaryData(appendText), true);
    }
}
```

The AppendToBlobStorage method appends text to a specified blob in an Azure Blob Storage container. It is an asynchronous method that takes four parameters: the storage account connection string, the container name, the blob name, and the text to append.

The method begins by creating a BlobServiceClient using the provided connection string, which allows it to interact with the Azure Blob Storage service. It then retrieves a BlobContainerClient for the specified container and a BlobClient for the specified blob within that container.

The method first checks if the container exists using the ExistsAsync method. If the container does not exist, it creates the container by calling CreateAsync. This ensures that the container is available before attempting to append text to the blob.

Next, the text is converted to a byte array using UTF-8 encoding, with Environment.NewLine appended so that each entry appears on its own line. The method then checks whether the blob already exists using ExistsAsync. If it does, the method downloads the current content with DownloadContentAsync and combines it with the new text by copying both byte arrays into a single array.

The combined content is then uploaded back to the blob with UploadAsync, with the overwrite parameter set to true to replace the existing content. If the blob does not exist, the method simply uploads the new text as the blob's initial content. Keep in mind that this download-and-reupload approach transfers the whole transcript on every append, so its cost grows with the size of the blob; for long sessions, an append blob, which supports adding blocks to the end directly, may be a better fit.
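The combine step itself is plain byte-array work. As a small, self-contained sketch (AppendLine is a hypothetical helper, not part of the original code), the same logic can be written with Array.CopyTo. It uses "\n" instead of Environment.NewLine so the transcript has the same line endings whether the recorder runs on Windows or Linux, which is a design choice rather than a requirement.

```csharp
using System;
using System.Text;

// Hypothetical helper mirroring the combine step in AppendToBlobStorage:
// returns the existing bytes followed by the UTF-8 bytes of text plus "\n".
static byte[] AppendLine(byte[] existingContent, string text)
{
    byte[] newLine = Encoding.UTF8.GetBytes(text + "\n");
    byte[] combined = new byte[existingContent.Length + newLine.Length];
    existingContent.CopyTo(combined, 0);
    newLine.CopyTo(combined, existingContent.Length);
    return combined;
}

byte[] transcript = AppendLine(Array.Empty<byte>(), "Hello, how are you?");
transcript = AppendLine(transcript, "I am fine, thank you.");
Console.Write(Encoding.UTF8.GetString(transcript));
```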

Time to Give It a Try

All right, everything is set up, so it’s time to say something and check the dedicated blob storage for the transcription.

There we are: the speech and translation services on Azure are successfully integrated. I also want to emphasize the need for clear speech input in terms of grammar (narrative correctness), as well as a noise-free environment, to get the most out of it. There is also room for enhancement, for example by sending the content to an LLM for summarization and enrichment, but I will showcase that in a follow-up article, where I’m going to present the Document Intelligence service.

What’s Next

This gives us a solid baseline for real-time recording and translation, with the transcription preserved in a centralized cloud storage location. The next component is document intelligence, an automatic optical character recognition (OCR) module that will enhance the solution by extending the reference knowledge with information extracted from files (images, scanned documents, etc.). To achieve that, we will explore another service from Microsoft’s AI portfolio: Azure AI Document Intelligence (formerly Form Recognizer).